- The paper introduces Agentic Neural Networks, a framework that models multi-agent systems as layered networks with self-evolving capabilities.

- It employs a two-phase optimization strategy with forward dynamic team selection and backward textual refinement to enhance agent collaboration.

- On HumanEval, ANN reaches 72.7% accuracy with GPT-3.5 and 87.8% with GPT-4, and results across four benchmarks show it outperforming static multi-agent baselines.

Agentic Neural Networks: Self-Evolving Multi-Agent Systems via Textual Backpropagation

The paper "Agentic Neural Networks: Self-Evolving Multi-Agent Systems via Textual Backpropagation" (2506.09046) introduces the Agentic Neural Network (ANN), a novel framework that applies neural network principles to multi-agent systems (MAS). The ANN framework aims to address the limitations of static, manually engineered multi-agent configurations by conceptualizing multi-agent collaboration as a layered neural network architecture, where each agent acts as a node and each layer forms a cooperative team focused on a specific subtask.

Core Methodology

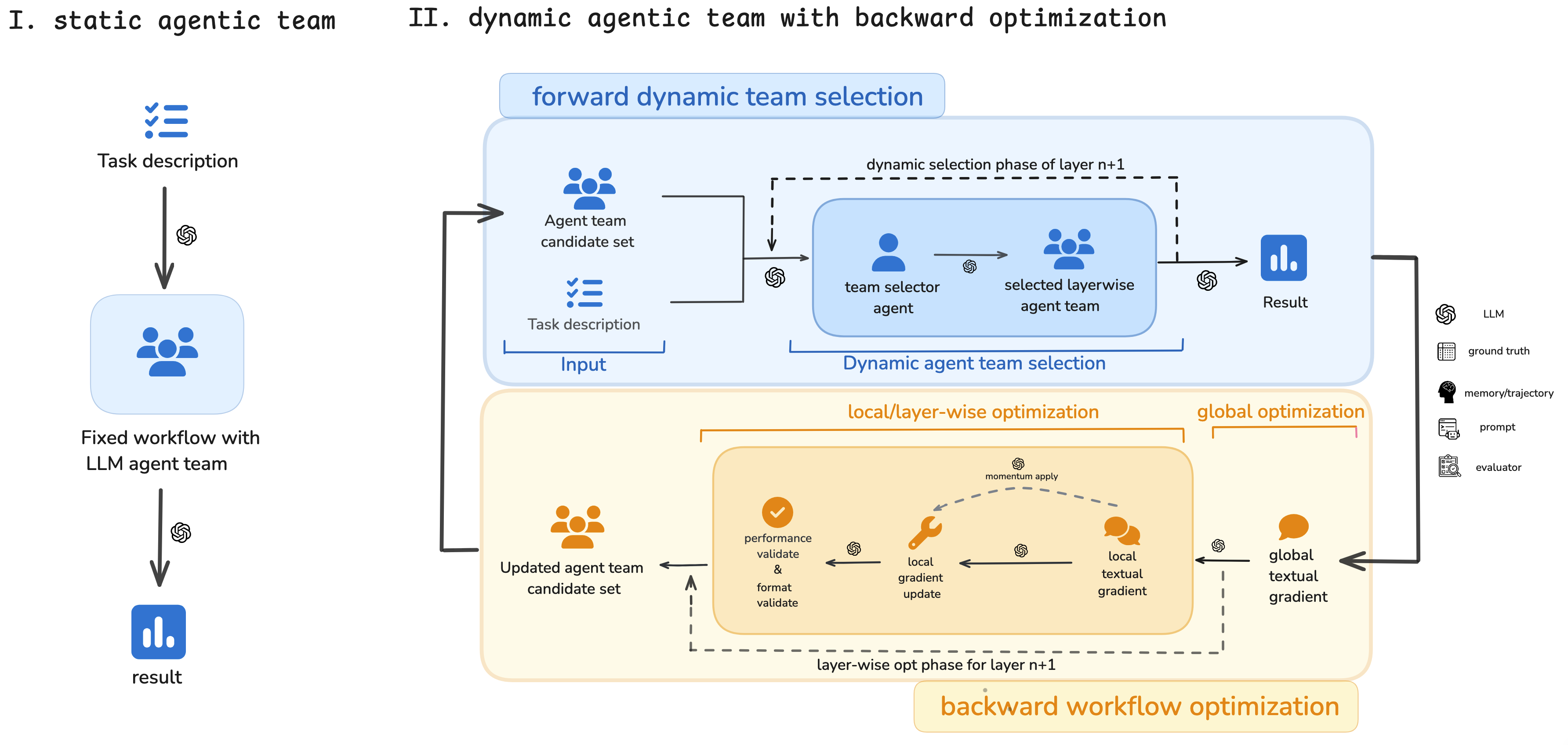

The ANN methodology draws inspiration from classic neural networks, replacing numerical weight optimization with dynamic agent-based team selection and iterative textual refinement. It employs a two-phase optimization strategy: a forward phase that selects agent teams dynamically and a backward phase that refines them through textual feedback.

Forward Dynamic Team Selection

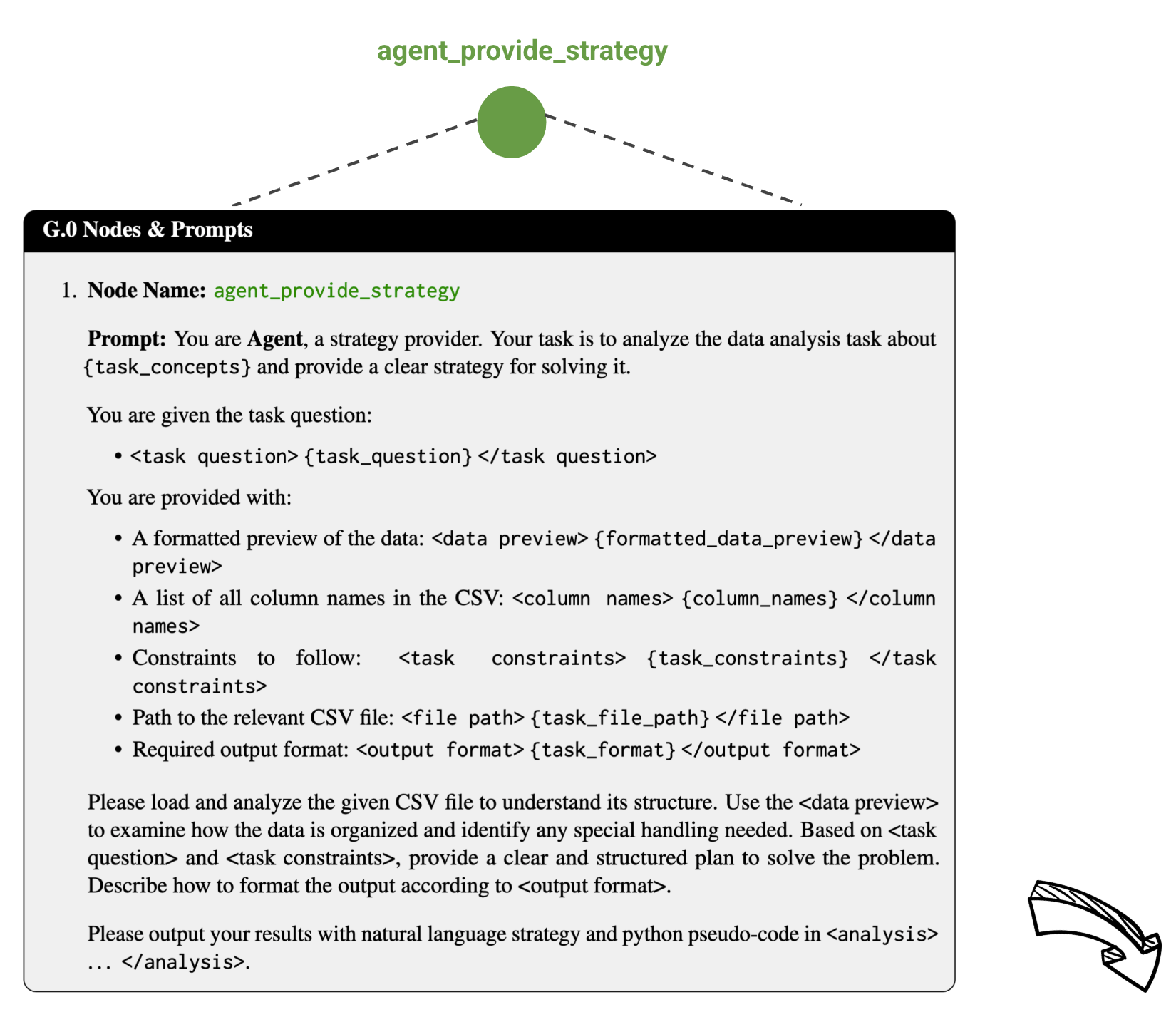

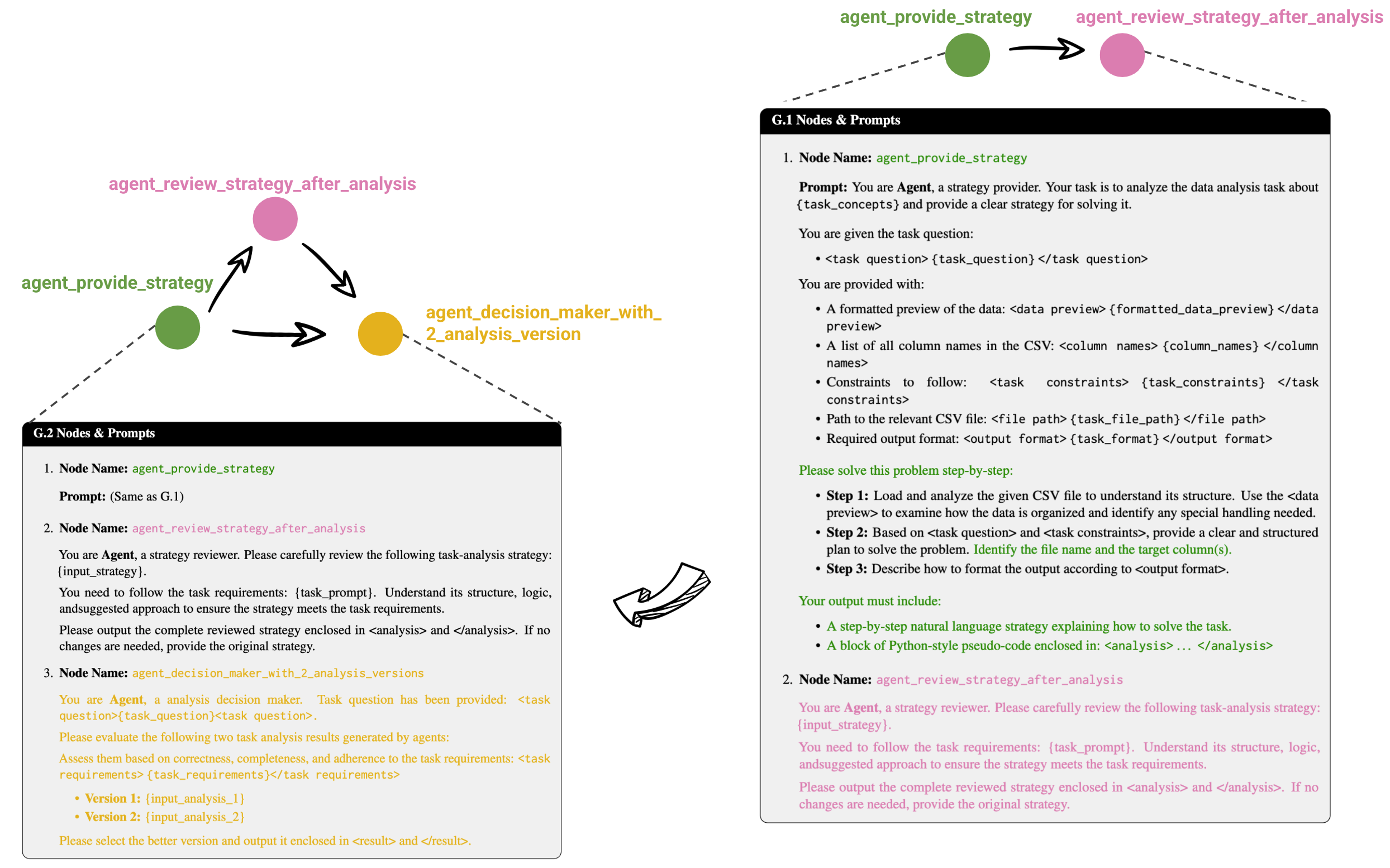

In the forward phase, the framework decomposes a complex task into subtasks, assigning each to a layer of specialized agents. This process involves:

- Defining the ANN structure: The architecture mimics neural networks, where each layer consists of agent nodes connected in a sequence to facilitate information flow.

- Selecting Layer-wise Aggregation Functions: A mechanism dynamically determines the most appropriate aggregation function at each layer, combining outputs from multiple agents based on subtask requirements.

The aggregation function for layer $\ell$ is selected by

$$f_\ell = \text{DynamicRoutingSelect}(F_\ell, \ell, I_\ell, I),$$

where $\ell$ is the layer index, $F_\ell$ is the set of candidate aggregation functions, $I_\ell$ is the input to the layer, and $I$ is the task-specific information.
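The forward pass described above can be illustrated with a minimal Python sketch. Everything here is an assumption made for illustration: agents are modeled as plain string-to-string callables, the two candidate aggregators (`concat`, `majority_vote`) are hypothetical, and the keyword rule inside `dynamic_routing_select` is only a placeholder, since this summary does not specify how the selection is made.

```python
from typing import Callable, Dict, List

Agent = Callable[[str], str]             # an agent maps an input string to an output string
Aggregator = Callable[[List[str]], str]  # combines the outputs of one layer into one string


def concat(outputs: List[str]) -> str:
    """Keep every agent's contribution, one per line."""
    return "\n".join(outputs)


def majority_vote(outputs: List[str]) -> str:
    """Return the most frequent output; ties go to the earliest one."""
    return max(outputs, key=outputs.count)


# Hypothetical candidate set, playing the role of F_l for every layer.
CANDIDATES: Dict[str, Aggregator] = {"concat": concat, "majority_vote": majority_vote}


def dynamic_routing_select(candidates: Dict[str, Aggregator], layer: int,
                           layer_input: str, task_info: str) -> Aggregator:
    """Placeholder for DynamicRoutingSelect(F_l, l, I_l, I).

    Assumption: a simple keyword rule stands in for the real selector,
    which would also make use of the layer index and the layer input.
    """
    if "answer" in task_info.lower():
        return candidates["majority_vote"]
    return candidates["concat"]


def forward(layers: List[List[Agent]], candidates: Dict[str, Aggregator],
            task_input: str, task_info: str) -> str:
    """Pass the input through each layer, aggregating the team's outputs."""
    x = task_input
    for idx, team in enumerate(layers):
        aggregate = dynamic_routing_select(candidates, idx, x, task_info)
        x = aggregate([agent(x) for agent in team])
    return x
```

With three toy agents in a single layer, a task description containing "answer" routes to majority voting, while any other description falls back to concatenation. In a real system each agent would wrap an LLM call with its own role prompt.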

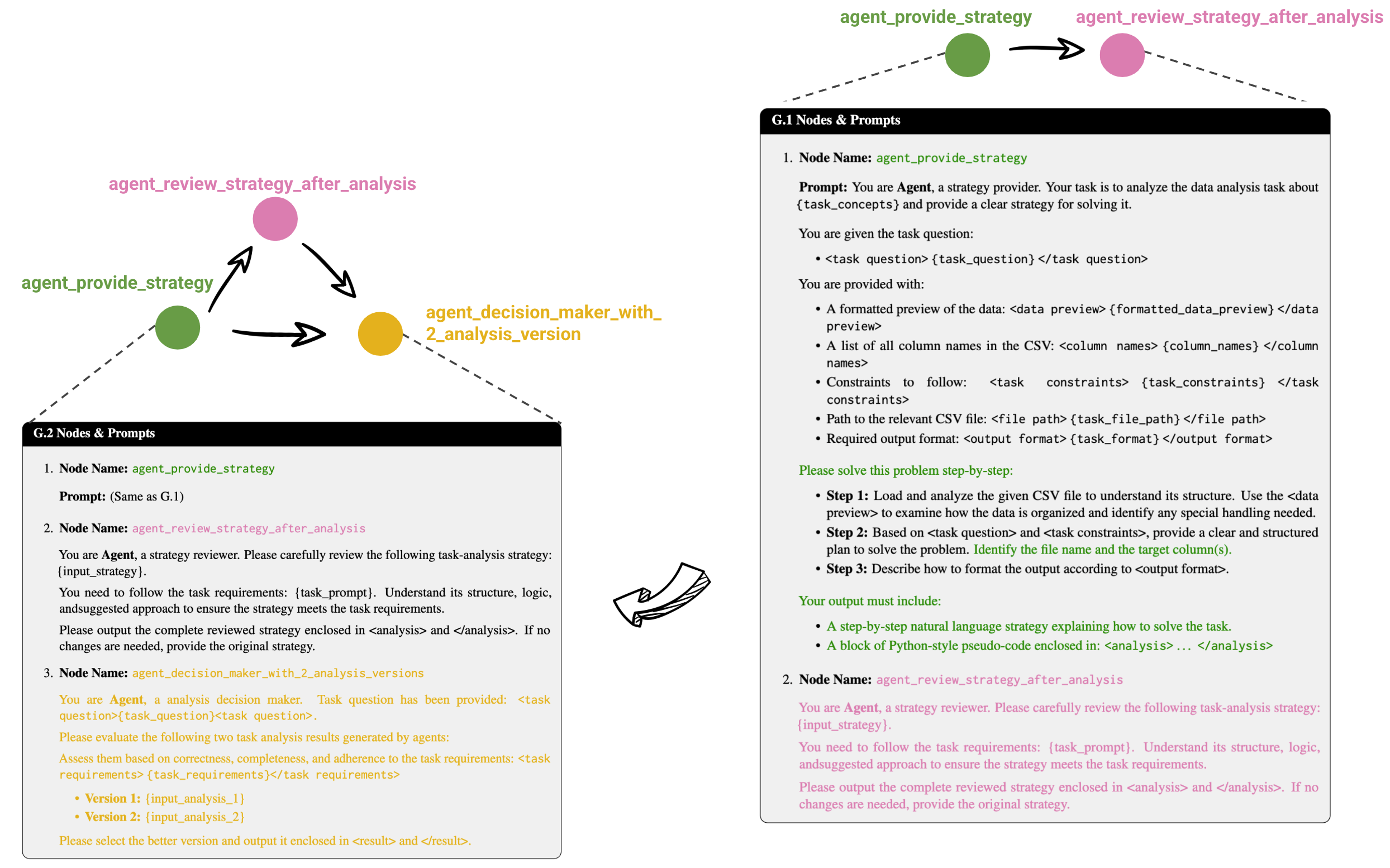

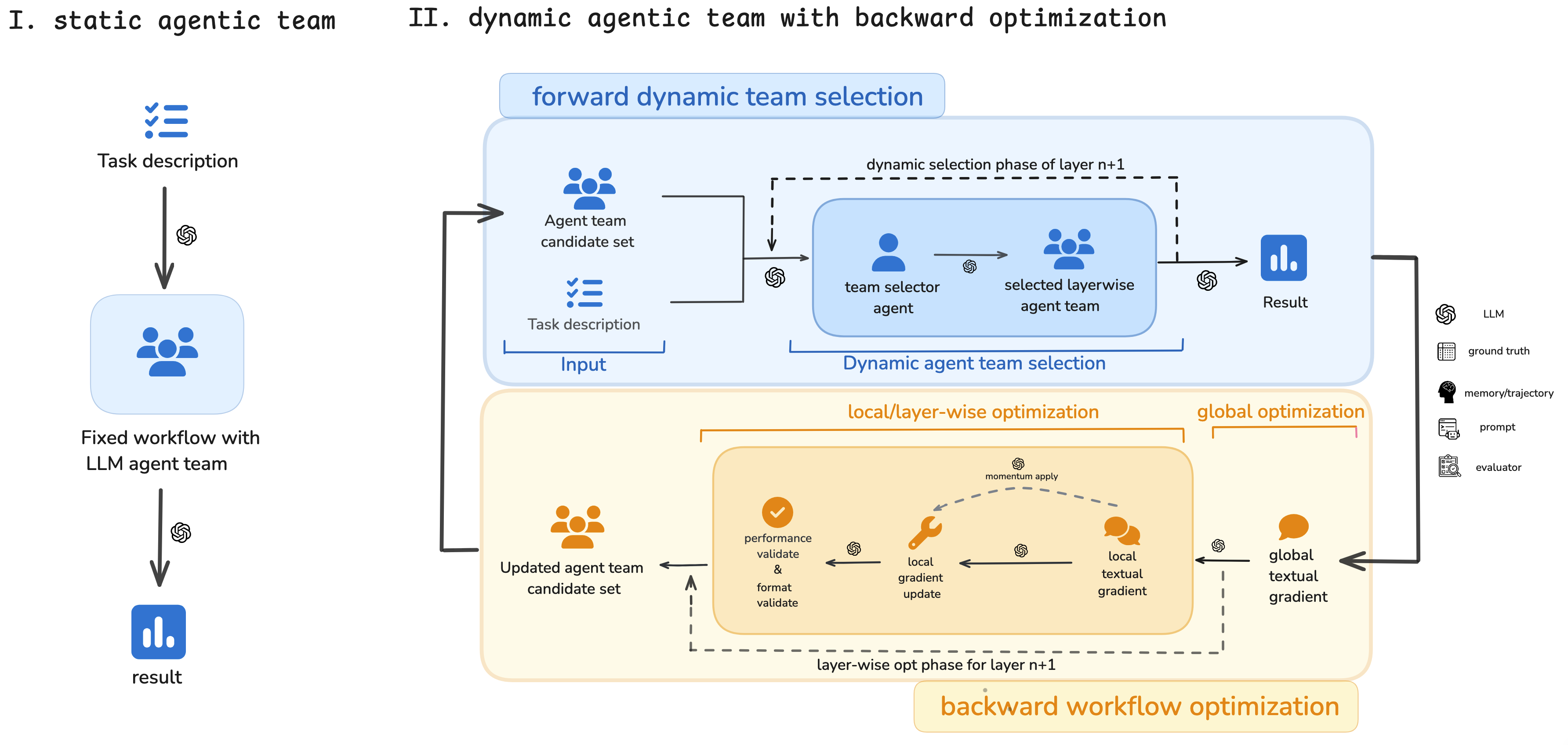

Figure 1: Comparison of static and dynamic agentic teams, illustrating the adaptability of the ANN framework.

Backward Optimization

If the predefined performance thresholds are not met after the forward pass, the backward optimization phase is triggered to refine agent interactions and aggregation functions at both global (system-wide) and local (layer-specific) levels.

- Global Optimization: Analyzes inter-layer coordination, refining interconnections and data flow to improve overall system performance. The global gradient is computed as:

$$G_{\text{global}} = \text{ComputeGlobalGradient}(S, \tau),$$

where $S$ represents the global workflow and $\tau$ denotes the execution trajectory.

- Local Optimization: Fine-tunes agents and aggregation functions within each layer, adjusting their parameters based on detailed performance feedback. The local gradient for each layer is computed as:

$$G_{\text{local},\ell}^{t} = \beta\, G_{\text{global}} + (1-\beta) \times \text{ComputeLocalGradient}(\ell, f_\ell, \tau),$$

where $\beta$ is a weighting factor that balances the influence of global optimization and layer-specific gradients.
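Because the gradients in this framework are textual rather than numeric, the weighting by β cannot be a literal multiplication. The sketch below shows one possible reading, offered purely as an assumption: each gradient is a list of critique strings, and β sets the share of a fixed feedback budget taken from the global critiques. The function name `blend_feedback` and the `budget` parameter are hypothetical and do not come from the paper.

```python
from typing import List


def blend_feedback(global_fb: List[str], local_fb: List[str],
                   beta: float, budget: int = 4) -> List[str]:
    """Mix global and layer-specific critiques under a fixed budget.

    Assumption: beta is interpreted as the fraction of the budget given
    to global feedback; the remainder goes to local feedback.
    """
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    n_global = round(beta * budget)
    n_local = budget - n_global
    return global_fb[:n_global] + local_fb[:n_local]
```

Setting β to 1 passes only system-wide critiques to a layer, and setting it to 0 passes only that layer's own critiques. Intermediate values give the layer a mix of both signals.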

To improve stability, ANN employs momentum-based optimization.
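The overall control flow of this section, a forward pass followed by backward refinement only when a performance threshold is missed, can be sketched as a small loop. This is a sketch under stated assumptions: the callables `run_forward`, `evaluate`, and `run_backward`, the default `threshold`, and the `max_rounds` cap are all hypothetical, and the momentum mechanism is not modeled because its form is not described here.

```python
from typing import Callable, Tuple


def optimize(prompt: str,
             run_forward: Callable[[str], str],
             evaluate: Callable[[str], float],
             run_backward: Callable[[str, str], str],
             threshold: float = 0.8,
             max_rounds: int = 5) -> Tuple[str, float, int]:
    """Alternate forward and backward phases until the score meets the threshold.

    Returns the final prompt, its score, and the number of backward rounds used.
    """
    output = run_forward(prompt)
    score = evaluate(output)
    rounds = 0
    while score < threshold and rounds < max_rounds:
        # Backward phase: refine the prompt using the latest output as feedback.
        prompt = run_backward(prompt, output)
        output = run_forward(prompt)
        score = evaluate(output)
        rounds += 1
    return prompt, score, rounds
```

The `max_rounds` cap is a design choice added here so that the loop terminates even if refinement never reaches the threshold.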

Experimental Validation

The ANN framework was evaluated on four challenging datasets: MATH (mathematical reasoning), DABench (data analysis), Creative Writing, and HumanEval (code generation). The experimental results indicate that ANN simplifies MAS design by automating prompt tuning, role assignment, and agent collaboration, while outperforming existing baselines in accuracy. For instance, on HumanEval, ANN achieved 72.7% with GPT-3.5 and 87.8% with GPT-4.

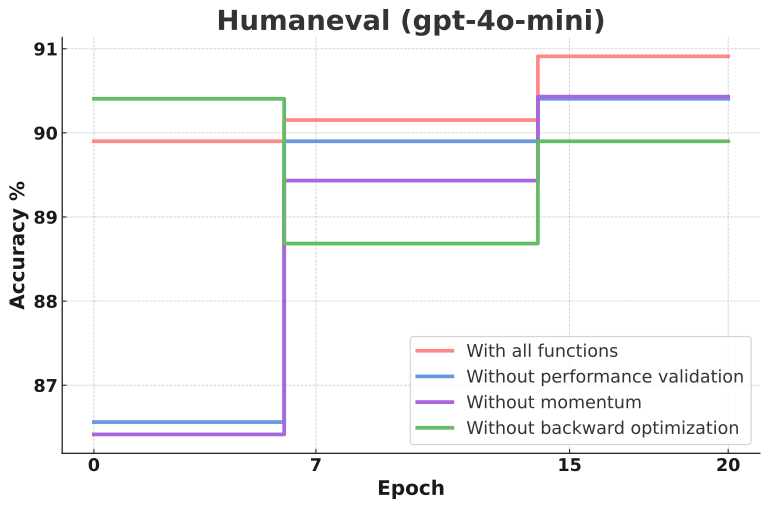

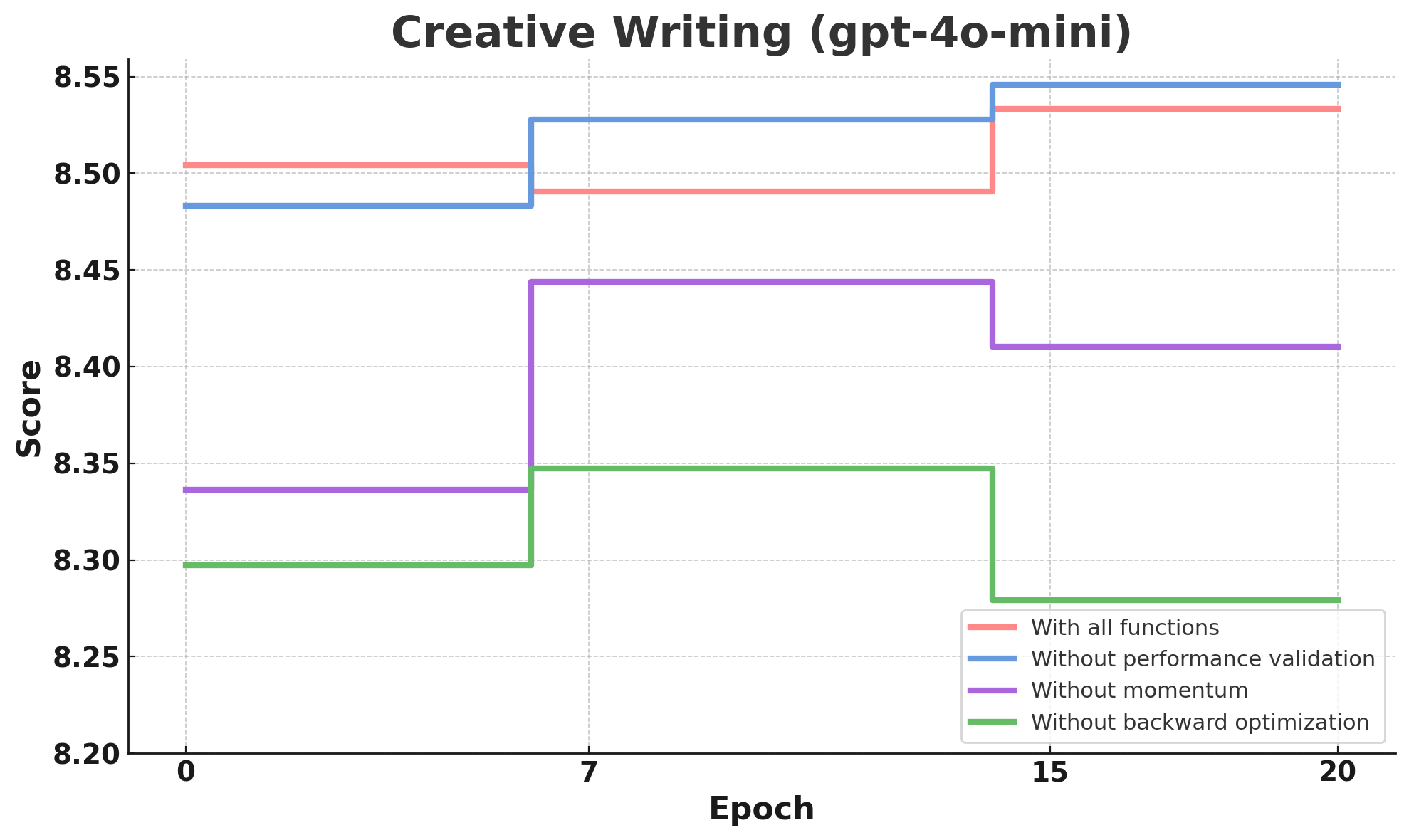

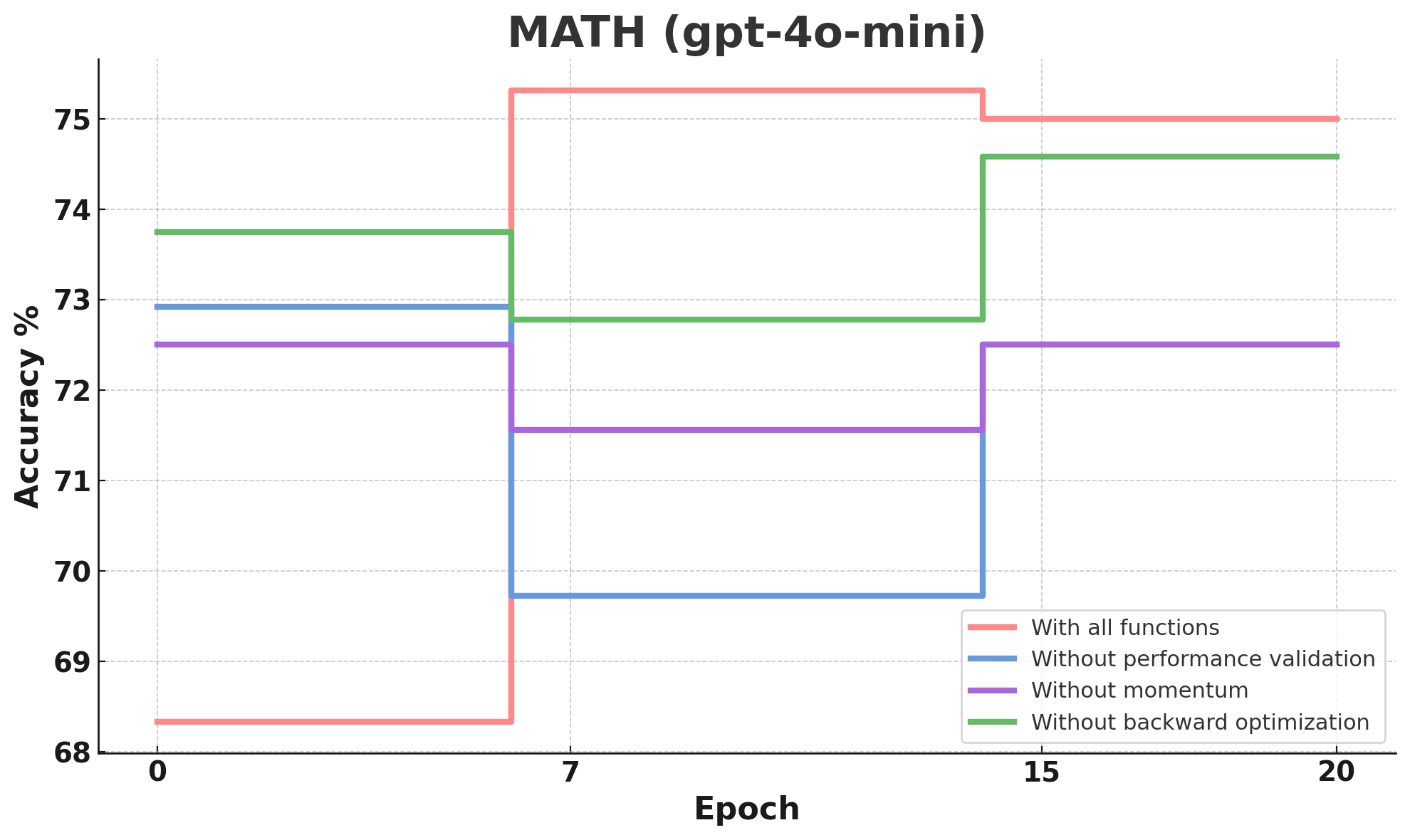

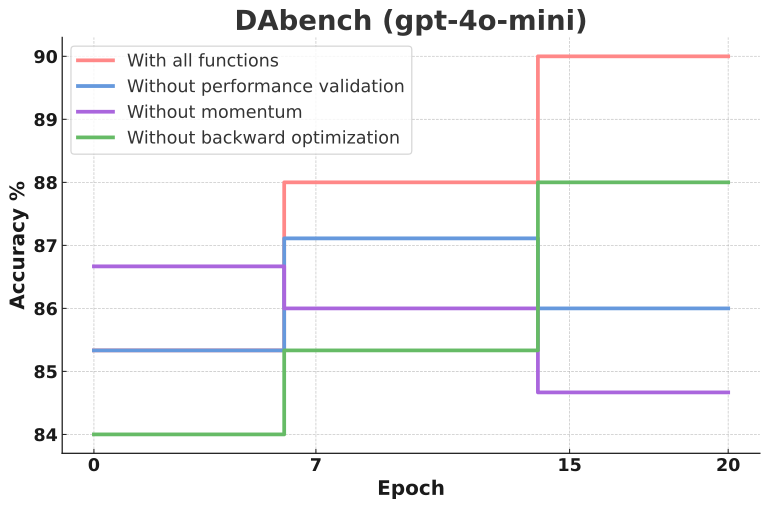

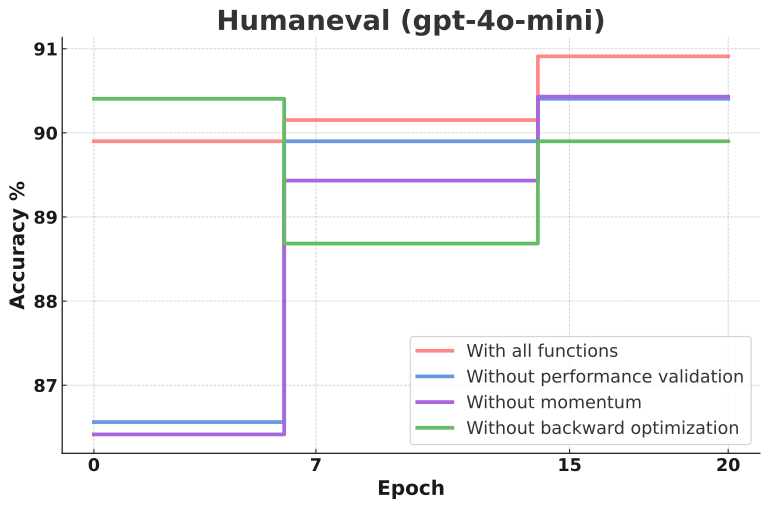

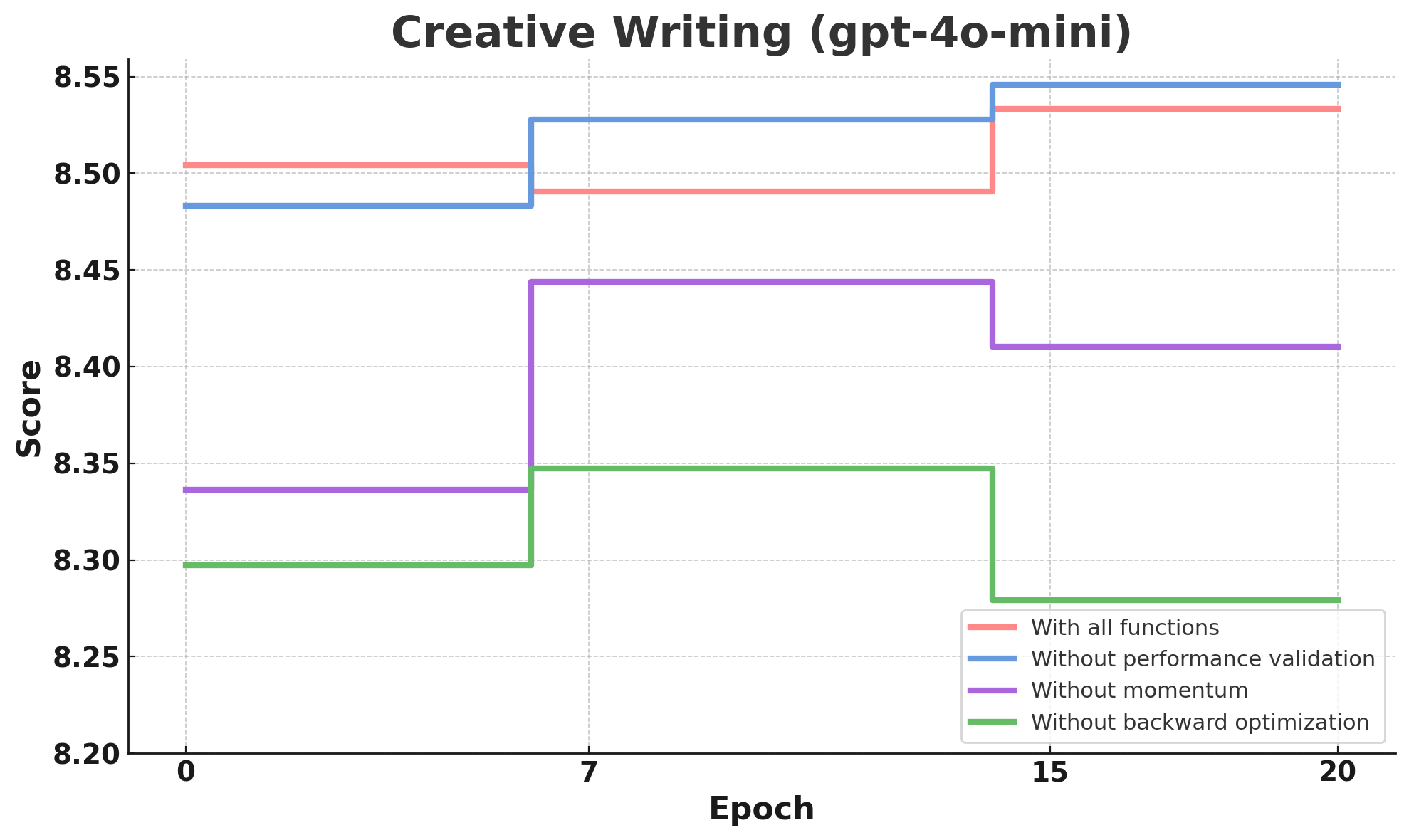

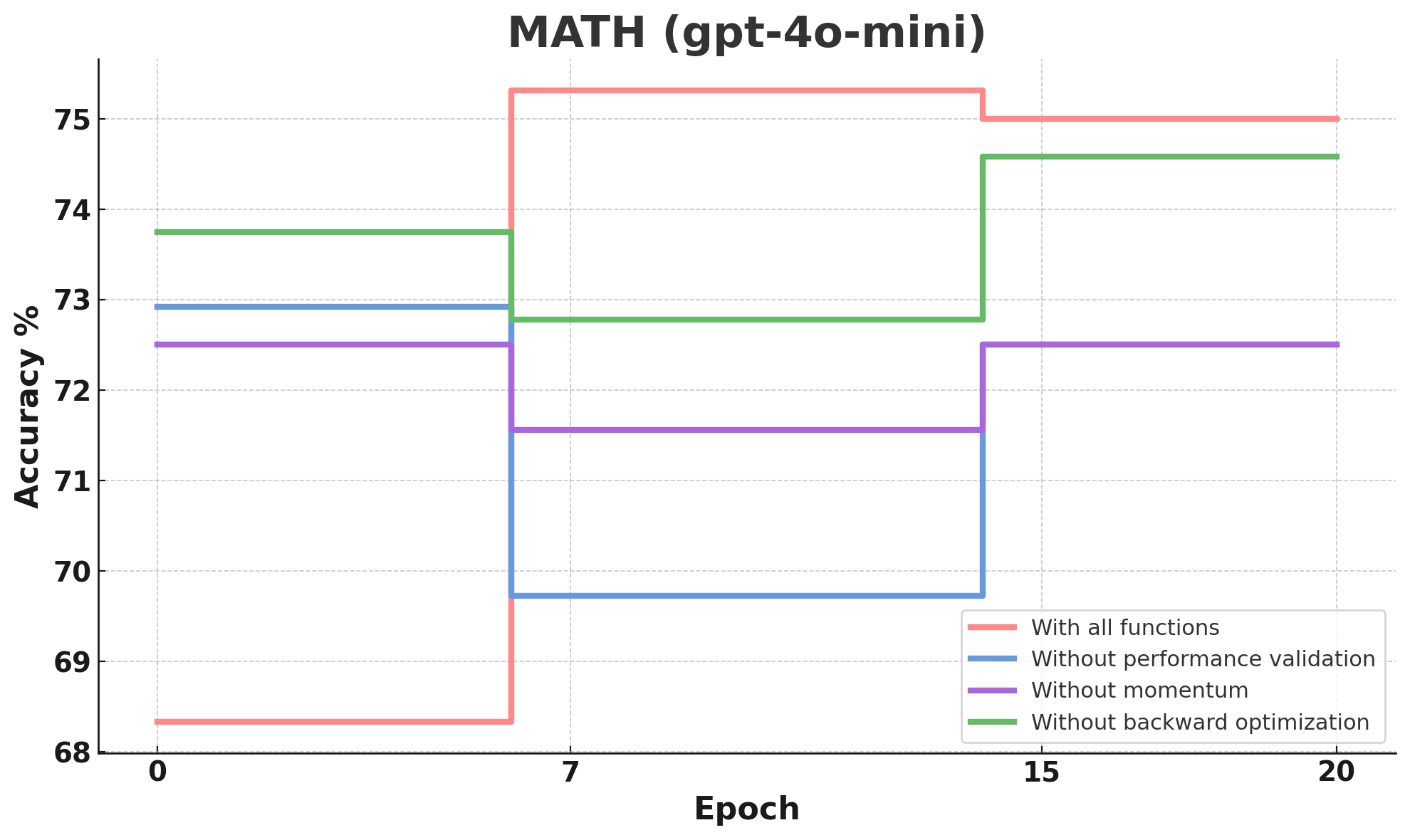

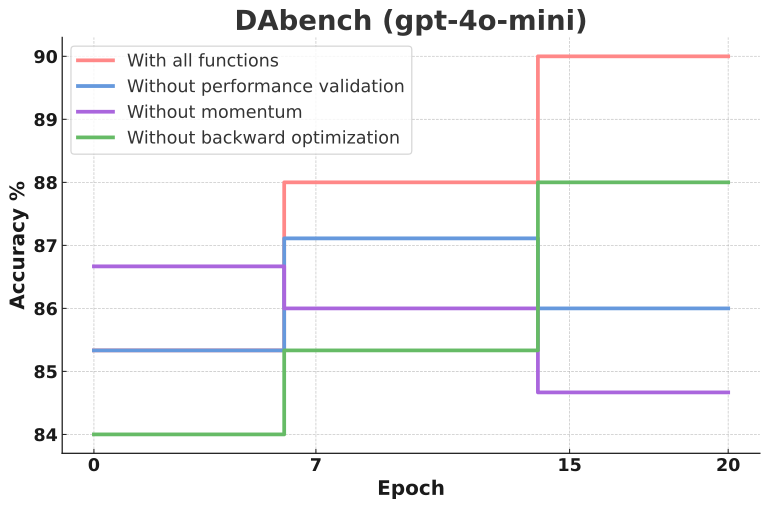

Figure 2: Ablation study results on HumanEval, Creative Writing, MATH, and DABench, demonstrating the impact of various components of the ANN framework.

The paper also presents ablation studies to demonstrate the contribution of each component of the ANN framework. The ablation study compares four variants: the full ANN approach, a variant without momentum-based optimization, a variant without validation-based performance checks, and a variant without backward optimization. The results indicate that each component contributes significantly to performance, and combining them yields the most reliable and robust improvements.

Implications and Future Directions

The ANN framework shifts multi-agent system design from static, manually designed architectures toward data-driven, automated approaches. Its self-evolving capabilities, which dynamically reconfigure agent teams and coordination strategies, offer a promising direction for creating more robust and flexible multi-agent systems.

Future work may focus on automating the generation of initial layouts from accumulated agent experience using meta-prompt learning, integrating advanced pruning techniques to enhance efficiency, introducing a dynamic role adjustment mechanism, and integrating multi-agent fine-tuning with global and local tuning of the multi-agentic workflow.

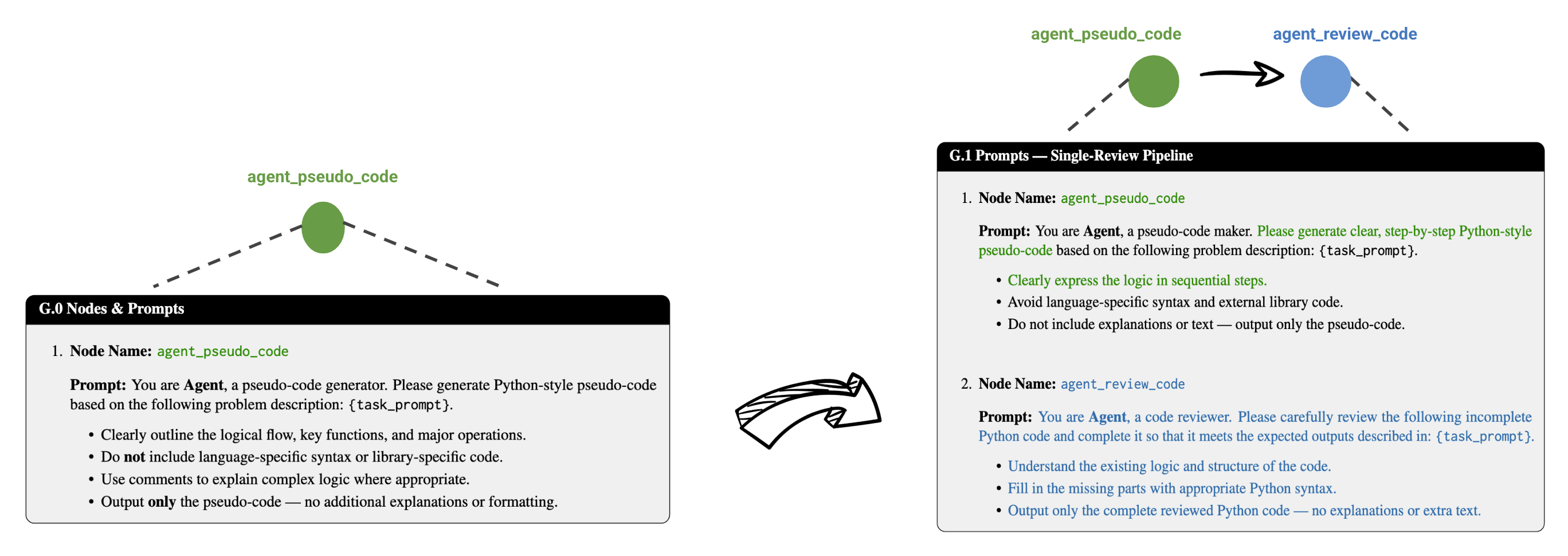

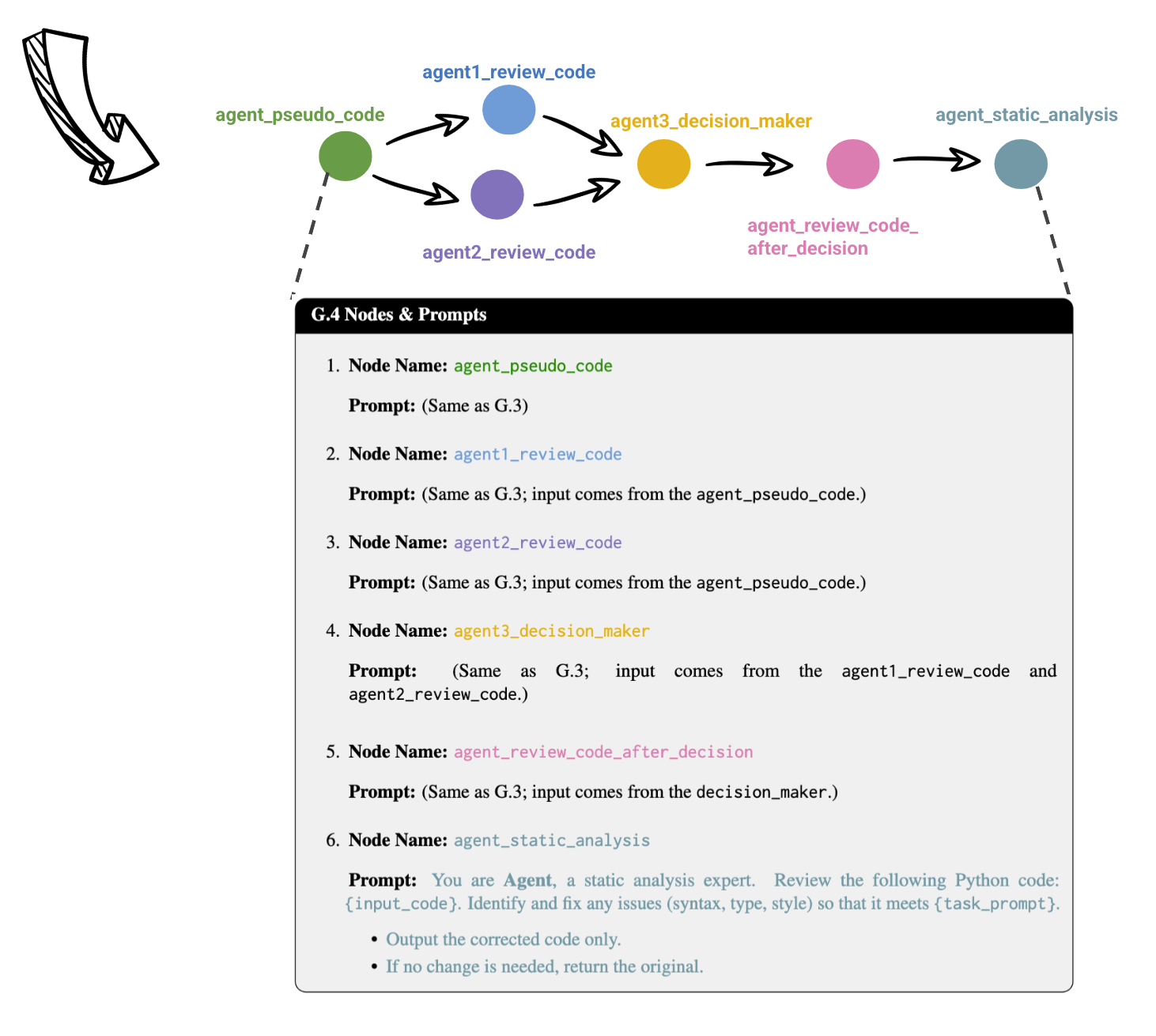

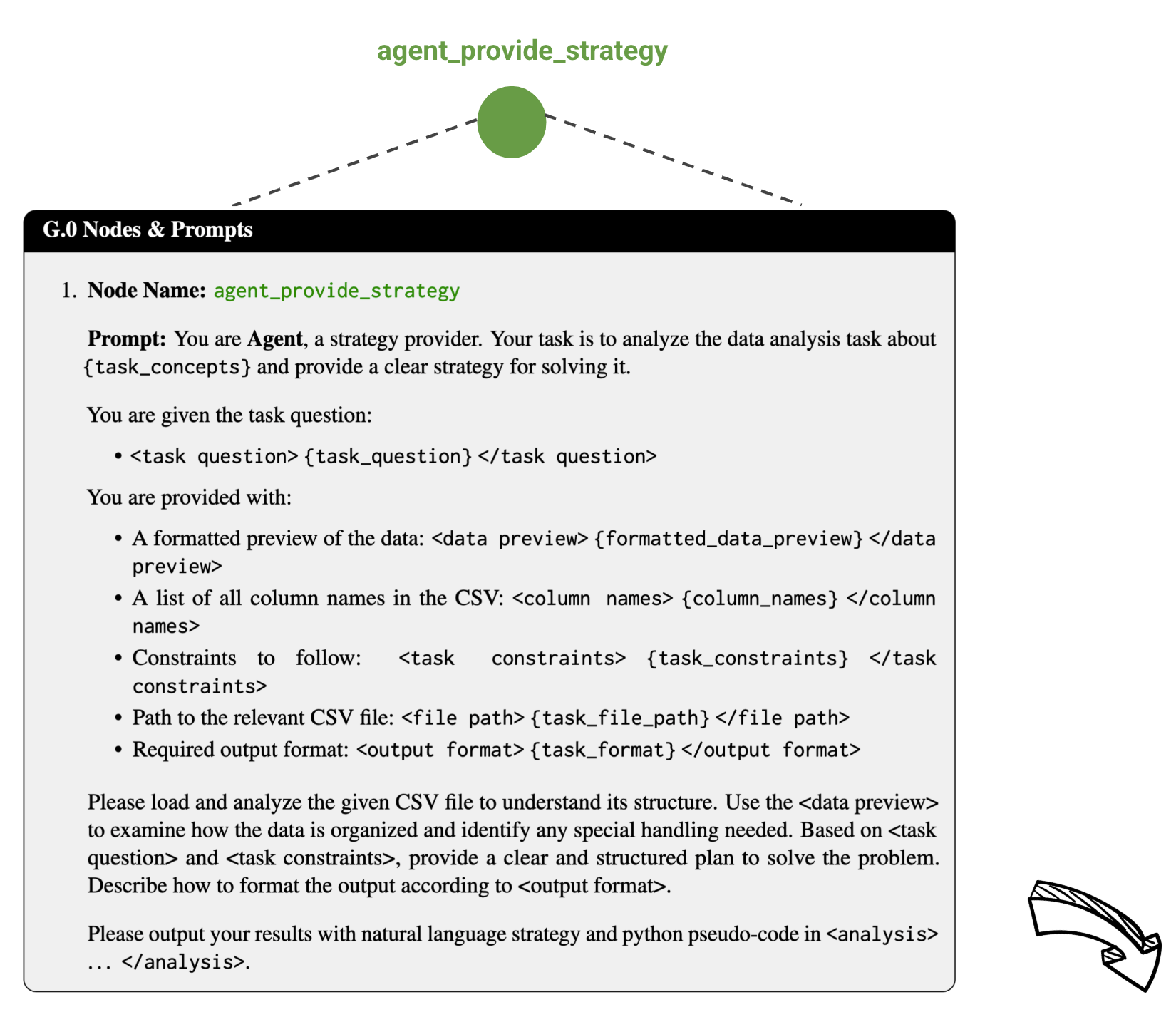

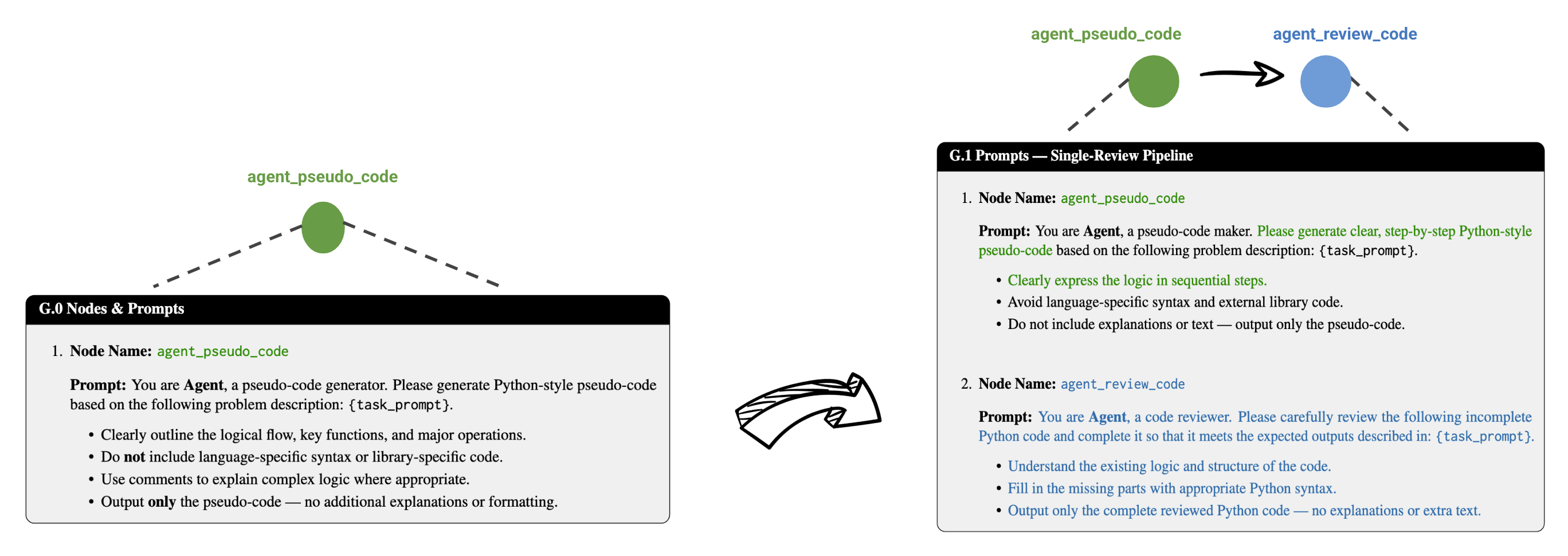

Figure 3: Prompt-evolution trajectory for the HumanEval dataset.

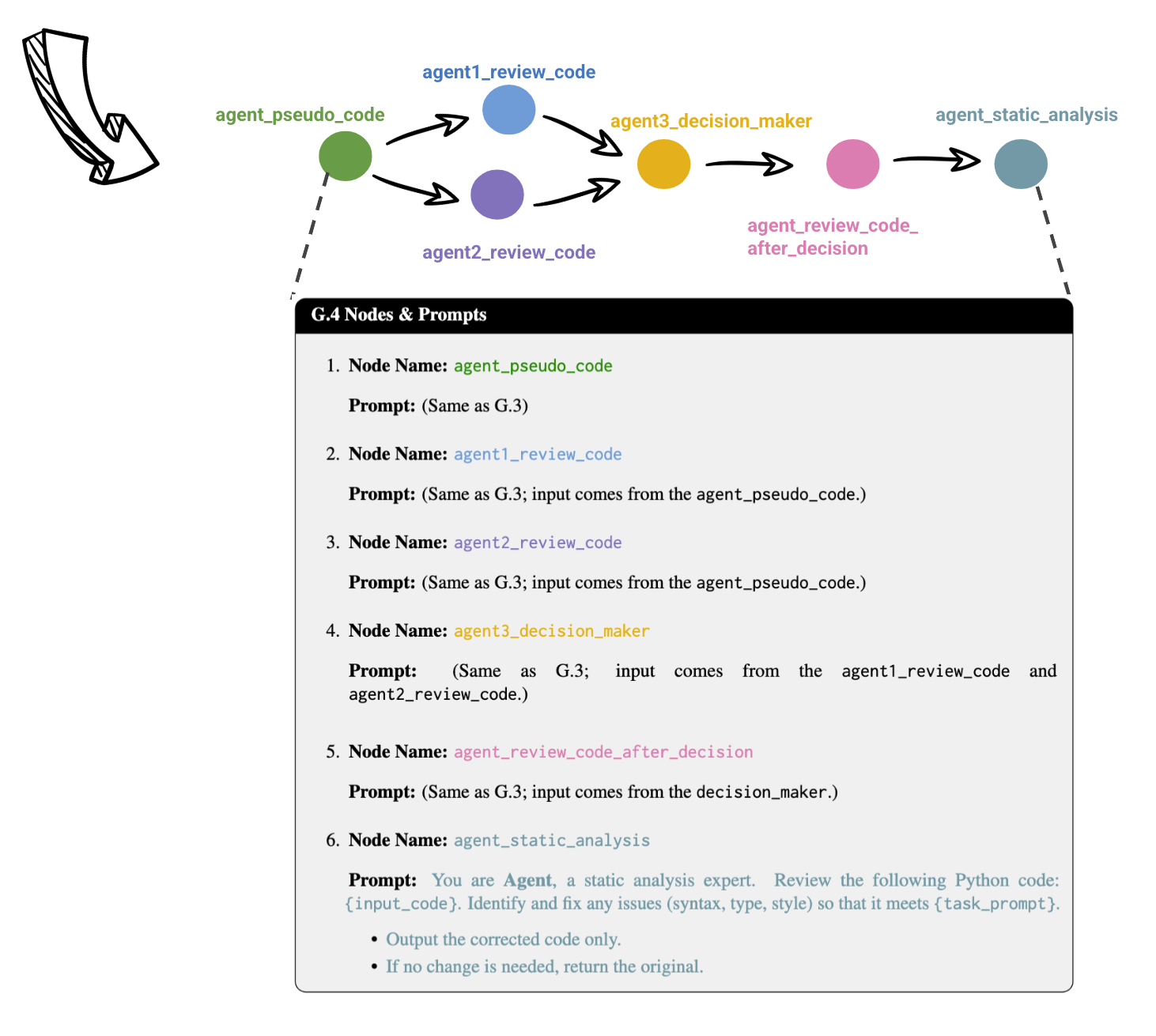

Figure 4: Prompt-evolution trajectory for the DABench dataset.

Conclusion

The Agentic Neural Network (ANN) presents a novel approach to multi-agent systems by integrating neural network principles with LLMs. The framework's dynamic agent team formation, two-phase optimization pipeline, and self-evolving capabilities demonstrate its potential for orchestrating complex multi-agent workflows. The ANN framework effectively combines symbolic coordination with connectionist optimization, paving the way for fully automated and self-evolving multi-agent systems.