1. Introduction

Large language models (LLMs) have revolutionized natural language processing with remarkable capabilities in text generation, translation, and summarization. However, their deep architectures and vast parameter counts impose substantial costs in memory, latency, and energy consumption. These concerns are particularly acute in resource-constrained environments such as mobile and edge devices. In response, the research community has increasingly turned to quantization techniques that reduce numerical precision while striving to preserve model performance. Among these, ternary quantization, which restricts weights to the values -1, 0, and +1, has emerged as a promising approach because it offers drastic memory savings and simpler arithmetic.

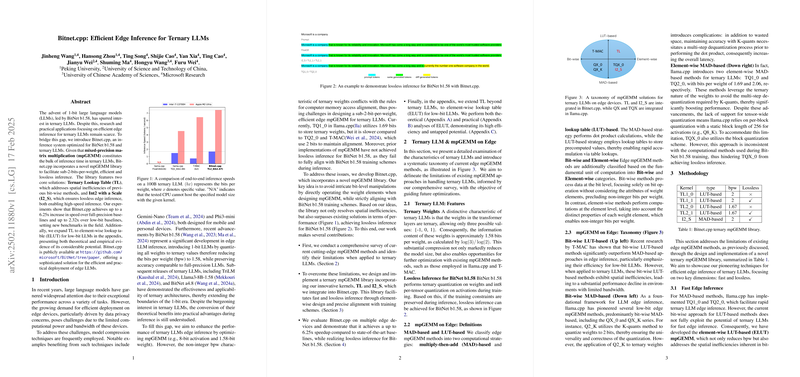

Bitnet.cpp is an inference system that combines algorithmic and hardware-level optimizations to run ternary LLMs efficiently on edge devices. By streamlining the conversion of full-precision models into their ternary counterparts, Bitnet.cpp addresses both the computational and the practical challenges of deploying large-scale models beyond data centers.

2. Background on Ternary LLMs

Traditionally, LLMs have relied on full-precision (typically 32-bit floating-point) representations to capture intricate language patterns, but the computational overhead of such models motivates the exploration of weight quantization. Ternary quantization limits each weight to the discrete set $\{-1, 0, +1\}$, which not only reduces the memory footprint but also allows multiplications to be replaced with simpler additions and subtractions. Formally, the ternary quantization function is defined by

$$
Q(w) = \begin{cases} +1, & w > \Delta \\ 0, & |w| \le \Delta \\ -1, & w < -\Delta \end{cases}
$$

where $w$ is an individual weight and $\Delta > 0$ is the threshold that delineates the quantization boundaries.
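As a minimal illustration, the Python sketch below applies the threshold rule element-wise and then computes a dot product with the resulting ternary weights using only additions and subtractions. The function names `ternarize` and `ternary_dot` and the threshold value `delta = 0.1` are hypothetical choices for this example, not part of Bitnet.cpp.

```python
from typing import List, Sequence


def ternarize(weights: Sequence[float], delta: float) -> List[int]:
    """Map each weight to -1, 0 or +1 using a symmetric threshold delta."""
    out = []
    for w in weights:
        if w > delta:
            out.append(1)
        elif w < -delta:
            out.append(-1)
        else:  # |w| <= delta falls into the zero bucket
            out.append(0)
    return out


def ternary_dot(x: Sequence[float], t: Sequence[int]) -> float:
    """Dot product with ternary weights using only additions and subtractions."""
    acc = 0.0
    for xi, ti in zip(x, t):
        if ti == 1:
            acc += xi
        elif ti == -1:
            acc -= xi
        # ti == 0 contributes nothing, so the term is skipped entirely
    return acc


if __name__ == "__main__":
    w = [0.9, -0.05, -0.7, 0.2, 0.01]
    t = ternarize(w, delta=0.1)
    print(t)  # [1, 0, -1, 1, 0]
    x = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(ternary_dot(x, t))  # 2.0  (1.0 - 3.0 + 4.0)
```

Zero weights skip their term entirely, which is where the sparsity of ternary models turns directly into saved work.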

Compared with binary quantization, where weights are restricted to two values (typically $\{-1, +1\}$), the zero state in ternary networks provides a crucial extra degree of freedom. It lets small weights be set to exactly zero, introducing sparsity that can improve generalization, and it helps preserve representational capacity. Historically, quantization research began with binary networks and has progressively moved toward approaches, such as ternary quantization, that retain more of the accuracy of full-precision models while still sharply reducing computational demands.
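A small numerical sketch makes the benefit of the zero state concrete. It compares a binary approximation (a scale times the sign of each weight) with a ternary one on the same weights, using the least-squares scale for each: the mean of |w| over all weights for binary, and the mean of |w| over the weights above the threshold for ternary, as in TWNs. The weight values and the threshold `delta = 0.1` are arbitrary assumptions chosen for illustration.

```python
from typing import List, Sequence


def binary_approx(w: Sequence[float]) -> List[float]:
    """Binary: alpha * sign(w), with alpha = mean(|w|)."""
    alpha = sum(abs(v) for v in w) / len(w)
    return [alpha if v >= 0 else -alpha for v in w]


def ternary_approx(w: Sequence[float], delta: float) -> List[float]:
    """Ternary: alpha * Q(w), with alpha = mean |w| over weights above the threshold."""
    kept = [abs(v) for v in w if abs(v) > delta]
    alpha = sum(kept) / len(kept) if kept else 0.0
    return [alpha if v > delta else -alpha if v < -delta else 0.0 for v in w]


def sq_error(w: Sequence[float], approx: Sequence[float]) -> float:
    """Sum of squared reconstruction errors."""
    return sum((a - b) ** 2 for a, b in zip(w, approx))


if __name__ == "__main__":
    w = [0.9, -0.05, -0.8, 0.02]
    b, t = binary_approx(w), ternary_approx(w, delta=0.1)
    print(sq_error(w, b), sq_error(w, t))
    print(sum(v == 0.0 for v in t) / len(t))  # fraction of exact zeros
```

On these four weights the ternary version reconstructs with a squared error of about 0.008 versus about 0.67 for binary, and half of its entries are exact zeros.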

3. Key Technologies and Methodologies

Bitnet.cpp is engineered to reconcile the theoretical benefits of ternary quantization with practical system performance. The following subsections describe the inference system at its core, the hardware optimizations for low-bit-width processing that it relies on, and the training methodologies behind ternary weight networks.

3.1 Bitnet.cpp Inference System

At the core of Bitnet.cpp is a modular inference system that separates data handling, computation, and control flow. This separation is critical for maximizing speed and resource efficiency in low-bit environments. A central innovation is the mixed-precision matrix multiplication library (mpGEMM), which executes operations of the form

$$
Y = X W
$$

where $X$ is an input matrix stored at lower precision, $W$ is the ternary weight matrix, and the output $Y$ is accumulated at higher precision. This design allows inference to preserve the accuracy of the ternary model despite the low-precision operands (Wang et al., 17 Feb 2025). Further computational gains come from specialized quantization methods: the Ternary Lookup Table (TL), which replaces multiplications with table lookups, and Int2 With Scale (I2_S), which stores weights as 2-bit integers and applies a scaling factor to retain the necessary precision.
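The two kernel ideas can be sketched in Python under simplifying assumptions. Weights are ternarized with a single per-tensor absmean scale (the scheme described for BitNet b1.58, assumed here rather than taken from Bitnet.cpp), packed I2_S-style at 2 bits per weight, and multiplied TL-style by looking up precomputed sums for pairs of activations. The 2-bit code layout, the pair grouping, and all function names are hypothetical; the real Bitnet.cpp kernels are vectorized native code and differ in detail.

```python
from typing import List, Sequence, Tuple


def absmean_ternarize(w: Sequence[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Ternarize with one per-tensor scale s = mean(|w|): t = clip(round(w / s), -1, 1)."""
    s = sum(abs(v) for v in w) / len(w) + eps
    t = [max(-1, min(1, round(v / s))) for v in w]
    return t, s


def pack_2bit(t: Sequence[int]) -> bytes:
    """I2_S-style storage (assumed layout): code = t + 1 in 2 bits, four weights per byte."""
    out = bytearray((len(t) + 3) // 4)
    for i, v in enumerate(t):
        out[i // 4] |= (v + 1) << (2 * (i % 4))
    return bytes(out)


def unpack_2bit(packed: bytes, n: int) -> List[int]:
    """Inverse of pack_2bit: recover n ternary values."""
    return [((packed[i // 4] >> (2 * (i % 4))) & 0b11) - 1 for i in range(n)]


def lut_matvec(W: Sequence[Sequence[int]], x: Sequence[int]) -> List[int]:
    """TL-style matrix-vector product: weight pairs index precomputed activation sums."""
    k = len(x)
    assert k % 2 == 0, "this sketch assumes an even number of input features"
    # One 9-entry table per activation pair, built once and shared by every weight row.
    tables = [
        [a * x[j] + b * x[j + 1] for a in (-1, 0, 1) for b in (-1, 0, 1)]
        for j in range(0, k, 2)
    ]
    out = []
    for row in W:
        acc = 0  # wide accumulator (int32 in a C kernel; Python ints never overflow)
        for p, j in enumerate(range(0, k, 2)):
            index = (row[j] + 1) * 3 + (row[j + 1] + 1)  # base-3 code of the weight pair
            acc += tables[p][index]  # one lookup replaces two multiply-adds
        out.append(acc)
    return out


if __name__ == "__main__":
    w = [0.8, -0.1, -0.9, 0.4, 0.05, -0.6, 0.7, 0.0]
    t, s = absmean_ternarize(w)
    packed = pack_2bit(t)
    print(t, len(packed), "bytes")  # 8 weights fit in 2 bytes
    x = [3, -2, 5, 1, 4, -1, 2, 6]  # int8-range activations
    W = [unpack_2bit(packed, len(t)), [0, 1, 1, -1, -1, 0, 0, 1]]
    y_int = lut_matvec(W, x)
    y = [s * v for v in y_int]  # rescale the integer accumulator by the weight scale
    print(y_int, y)
```

Because every weight row reuses the same nine-entry tables, the cost of building them is amortized over the whole matrix, which is the intuition behind trading multiply-accumulates for lookups.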

3.2 Hardware Optimizations for Low-Bit-Width Processing

Modern hardware advances are integral to deploying low-bit-width models efficiently. The Bitnet framework targets operations at a precision as low as 1.58 bits per weight, which is the information content of a three-valued weight ($\log_2 3 \approx 1.58$). Customized arithmetic units, optimized memory hierarchies, and tailored mixed-precision techniques reduce power consumption and latency during inference. These developments pave the way for specialized hardware accelerators that prioritize efficiency over maximal numerical precision, enabling scalable and energy-efficient deployment of LLMs (Ma et al., 27 Feb 2024).
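The figure of 1.58 bits comes from log2(3), and a quick calculation shows what it means for weight storage. The sketch assumes a hypothetical 7-billion-parameter model and counts weights only (no embeddings kept at higher precision, activations, or KV cache); the helper name `weight_storage_gb` is illustrative.

```python
import math

# Information content of one weight drawn from {-1, 0, +1}: log2(3) ~ 1.585 bits.
BITS_TERNARY = math.log2(3)


def weight_storage_gb(num_params: float, bits_per_weight: float) -> float:
    """Storage for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    n = 7e9  # hypothetical 7B-parameter model
    formats = [("FP16", 16), ("INT8", 8), ("2-bit packed ternary", 2), ("ideal ternary", BITS_TERNARY)]
    for label, bits in formats:
        print(f"{label:>22}: {weight_storage_gb(n, bits):.2f} GB")
```

It prints 14.00 GB for FP16, 7.00 GB for INT8, 1.75 GB for 2-bit packing, and 1.39 GB at the log2(3) limit, which shows why packing formats that approach 1.58 bits matter on memory-bound edge devices.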

3.3 Ternary Weight Networks and Training Methodologies

Ternary Weight Networks (TWNs) restrict parameters to the set $\{-1, 0, +1\}$ (typically multiplied by a layer-wise scaling factor), dramatically reducing memory and computational requirements. Training TWNs requires rethinking weight optimization: standard gradient descent must be adapted to the ternary quantization function defined in Section 2, which is piecewise constant and therefore has zero gradient almost everywhere. Although this discreteness creates challenges for convergence and stability, carefully designed optimization strategies have shown that performance degradation can be kept small while achieving substantial efficiency gains. These methodologies have been applied not only to LLMs but also in domains such as computer vision and speech processing (Li et al., 2016).
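A common way to adapt gradient descent to the ternary quantizer is the straight-through estimator (STE): the forward pass uses ternarized weights, while the backward pass treats the quantizer as the identity and updates latent full-precision weights. The toy least-squares problem below is a minimal sketch of that idea, assuming a fixed threshold of 0.5, no scaling factor, and made-up data generated by the ternary weights [1, 0, -1]; it is not the specific training recipe of TWNs or Bitnet.

```python
from typing import List, Sequence

DELTA = 0.5  # fixed ternarization threshold (illustrative assumption)


def ternarize(w: Sequence[float], delta: float = DELTA) -> List[int]:
    return [1 if v > delta else -1 if v < -delta else 0 for v in w]


def train_ste(X: Sequence[Sequence[float]], y: Sequence[float],
              steps: int = 50, lr: float = 0.2) -> List[float]:
    """Least-squares fit whose forward pass uses ternary weights, trained with the STE."""
    n, d = len(X), len(X[0])
    w = [0.0] * d  # latent full-precision weights that gradient descent updates
    for _ in range(steps):
        t = ternarize(w)  # forward pass sees only the ternary weights
        residual = [sum(xj * tj for xj, tj in zip(row, t)) - target for row, target in zip(X, y)]
        # Gradient of L = mean(residual^2) with respect to the ternary weights t.
        grad_t = [2.0 / n * sum(residual[i] * X[i][j] for i in range(n)) for j in range(d)]
        # Straight-through estimator: treat dQ/dw as 1 so dL/dw = dL/dt,
        # then clip latent weights to [-1, 1] to keep them near the quantizer's range.
        w = [max(-1.0, min(1.0, wj - lr * gj)) for wj, gj in zip(w, grad_t)]
    return w


if __name__ == "__main__":
    X = [[1, 0, 0], [0, 1, 0], [0, 0, 1], [1, 1, 1]]
    y = [1, 0, -1, 0]  # generated by the ternary weights [1, 0, -1]
    w = train_ste(X, y)
    print(w, ternarize(w))
```

On this data the latent weights drift to roughly [0.6, 0, -0.6] within a few steps, at which point the ternarized weights match the target exactly and the gradient vanishes.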

4. Comparative Analysis of Quantization Approaches

A range of quantization strategies has been proposed to balance efficiency with model fidelity. Three notable approaches are post-training quantization, batch quantization, and adaptive quantization.

Post-training quantization techniques, such as those based on Minimum Mean-Squared Error (MMSE) objectives, optimize the mapping from full-precision values to reduced numerical formats after training is complete. These methods select quantization parameters (scale and zero-point) that minimize quantization error, so that the reduced-precision model closely approximates its full-precision counterpart (Choukroun et al., 2019).
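The MMSE idea can be sketched as a grid search over clipping ranges for a symmetric 4-bit quantizer, keeping the scale with the lowest mean-squared reconstruction error. This is a simplified illustration that fixes the zero-point at 0 and uses a hypothetical grid of 100 candidate clipping values; it is not the specific algorithm of Choukroun et al.

```python
from typing import List, Sequence, Tuple


def quantize_dequantize(x: Sequence[float], scale: float, bits: int) -> List[float]:
    """Symmetric uniform quantizer (zero-point fixed at 0), returned in real units."""
    qmax = 2 ** (bits - 1) - 1  # e.g. 7 for signed 4-bit
    # Python's round() rounds halves to even; values beyond the range are clipped.
    return [max(-qmax, min(qmax, round(v / scale))) * scale for v in x]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


def mmse_scale(x: Sequence[float], bits: int = 4, num_candidates: int = 100) -> Tuple[float, float]:
    """Grid-search the clipping range whose scale minimizes the quantization MSE."""
    qmax = 2 ** (bits - 1) - 1
    max_abs = max(abs(v) for v in x)
    if max_abs == 0:
        return 1.0, 0.0  # all-zero input: any scale is exact
    best_scale, best_err = max_abs / qmax, float("inf")
    for i in range(1, num_candidates + 1):
        scale = max_abs * i / num_candidates / qmax  # clip at a fraction of max |x|
        err = mse(x, quantize_dequantize(x, scale, bits))
        if err < best_err:
            best_scale, best_err = scale, err
    return best_scale, best_err


if __name__ == "__main__":
    x = [0.02, -0.4, 0.3, -0.1, 0.25, 0.05, -0.2, 3.5]  # one large outlier
    naive = mse(x, quantize_dequantize(x, max(abs(v) for v in x) / 7, 4))
    scale, err = mmse_scale(x)
    print(f"abs-max MSE: {naive:.5f}, MMSE scale {scale:.4f} with MSE: {err:.5f}")
```

The largest candidate corresponds (up to floating-point rounding) to plain abs-max scaling, so the search does no worse than the naive scale; on heavy-tailed data it can accept some clipping error in exchange for finer resolution on the bulk of the values.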

Batch quantization extends these ideas by considering groups of parameters or activations simultaneously. This coordinated approach simplifies memory access patterns and exploits the parallelism inherent in modern accelerators, leading to notable improvements in inference throughput and energy efficiency (Liu et al., 2023).
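One concrete reading of quantizing parameters in groups is to split a weight vector into fixed-size groups that each get their own scale. The sketch below assumes symmetric 4-bit codes and an abs-max scale per group; the group sizes and function names are illustrative choices, not taken from Liu et al.

```python
from typing import List, Sequence, Tuple


def quantize_groups(w: Sequence[float], group_size: int, bits: int = 4) -> Tuple[List[int], List[float]]:
    """Quantize consecutive groups of weights, each with its own abs-max scale."""
    qmax = 2 ** (bits - 1) - 1
    codes: List[int] = []
    scales: List[float] = []
    for start in range(0, len(w), group_size):
        group = w[start:start + group_size]
        scale = max(abs(v) for v in group) / qmax or 1.0  # all-zero group: any scale works
        scales.append(scale)
        codes.extend(max(-qmax, min(qmax, round(v / scale))) for v in group)
    return codes, scales


def dequantize_groups(codes: Sequence[int], scales: Sequence[float], group_size: int) -> List[float]:
    """Multiply each code by the scale of the group it belongs to."""
    return [q * scales[i // group_size] for i, q in enumerate(codes)]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((u - v) ** 2 for u, v in zip(a, b)) / len(a)


if __name__ == "__main__":
    w = [0.01, -0.02, 0.03, 0.04, 5.0, -3.0, 1.0, 2.0]
    for g in (8, 4):  # g = 8 is a single per-tensor scale; g = 4 gives two groups
        codes, scales = quantize_groups(w, g)
        print(g, scales, mse(w, dequantize_groups(codes, scales, g)))
```

With one group of eight, the four small weights all collapse to zero; with groups of four, they get their own fine-grained scale and are reconstructed almost exactly, at the cost of storing one extra scale.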

Adaptive quantization methods refine these strategies further by dynamically adjusting bit-widths and quantization thresholds based on activation statistics and model sensitivity. QUIK, for example, uses a hybrid scheme that combines static settings with on-the-fly adaptation: most weights and activations are quantized to 4 bits, while a small number of outlier feature columns are kept in higher precision, maintaining accuracy while aggressively reducing computational load. This flexibility is particularly advantageous in heterogeneous settings where different layers exhibit varied sensitivity to quantization noise (Ashkboos et al., 2023).
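The outlier-aware part of such hybrid schemes can be sketched as follows: the few input columns with the largest magnitudes stay in full precision, and everything else shares one 4-bit scale. This is a simplified illustration under assumed choices (a single shared scale, outliers ranked by maximum absolute value, hypothetical function names), not the QUIK implementation.

```python
from typing import List, Sequence, Set


def outlier_columns(X: Sequence[Sequence[float]], k: int) -> Set[int]:
    """Return the k columns (input features) with the largest absolute values."""
    ranked = sorted(range(len(X[0])), key=lambda j: max(abs(row[j]) for row in X), reverse=True)
    return set(ranked[:k])


def hybrid_quantize(X: Sequence[Sequence[float]], k: int, bits: int = 4) -> List[List[float]]:
    """Keep k outlier columns in full precision; quantize the rest with one shared scale."""
    qmax = 2 ** (bits - 1) - 1
    keep = outlier_columns(X, k)
    rest = [abs(v) for row in X for j, v in enumerate(row) if j not in keep]
    scale = max(rest) / qmax if rest and max(rest) > 0 else 1.0
    return [
        [v if j in keep else max(-qmax, min(qmax, round(v / scale))) * scale
         for j, v in enumerate(row)]
        for row in X
    ]


def sq_error(X: Sequence[Sequence[float]], Y: Sequence[Sequence[float]]) -> float:
    return sum((a - b) ** 2 for rx, ry in zip(X, Y) for a, b in zip(rx, ry))


if __name__ == "__main__":
    X = [[0.1, 8.0, -0.2], [0.3, -9.5, 0.05]]  # column 1 holds the outliers
    for k in (0, 1):
        print(k, sq_error(X, hybrid_quantize(X, k)))
```

Keeping the single outlier column reduces the squared error on this toy matrix from about 0.16 to under 0.001, because the remaining values no longer have to share a scale sized for ±9.5.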

5. Experimental Results and Case Studies

Comprehensive evaluations of Bitnet.cpp demonstrate the practical benefits of ternary quantization on both inference speed and memory efficiency while maintaining competitive accuracy.

Experimental setups typically deploy quantized models on platforms equipped with NVIDIA GPUs and multi-core CPUs. Models transformed with Bitnet.cpp's ternary quantization show notable improvements: per-step inference latency drops from about 20 ms to around 12–13 ms, throughput increases by a factor of 1.5× to 2×, and the memory footprint shrinks substantially, often by up to 3×, compared with full-precision or even 8-bit quantized models (Gravel, 2021; Gruppi et al., 2021).
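Assuming steps execute sequentially, throughput is the reciprocal of per-step latency, so the latency figures above translate directly into a speedup factor. This worked check uses only the numbers quoted in the text:

$$
\text{speedup} = \frac{t_{\text{before}}}{t_{\text{after}}}: \qquad \frac{20\ \text{ms}}{13\ \text{ms}} \approx 1.54\times, \qquad \frac{20\ \text{ms}}{12\ \text{ms}} \approx 1.67\times
$$

Both values fall inside the reported 1.5× to 2× range; under this assumption, reaching 2× would require gains beyond what the quoted per-step latency alone accounts for.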

Two particularly illustrative case studies include:

In a real-time chatbot application, ternary quantization reduced system latency from 25 ms (with 8-bit quantization) to 15 ms, while decreasing memory usage by approximately 60%. The result was a markedly smoother user interaction, critical for real-time responsiveness.

Similarly, a mobile language processing tool benefited from compressing its model from 800 MB to 250 MB. This reduction enabled faster loading and lower energy consumption while keeping task-specific accuracy within acceptable thresholds (Arjona et al., 2021).
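Expressed as relative changes, the two case studies work out as follows (arithmetic on the quoted figures only):

$$
\frac{25 - 15}{25} = 40\%\ \text{lower latency} \ \left(\tfrac{25}{15} \approx 1.67\times\ \text{faster}\right), \qquad \frac{800\ \text{MB}}{250\ \text{MB}} = 3.2\times, \quad \frac{800 - 250}{800} = 68.75\%\ \text{smaller}
$$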

These findings affirm that ternary quantization, as implemented in Bitnet.cpp, delivers substantial efficiency gains with only minor performance trade-offs, making it a robust solution for edge deployment.

6. Challenges and Limitations

Despite its promising benefits, ternary quantization encounters several challenges that warrant further attention.

One major concern is the intrinsic loss of granularity when continuous weights are mapped to a ternary set. This reduced representational richness can cause optimization difficulties, because the discrete quantized parameters complicate traditional training dynamics. Researchers have addressed these issues with specialized methods such as modified backpropagation rules (for example, the straight-through estimator, which passes gradients through the quantizer as if it were the identity) and stochastic gradient approximations, though some performance degradation remains inevitable in complex tasks.
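One stochastic approach from the ternary-network literature samples each ternary weight instead of rounding deterministically, so that the quantized weight equals the latent weight in expectation. The sketch below assumes latent weights clipped to [-1, 1] and a seeded `random.Random` generator; the function name `stochastic_ternarize` is hypothetical.

```python
import random
from typing import List, Sequence


def stochastic_ternarize(w: Sequence[float], rng: random.Random) -> List[int]:
    """Sample t in {-1, 0, +1} with P(t = sign(w)) = |w|, so that E[t] = w for w in [-1, 1]."""
    out = []
    for v in w:
        v = max(-1.0, min(1.0, v))  # clip latent weights to the ternary range first
        if rng.random() < abs(v):
            out.append(1 if v > 0 else -1)
        else:
            out.append(0)
    return out


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed for reproducibility
    w = [0.3, -0.7, 0.0]
    n = 20000
    samples = [stochastic_ternarize(w, rng) for _ in range(n)]
    means = [sum(s[i] for s in samples) / n for i in range(len(w))]
    print(means)  # each mean lies close to the corresponding latent weight
```

Each individual sample is still ternary, but averaged over draws the quantizer is an unbiased estimate of the latent weight, unlike deterministic rounding.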

Hardware support for ternary arithmetic currently lags behind that for 8-bit and 16-bit operations. Many existing accelerators and deep learning frameworks are not fully optimized for the unique demands of ternary computations, often necessitating custom toolchains that can introduce inefficiencies and additional engineering overhead.

Furthermore, there exists a critical trade-off between model compression and accuracy retention. While aggressive quantization achieves significant reductions in memory and latency, it inevitably leads to some loss in performance. Striking the right balance requires careful, application-specific adjustments, and in scenarios demanding high precision, the drop in predictive accuracy may limit the suitability of ternary models.

7. Future Directions and Developments

The evolution of ternary quantization techniques is poised to advance through a combination of algorithmic innovation, hardware specialization, and integrated software frameworks.

Research into adaptive quantization strategies promises to further mitigate accuracy loss by dynamically adjusting thresholds during training and inference. These methods may enable a more nuanced allocation of precision across layers, optimizing the balance between efficiency and representational power (Wu et al., 2019).
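A simple way to picture layer-wise precision allocation is a greedy loop that spends a fixed bit budget on the most sensitive layers first. The layer names, parameter counts, sensitivity scores, and candidate bit-widths (2, 4, 8) below are all hypothetical, and this greedy rule is a stand-in for illustration, not the method of Wu et al.

```python
from typing import Dict, Sequence


def allocate_bits(sensitivity: Dict[str, float], params: Dict[str, int],
                  budget_bits: int, choices: Sequence[int] = (2, 4, 8)) -> Dict[str, int]:
    """Greedy mixed-precision allocation under a total weight-storage budget (in bits)."""
    bits = {name: choices[0] for name in params}  # every layer starts at the lowest precision
    used = sum(params[name] * bits[name] for name in params)
    upgraded = True
    while upgraded:
        upgraded = False
        # Try layers from most to least sensitive; upgrade the first one that still fits.
        for name in sorted(params, key=lambda n: sensitivity[n], reverse=True):
            level = choices.index(bits[name])
            if level + 1 == len(choices):
                continue  # already at the highest precision
            extra = params[name] * (choices[level + 1] - bits[name])
            if used + extra <= budget_bits:
                bits[name] = choices[level + 1]
                used += extra
                upgraded = True
                break
    return bits


if __name__ == "__main__":
    # Hypothetical layers: parameter counts and sensitivity scores are made up.
    params = {"embed": 100, "attn": 100, "mlp": 200}
    sensitivity = {"embed": 0.9, "attn": 0.5, "mlp": 0.1}
    print(allocate_bits(sensitivity, params, budget_bits=1600))
    # {'embed': 8, 'attn': 4, 'mlp': 2}
```

The least sensitive (and here largest) layer stays at 2 bits, so most of the parameters remain at low precision while the budget goes where the assumed sensitivity is highest.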

In parallel, advances in dedicated hardware, such as processors and accelerators explicitly designed for low-precision arithmetic, are expected to complement these algorithmic developments. FPGA and ASIC implementations tailored for ternary operations should further improve speed and energy efficiency, facilitating broader adoption of quantized LLMs in edge and mobile settings (Peng et al., 2021).

Systems like Bitnet.cpp stand to benefit from ongoing community contributions and open-source collaboration, driving iterative refinements in quantization algorithms and system integration. Enhancements in modular design and seamless interoperability with leading frameworks like TensorFlow and PyTorch will be key to ensuring that these systems remain adaptable to the fast-evolving landscape of AI deployment.

8. Conclusion

The convergence of innovative ternary quantization techniques and specialized systems such as Bitnet.cpp marks a significant shift in the efficient deployment of LLMs. By reducing weights to the discrete values -1, 0, and +1, such methods dramatically decrease memory usage and computational requirements, making advanced LLMs accessible in resource-constrained environments such as edge devices. Experimental evidence indicates that these benefits come with only marginal accuracy trade-offs while delivering substantial improvements in inference speed and system scalability.

As research continues to refine quantization strategies and drive hardware enhancements, the prospects for efficient edge inference are increasingly promising. The integration of adaptive methodologies, hybrid quantization schemes, and dedicated low-precision hardware is set to unlock new applications and expand the reach of advanced AI beyond traditional data centers. In this light, the work embodied by Bitnet.cpp represents not only a technical achievement but also a foundational step towards a more energy-efficient and accessible future for artificial intelligence.