An Evaluation of OCR Capabilities in Large Multimodal Models: Introduction of OCRBench v2

The paper "OCRBench v2: An Improved Benchmark for Evaluating Large Multimodal Models on Visual Text Localization and Reasoning" makes a substantial contribution to the evaluation of Large Multimodal Models (LMMs) on Optical Character Recognition (OCR) tasks. Previous benchmarks have shown that LMMs are strong at text recognition, but they have not adequately explored more complex tasks such as text localization, handwritten content extraction, and logical reasoning. The authors therefore present OCRBench v2, a comprehensive bilingual text-centric benchmark that aims to close the gaps left by existing evaluations.

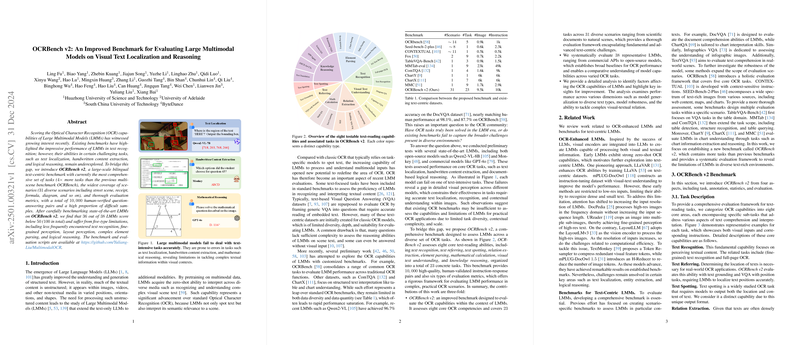

OCRBench v2 is distinguished by its broad coverage: it features four times as many tasks as its predecessor, OCRBench, and spans 31 scenarios, from street scenes to scientific diagrams. It comprises 10,000 human-verified question-answering pairs and uses evaluation metrics tailored to each task type.

After evaluating 38 state-of-the-art LMMs, the authors report that 36 of them score below 50 out of 100. The results expose five key areas of limitation: recognition of less frequently encountered text, fine-grained perception, layout perception, complex element parsing, and logical reasoning. These findings show that, despite recent advances, LMMs cannot yet handle the full range of challenges found in diverse text-rich environments.

Key Contributions and Methodology

OCRBench v2 offers a rigorous framework that breaks down OCR capabilities into eight core areas: text recognition, text referring, text spotting, relation extraction, element parsing, mathematical calculation, visual text understanding, and knowledge reasoning. This categorization is insightful for dissecting the strengths and challenges of current LMMs. The benchmark's methodological breadth ensures that various aspects of visual text processing are comprehensively evaluated, pushing beyond merely recognizing text to understanding its context and details within broader scenarios.

The benchmark uses a range of metrics matched to the nature of each task, including TEDS (Tree-Edit-Distance-based Similarity) for parsing tasks and IoU (intersection over union) scores for text localization. For tasks involving logical reasoning and comprehension, metrics such as BLEU, METEOR, and ANLS (Average Normalized Levenshtein Similarity) are employed.
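To make two of the metrics named above concrete, the following is a minimal sketch of IoU for axis-aligned boxes and of ANLS for short text answers. It follows the commonly used definitions of both metrics and is not taken from the OCRBench v2 evaluation code. The function names, the `(x_min, y_min, x_max, y_max)` box format, the lowercasing and whitespace stripping, and the 0.5 ANLS threshold are all assumptions made for illustration; the benchmark's own scripts may normalize inputs differently.

```python
from typing import List, Sequence, Tuple

# Assumed box format: (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix_min, iy_min = max(a[0], b[0]), max(a[1], b[1])
    ix_max, iy_max = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so disjoint boxes yield an empty intersection.
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def levenshtein(s: str, t: str) -> int:
    """Edit distance computed row by row with dynamic programming."""
    prev = list(range(len(t) + 1))
    for i, cs in enumerate(s, 1):
        curr = [i]
        for j, ct in enumerate(t, 1):
            curr.append(min(prev[j] + 1,                # deletion
                            curr[j - 1] + 1,            # insertion
                            prev[j - 1] + (cs != ct)))  # substitution
        prev = curr
    return prev[-1]


def anls(predictions: Sequence[str],
         references: Sequence[List[str]],
         threshold: float = 0.5) -> float:
    """Average Normalized Levenshtein Similarity.

    Each prediction is compared with every acceptable reference answer;
    the best similarity is kept, and similarities whose normalized
    distance reaches the threshold are counted as 0.
    """
    scores = []
    for pred, refs in zip(predictions, references):
        best = 0.0
        p = pred.strip().lower()  # assumed normalization
        for ref in refs:
            r = ref.strip().lower()
            denom = max(len(p), len(r))
            nl = levenshtein(p, r) / denom if denom else 0.0
            best = max(best, 1.0 - nl if nl < threshold else 0.0)
        scores.append(best)
    return sum(scores) / len(scores) if scores else 0.0


if __name__ == "__main__":
    print(box_iou((0, 0, 2, 2), (1, 1, 3, 3)))
    print(anls(["hello", "helo"], [["hello"], ["hello"]]))
```

The threshold in `anls` is what separates it from a plain edit-distance score: a prediction that is mostly wrong earns no partial credit, while small recognition slips are penalized only in proportion to their size.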

Implications and Future Directions

The authors effectively demonstrate that LMMs, despite their zero-shot capabilities, still face difficulties in tasks that demand higher-order text understanding and reasoning, often required in real-world applications. The insights from OCRBench v2 imply that further enhancement is needed in developing LMMs that can execute fine-grained visual-textual analysis, perceive complex spatial relationships, and engage in logical reasoning with textual content.

Practically, this research points future work toward LMM architectures that handle high-resolution inputs, use tokens more efficiently, and benefit from better task-specific pretraining datasets. Theoretically, it emphasizes the importance of continued exploration into models that unify visual and textual processing more effectively, perhaps by incorporating more sophisticated contextual understanding mechanisms or hybrid approaches that combine traditional OCR techniques with LMMs.

In conclusion, OCRBench v2 is a critical resource for advancing multimodal AI and for bringing more demanding OCR tasks within the reach of future LMMs. It gives the research community a benchmark that exposes the complexities of visual text environments and compels researchers to address them, paving the way for more robust and intelligent multimodal systems.