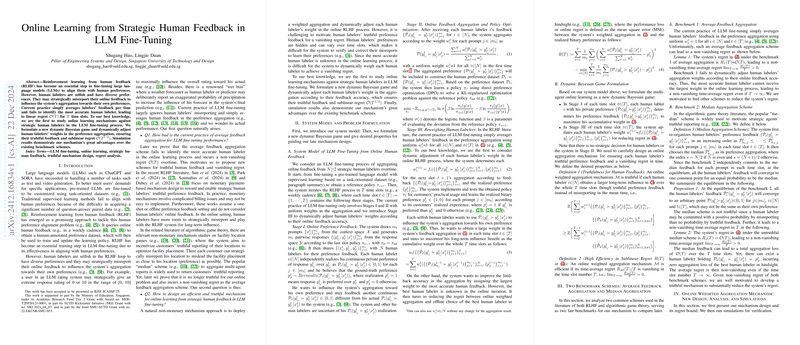

The paper "Online Learning from Strategic Human Feedback in LLM Fine-Tuning" tackles the challenge of aligning large language models (LLMs) such as ChatGPT more effectively with human preferences through Reinforcement Learning from Human Feedback (RLHF). The authors identify a critical problem in the RLHF process: human labelers are selfish and have diverse preferences, so they may strategically misreport their feedback to skew the system's preference aggregation toward their own preferences. Current practice simply averages labelers' feedback in each time slot without distinguishing how accurate different labelers are, which leads to linear regret $\mathcal{O}(T)$ over $T$ time slots.
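For reference, regret in this kind of online setting is usually measured against the best single labeler in hindsight. The notation below is assumed for illustration, and the paper's exact loss and definition may differ: $y_t$ is the realized preference in slot $t$, $r_{i,t}$ is labeler $i$'s report, $\hat{y}_t$ is the aggregated preference, and $\ell$ is a loss bounded in $[0,1]$.

$$
\mathrm{Reg}(T) = \sum_{t=1}^{T} \ell(\hat{y}_t, y_t) \;-\; \min_{i} \sum_{t=1}^{T} \ell(r_{i,t}, y_t)
$$

If averaging incurs a constant excess loss $c > 0$ in every slot relative to the most accurate labeler, then $\mathrm{Reg}(T) = cT$, which is linear in $T$. A sublinear bound such as $\mathcal{O}(T^{1/2})$ instead gives $\mathrm{Reg}(T)/T = \mathcal{O}(T^{-1/2}) \to 0$, so the per-slot gap to the best labeler vanishes as $T$ grows.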

To address this, the authors formulate a dynamic Bayesian game and introduce an online learning mechanism that adjusts the weight of each human labeler's feedback according to its accuracy. Because a labeler's long-term influence on the aggregated preference is tied to the accuracy of their feedback, misreporting reduces their future influence, which gives labelers an incentive to report truthfully. The mechanism is non-monetary, which avoids the billing complexity of monetary incentive designs, and it works in an online setting where labelers interact with the system repeatedly.
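The reweighting idea can be sketched with a multiplicative-weights (Hedge-style) update, a standard online-learning rule swapped in here as an assumption; the paper's actual update rule and its incentive analysis are not reproduced. The function names `aggregate` and `update_weights`, the absolute-error loss, and the assumption that the realized preference is observed at the end of each slot are all hypothetical.

```python
import math


def aggregate(weights, reports):
    """Weighted average of labelers' reported preferences (each in [0, 1])."""
    return sum(w * r for w, r in zip(weights, reports)) / sum(weights)


def update_weights(weights, reports, observed, eta):
    """Hypothetical accuracy-based update: w_i <- w_i * exp(-eta * |r_i - observed|).

    Labelers whose reports lie far from the observed preference lose
    influence in later rounds. Weights are renormalized to sum to 1.
    """
    scaled = [w * math.exp(-eta * abs(r - observed)) for w, r in zip(weights, reports)]
    total = sum(scaled)
    return [w / total for w in scaled]


# One illustrative round with three labelers (all numbers are made up).
weights = [1 / 3, 1 / 3, 1 / 3]
reports = [0.7, 0.9, 0.1]  # labeler 0 is accurate, labeler 2 misreports heavily
observed = 0.7             # preference assumed to be revealed at the end of the slot
before = aggregate(weights, reports)
weights = update_weights(weights, reports, observed, eta=0.5)
# Re-aggregating the same reports shows how the new weights shift the estimate.
after = aggregate(weights, reports)
```

The step size `eta` controls how sharply a single round's error shifts influence: a larger value sidelines inaccurate labelers faster but also overreacts to honest noise.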

Key contributions and findings of the paper include:

- Mechanism Design:
  - A weighted aggregation mechanism dynamically adjusts each labeler's weight according to the accuracy of their reported preferences.
  - By reweighting the aggregation in every round, the mechanism ensures truthful reporting and achieves sublinear regret $\mathcal{O}(T^{1/2})$, a significant improvement over the linear regret of simple averaging.
  - A step-size parameter, set according to the number of labelers and the number of time slots $T$, controls how strongly each round's errors shift the weights and thereby keeps the regret low.

- Theoretical Analysis:
  - The authors prove that the mechanism is truthful (labelers have no incentive to misreport) and efficient, achieving lower regret than traditional feedback aggregation methods.
  - They show that weighting feedback dynamically by its accuracy is crucial for minimizing regret in LLM fine-tuning.

- Simulation Results:
  - Simulations show the proposed mechanism outperforming existing benchmarks such as average and median feedback aggregation. These benchmarks incur non-vanishing regret (their regret per time slot does not shrink as $T$ grows), indicating that they fail to aggregate feedback truthfully and accurately.
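The benchmark comparison can be illustrated with a small simulation. This is a sketch under stated assumptions, not the paper's experimental setup: one honest labeler reports the realized preference with small noise, three hypothetical labelers inflate their reports by fixed biases (they do not adapt strategically), and the weighted scheme is the textbook exponentially weighted average forecaster with step size $\eta = \sqrt{8 \ln N / T}$, for which regret against the best labeler is at most $\sqrt{(T/2)\ln N}$ when losses lie in $[0,1]$ and are convex in the estimate. The names `simulate` and `clip` and all parameter values are illustrative.

```python
import math
import random
import statistics


def clip(x):
    """Keep a report inside the valid preference range [0, 1]."""
    return max(0.0, min(1.0, x))


def simulate(T=2000, seed=0, biases=(0.3, 0.4, 0.5), honest_noise=0.05):
    """Return each scheme's regret against the best single labeler.

    Hypothetical setup: labeler 0 is honest (small uniform noise); the other
    labelers always inflate their report by a fixed bias.
    """
    rng = random.Random(seed)
    n_labelers = 1 + len(biases)
    eta = math.sqrt(8 * math.log(n_labelers) / T)  # textbook Hedge step size
    weights = [1.0 / n_labelers] * n_labelers
    labeler_loss = [0.0] * n_labelers
    scheme_loss = {"average": 0.0, "median": 0.0, "weighted": 0.0}

    for _ in range(T):
        p = rng.random()  # realized preference this slot (assumed observable)
        reports = [clip(p + rng.uniform(-honest_noise, honest_noise))]
        reports += [clip(p + b) for b in biases]

        estimates = {
            "average": statistics.fmean(reports),
            "median": statistics.median(reports),
            "weighted": sum(w * r for w, r in zip(weights, reports)),  # weights sum to 1
        }
        for name, estimate in estimates.items():
            scheme_loss[name] += abs(estimate - p)

        losses = [abs(r - p) for r in reports]
        for i, loss in enumerate(losses):
            labeler_loss[i] += loss

        # Multiplicative update: a larger error means less weight next slot.
        weights = [w * math.exp(-eta * loss) for w, loss in zip(weights, losses)]
        total = sum(weights)
        weights = [w / total for w in weights]

    best = min(labeler_loss)
    return {name: loss - best for name, loss in scheme_loss.items()}


if __name__ == "__main__":
    for name, regret in simulate().items():
        print(f"{name:>8}: regret {regret:.1f}")
```

Because the biased reports pull both the mean and the median away from the realized preference in almost every slot, both benchmarks accumulate regret roughly in proportion to `T`, while the weighted scheme shifts nearly all weight to the honest labeler. Rerunning with a larger `T` is an easy way to see linear versus sublinear growth in this toy setting.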

The simulations indicate that the proposed mechanism can considerably improve LLM fine-tuning by mitigating the impact of strategic misreporting from human labelers and aligning models more closely with labelers' true preferences. This matches practical needs, where LLMs are expected to adapt to user feedback and preferences in real time, and it offers a new approach to handling strategic behavior in human feedback loops.