Introduction

Aligning large language models (LLMs) with human preferences remains a significant challenge. Existing methods often rely on Reinforcement Learning from Human Feedback (RLHF), but RLHF is complex to implement and computationally expensive. In their paper, Wenhao Liu et al. from the School of Computer Science at Fudan University introduce a method that aims to streamline this process. The method, Representation Alignment from Human Feedback (RAHF), sidesteps these RLHF challenges by targeting the representations inside the LLM and altering them so that they more accurately reflect human preferences.

Method and Related Work

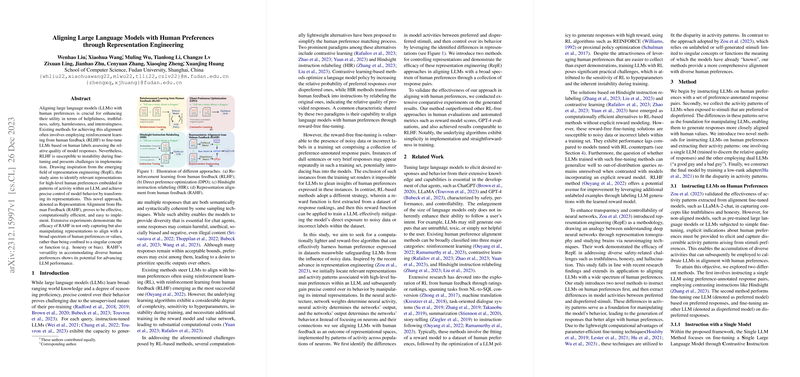

The paper identifies latent representations in the LLM that correspond to human preferences and then manipulates them. Because RAHF alters these internal representations instead of directly targeting the model's outputs, it can capture and steer a broad spectrum of human values and preferences rather than being confined to a single concept. This contrasts with RLHF and related techniques, which fine-tune models on human-labeled data and rankings to elicit the desired response and may thereby oversimplify how human preferences are expressed.
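To make "identifying a representation" concrete, here is a minimal sketch of one simple way to find a direction in activation space: take the difference between the mean hidden state of preferred examples and that of dispreferred examples, then score new hidden states by projecting onto it. This mean-difference approach is an assumption for illustration, not necessarily the paper's exact extraction procedure; the function names are hypothetical, and random vectors stand in for real LLM activations.

```python
import math
import random

Vector = list[float]


def mean(vectors: list[Vector]) -> Vector:
    """Element-wise mean of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def preference_direction(preferred: list[Vector], dispreferred: list[Vector]) -> Vector:
    """Unit vector pointing from the mean dispreferred hidden state
    toward the mean preferred hidden state."""
    delta = [p - q for p, q in zip(mean(preferred), mean(dispreferred))]
    norm = math.sqrt(sum(x * x for x in delta))
    return [x / norm for x in delta]


def preference_score(hidden: Vector, direction: Vector) -> float:
    """Projection of one hidden state onto the preference direction."""
    return sum(h * u for h, u in zip(hidden, direction))


# Toy stand-ins for hidden states: 8-dimensional vectors in which
# "preferred" responses are shifted by +3 along the first coordinate.
random.seed(0)
DIM = 8
preferred = [[random.gauss(0, 1) + (3.0 if i == 0 else 0.0) for i in range(DIM)]
             for _ in range(200)]
dispreferred = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(200)]

direction = preference_direction(preferred, dispreferred)
dominant_axis = max(range(DIM), key=lambda i: abs(direction[i]))
avg_pref = sum(preference_score(h, direction) for h in preferred) / len(preferred)
avg_dispref = sum(preference_score(h, direction) for h in dispreferred) / len(dispreferred)
print(dominant_axis, round(avg_pref, 2), round(avg_dispref, 2))
```

The recovered direction points along the first coordinate and separates the two groups because the toy data were built with a known preference axis. With a real model, which layers to read and how to pair examples are design choices this sketch does not address.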

The authors describe two variants of RAHF. The first uses a single instruction-tuned LLM that can generate both preferred and dispreferred responses; the second uses two LLMs, one tuned toward preferred responses and the other toward dispreferred ones. In both variants, the LLM's behaviour is fine-tuned by manipulating its underlying representations, an approach intended to avoid direct exposure to potentially biased or noisy data.
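The two variants differ mainly in where the contrasting hidden states come from. The sketch below shows both, followed by one plausible training signal: a mean-squared-error loss that pulls the model's hidden state toward `original + alpha * delta`. Everything here is an assumption for illustration: `HiddenStateFn`, the toy models, the instruction strings, and the MSE objective are hypothetical, and a real implementation would read activations from specific layers of an actual LLM and update its weights by gradient descent.

```python
from typing import Callable

Vector = list[float]
# Hypothetical interface: a "model" maps input text to one layer's hidden state.
HiddenStateFn = Callable[[str], Vector]


def difference(a: Vector, b: Vector) -> Vector:
    return [x - y for x, y in zip(a, b)]


def delta_single_model(model: HiddenStateFn, query: str,
                       good_instruction: str, bad_instruction: str) -> Vector:
    """Single-LLM variant: same model, same query, contrasting instructions."""
    return difference(model(good_instruction + query), model(bad_instruction + query))


def delta_dual_models(preferred_model: HiddenStateFn,
                      dispreferred_model: HiddenStateFn, query: str) -> Vector:
    """Dual-LLM variant: same query, two differently tuned models."""
    return difference(preferred_model(query), dispreferred_model(query))


def alignment_loss(current: Vector, original: Vector, delta: Vector, alpha: float) -> float:
    """Mean squared error between the trainable model's hidden state and the
    target representation original + alpha * delta (assumed objective)."""
    target = [o + alpha * d for o, d in zip(original, delta)]
    return sum((c - t) ** 2 for c, t in zip(current, target)) / len(current)


def toy_model(bias: float) -> HiddenStateFn:
    """Toy stand-in: coordinate 0 reacts to the word 'helpful' (plus a bias),
    coordinate 1 to input length."""
    def hidden_state(text: str) -> Vector:
        return [bias + (1.0 if "helpful" in text else 0.0), len(text) / 100]
    return hidden_state


query = "How do I boil an egg?"
base = toy_model(0.0)
delta_single = delta_single_model(base, query,
                                  "Answer in a helpful way. ", "Answer in a rude way. ")
delta_dual = delta_dual_models(toy_model(0.5), toy_model(-0.5), query)

original = base(query)
shifted = [o + d for o, d in zip(original, delta_dual)]
print(delta_single, delta_dual)
print(alignment_loss(original, original, delta_dual, alpha=1.0))  # not yet shifted: loss > 0
print(alignment_loss(shifted, original, delta_dual, alpha=1.0))   # exactly at target: loss 0
```

In this toy setup the single-model contrast also picks up an unrelated length difference between the two instructions, which hints at why the choice of contrasting prompts or models matters.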

Experiments and Results

RAHF is evaluated against several competing methods using human evaluation and automated metrics, namely reward-model scores and GPT-4 judgments. RAHF, and the RAHF-DualLLMs variant in particular, consistently outperforms the RL-free approaches under both reward models used for scoring. DPO does achieve higher rewards at higher sampling temperatures, but because high-temperature sampling is less stable, results at lower temperatures are preferred, and in that regime RAHF-DualLLMs performs strongly.
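Why is high-temperature sampling less stable? In standard temperature sampling, the logits are divided by a temperature T before the softmax, so a larger T flattens the next-token distribution and makes low-probability tokens more likely to be drawn. This minimal sketch uses made-up logits; it illustrates the general mechanism, not the paper's specific decoding settings.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Divide logits by the temperature, then apply a numerically stable softmax."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs: list[float]) -> float:
    """Shannon entropy in nats; higher means a flatter, less predictable distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


# Made-up next-token logits, for illustration only.
logits = [4.0, 2.0, 1.0, 0.5]
for t in (0.5, 1.0, 2.0):
    probs = softmax_with_temperature(logits, t)
    print(f"T={t}: top-token p={max(probs):.3f}, entropy={entropy(probs):.3f}")
```

As T grows, the top token's probability falls and the entropy rises, so repeated samples diverge more from one another, which is one reason scores measured at high temperature can be noisy.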

The paper also reports extensive human evaluations that confirm RAHF's advantage over RL-free techniques, suggesting better alignment with complex human preferences. Human evaluators also returned 'tie' judgments more often than GPT-4, which more readily picked one response over the other; this suggests that human assessment captures nuances that automated metrics may miss.
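Ties affect how pairwise results read. One common convention (an assumption here; the paper may tabulate its results differently) is to report win/tie/lose fractions, sometimes alongside a win rate that counts each tie as half a win. Using invented verdicts for ten hypothetical comparisons, this sketch shows how a judge that ties often and one that rarely ties can produce quite different raw win fractions.

```python
from collections import Counter


def summarize(judgments: list[str]) -> dict[str, float]:
    """Fractions of win/tie/lose verdicts, plus a win rate counting each tie as half a win."""
    counts = Counter(judgments)
    n = len(judgments)
    win, tie, lose = (counts[label] / n for label in ("win", "tie", "lose"))
    return {"win": win, "tie": tie, "lose": lose, "win_rate_ties_half": win + 0.5 * tie}


# Invented verdicts for ten hypothetical comparisons (not the paper's data).
human = ["win"] * 5 + ["tie"] * 4 + ["lose"] * 1
gpt4 = ["win"] * 7 + ["tie"] * 1 + ["lose"] * 2

for judge, verdicts in (("human", human), ("GPT-4", gpt4)):
    summary = summarize(verdicts)
    print(judge, {k: round(v, 2) for k, v in summary.items()})
```

With these made-up numbers the raw win fractions differ widely (0.5 vs 0.7), while the tie-adjusted rates are much closer (0.7 vs 0.75).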

Conclusion

This research moves away from the resource-heavy reinforcement learning techniques conventionally used to align LLMs with human preferences. RAHF instead builds on representation engineering to improve how well the model's behaviour aligns with human values. Because it outperforms the RL-free fine-tuning methods it was compared against without requiring reinforcement learning, RAHF is a compelling alternative that may help drive future work on controllable LLMs. The paper therefore marks a significant step toward understanding and controlling LLM outputs in a way that is better attuned to human ethical and preference frameworks.