OmniACT: Setting New Benchmarks for Multimodal Autonomous Agents in Desktop and Web Environments

Overview

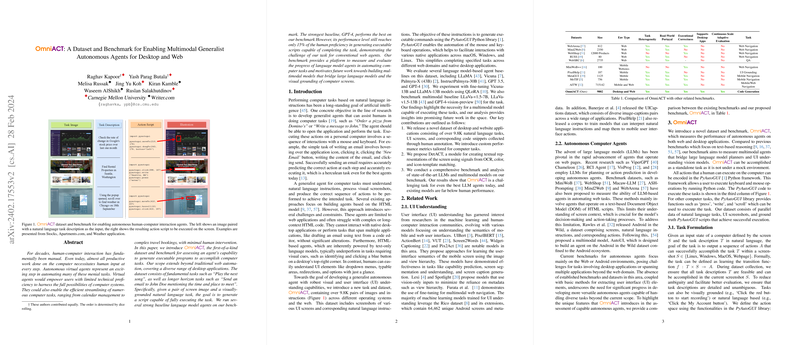

Recent AI research has aimed to simplify human-computer interaction by developing autonomous virtual agents that can execute tasks with minimal human input. These tasks range from mundane activities like playing music to more complex sequences such as sending emails, and all of them depend on the agent's ability to interpret natural language instructions and turn them into executable actions. Despite the proliferation of such systems, the gap between human proficiency and autonomous agents remains wide, particularly in multimodal contexts involving both desktop and web applications. To help close this gap, the paper introduces OmniACT, a dataset and benchmark designed to assess how well autonomous agents can generate executable programs for computer tasks from visually grounded natural language instructions.

OmniACT Dataset: A New Frontier

The OmniACT dataset covers a wide array of tasks across desktop and web applications. With over 9.8K pairs of user interface (UI) screenshots and corresponding natural language instructions, OmniACT extends beyond conventional web automation. Its distinctive challenge is that tasks span different operating systems (macOS, Windows, Linux) as well as web domains, making it the first dataset to target such a diverse range of applications for autonomous agents.

Methodological Insights

The paper describes its dataset preparation in detail, focusing on the compilation of tasks that span multiple domains across both desktop and web applications. UI elements were carefully annotated and tasks were collected through human annotation, which keeps the dataset relevant and suitably complex. Key to this process is that each task is expressed as an executable script built on PyAutoGUI, a pragmatic way to automate user interactions across varied applications.
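To make the idea of a PyAutoGUI-based task script concrete, here is a minimal sketch under stated assumptions. The `UIElement` schema, the `render_script` helper, the step format, and the example task are all hypothetical and are not taken from the paper. Only `pyautogui.click`, `pyautogui.write`, and `pyautogui.press` are real PyAutoGUI functions. The sketch emits the script as text rather than running it, so it needs neither PyAutoGUI nor a display.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class UIElement:
    """An annotated on-screen element with a pixel bounding box (hypothetical schema)."""
    label: str
    left: int
    top: int
    width: int
    height: int

    def center(self) -> Tuple[int, int]:
        # Click targets are taken as the center of the bounding box.
        return (self.left + self.width // 2, self.top + self.height // 2)


def render_script(steps: List[Tuple[str, str]], elements: List[UIElement]) -> str:
    """Render abstract (action, argument) steps as PyAutoGUI call strings."""
    by_label = {e.label: e for e in elements}
    lines = []
    for action, arg in steps:
        if action == "click":
            x, y = by_label[arg].center()
            lines.append(f"pyautogui.click({x}, {y})")
        elif action == "write":
            lines.append(f"pyautogui.write({arg!r})")
        elif action == "press":
            lines.append(f"pyautogui.press({arg!r})")
        else:
            raise ValueError(f"unsupported action: {action}")
    return "\n".join(lines)


# Hypothetical task: "Search for a jazz playlist" on a music app screenshot.
elements = [UIElement("search box", left=400, top=20, width=200, height=30)]
steps = [("click", "search box"), ("write", "jazz playlist"), ("press", "enter")]

script = render_script(steps, elements)
print(script)
```

The design point this illustrates is that the agent's output is a short program whose click coordinates must be grounded in the screenshot, which is why the instruction text alone is not enough to solve a task.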

Performance Benchmarking

By evaluating several state-of-the-art LLM-based agents, including GPT-4, the paper shows how difficult the OmniACT benchmark is. Although GPT-4 outperforms the other baselines, it reaches only 15% of human proficiency, which underscores how challenging the OmniACT tasks are for current AI models. This finding illustrates the dataset's complexity and highlights the need for multimodal models that can better understand and interact with both visual and textual information.

Implications and Future Directions

The implications of this research are twofold. Practically, improving autonomous agents' performance on OmniACT tasks could change how we interact with computers, making technology more accessible to users with limited technical skills and streamlining routine tasks. Theoretically, the research underscores the importance of developing more sophisticated multimodal models that integrate visual cues with natural language processing. As such models evolve, AI's ability to understand and navigate complex, multimodal environments can be expected to improve substantially.

Concluding Thoughts

OmniACT represents a substantial step toward generalist autonomous agents capable of executing a broad spectrum of computer tasks. By providing a challenging benchmark, the dataset both supports the evaluation of current AI models and sets a clear direction for future research. Enhancing the capabilities of autonomous agents in this domain could have far-reaching implications, from making technology more widely accessible to automating laborious tasks.