A Comprehensive Survey on Evaluation of Multimodal LLMs

This paper offers a thorough examination of the evaluation paradigms for Multimodal LLMs (MLLMs) and highlights the crucial role evaluation plays in progress toward AGI. It argues that evaluation guides the development and improvement of MLLMs, which are distinguished by their ability to process multimodal inputs such as language, vision, and audio. Building on the success of pre-trained LLMs, MLLMs integrate these diverse inputs to produce more nuanced and contextually rich outputs.

The paper provides an in-depth analysis along several dimensions:

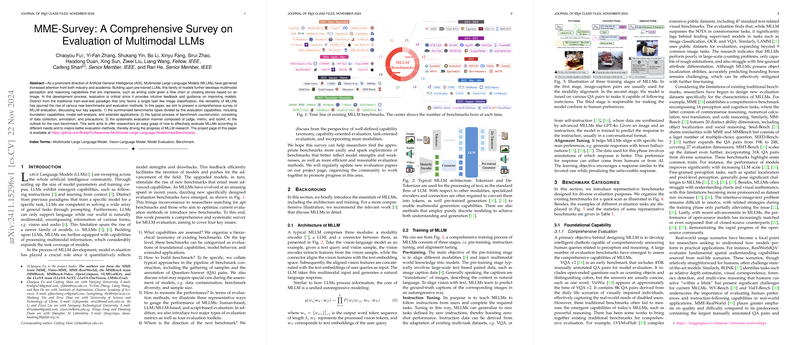

- Types of Evaluation Benchmarks: The paper groups benchmarks into three categories: foundational capabilities, model self-analysis, and extended applications. Foundational capability benchmarks, including widely used ones such as VQA v2 and MME, measure the broad perception and cognition abilities of MLLMs. Model self-analysis benchmarks, by contrast, probe weaknesses such as hallucination, bias, and safety; POPE, for example, targets object hallucination. Together, these benchmarks show how models behave across different scenarios.

- Benchmark Construction: The paper examines strategies for constructing robust evaluation benchmarks, ranging from reusing existing datasets to generating data by prompting models, and discusses the merits and challenges of each. Drawing samples from existing datasets is efficient but carries a risk of data leakage, since those samples may already appear in a model's training data.

- Evaluation Methods: Because MLLM performance is hard to assess, the authors review three families of evaluation: human evaluation, LLM/MLLM-based evaluation, and script-based evaluation. Human evaluation is valued for its reliability but is costly and time-consuming. Script-based evaluation, in contrast, is fast and consistent but can fall short when judging an answer requires nuanced interpretation.

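- Performance Metrics: MLLM evaluation relies on both deterministic and non-deterministic metrics, with accuracy, F1 score, and mAP as typical deterministic metrics. The paper also notes newer protocols such as CircularEval (introduced with the MMBench benchmark), which poses each multiple-choice question several times with the answer options circularly shifted and counts it as correct only if the model answers correctly every time. This goes beyond single-pass correctness by penalizing answers that depend on option order or lucky guesses.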
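To make the deterministic metrics concrete, the following Python sketch scores a POPE-style hallucination probe, in which the model answers yes/no questions about whether an object appears in an image and results are reported as accuracy, precision, recall, F1, and the ratio of "yes" answers. The answer normalization (any reply beginning with "yes" counts as yes) and the names `score_yes_no` and `BinaryScores` are illustrative assumptions, not POPE's official scoring script.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BinaryScores:
    accuracy: float
    precision: float
    recall: float
    f1: float
    yes_ratio: float  # fraction of "yes" predictions; a strong skew can signal answer bias


def score_yes_no(predictions: List[str], labels: List[str]) -> BinaryScores:
    """Score yes/no answers, treating "yes" (object present) as the positive class."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and of equal length")
    # Simplified normalization (an assumption): a reply counts as "yes" if it starts with "yes".
    preds = [p.strip().lower().startswith("yes") for p in predictions]
    golds = [g.strip().lower() == "yes" for g in labels]
    pairs = list(zip(preds, golds))
    tp = sum(p and g for p, g in pairs)        # said yes, object present
    fp = sum(p and not g for p, g in pairs)    # said yes, object absent (hallucination)
    fn = sum(g and not p for p, g in pairs)    # said no, object present
    correct = sum(p == g for p, g in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return BinaryScores(correct / len(pairs), precision, recall, f1, sum(preds) / len(preds))


if __name__ == "__main__":
    scores = score_yes_no(["Yes, there is a dog.", "no", "yes", "No."], ["yes", "no", "no", "yes"])
    print(scores)  # all five scores are 0.5 for this toy input
```

Reporting precision and the yes-ratio alongside accuracy matters here because a model that answers "yes" to everything can look acceptable on accuracy alone when most probed objects are present.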
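The CircularEval idea can be sketched in Python together with a simple script-based answer extractor. This is a minimal illustration under stated assumptions: the model is a hypothetical callable `ask(question, options)` that returns free-form text, the extractor naively takes the first standalone option letter in the reply, and none of the names below come from MMBench's actual code.

```python
import re
from typing import Callable, Optional, Sequence

# Hypothetical model interface (an assumption for this sketch): takes a question
# and a list of option strings, returns a free-form reply such as "The answer is B."
AskFn = Callable[[str, Sequence[str]], str]

LETTERS = "ABCDEFGHIJ"


def extract_choice(reply: str, num_options: int) -> Optional[str]:
    """Naive script-based matching: first standalone capital option letter in the reply."""
    valid = LETTERS[:num_options]
    match = re.search(rf"\b([{valid}])\b", reply)
    return match.group(1) if match else None


def circular_eval(ask: AskFn, question: str, options: Sequence[str], answer_index: int) -> bool:
    """Return True only if the model picks the correct option under every circular shift."""
    n = len(options)
    if not 0 < n <= len(LETTERS):
        raise ValueError("unsupported number of options")
    for shift in range(n):
        # Rotate left by `shift`: rotated[i] == options[(i + shift) % n].
        rotated = list(options[shift:]) + list(options[:shift])
        # The correct option therefore sits at index (answer_index - shift) % n.
        correct_letter = LETTERS[(answer_index - shift) % n]
        if extract_choice(ask(question, rotated), n) != correct_letter:
            return False  # a single miss fails the whole question
    return True


if __name__ == "__main__":
    def content_model(question: str, opts: Sequence[str]) -> str:
        # Toy model that locates the right answer by content, wherever it is listed.
        return f"The answer is {LETTERS[list(opts).index('Paris')]}."

    def position_model(question: str, opts: Sequence[str]) -> str:
        # Toy model with pure position bias: always picks the first option.
        return "A"

    print(circular_eval(content_model, "Capital of France?", ["London", "Berlin", "Paris", "Madrid"], 2))
    print(circular_eval(position_model, "Capital of France?", ["Paris", "London", "Berlin", "Madrid"], 0))
```

The position-biased toy model would be scored correct on a single pass here, because the right answer happens to be listed first, but it fails CircularEval as soon as the options rotate. The regex extractor also shows why script-based evaluation is fast but brittle: a reply that starts with the article "A" would be misread as choosing option A.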

This work has implications for both the theory and the practice of AI. By cataloguing current benchmarks and methods, it lays the groundwork for critically assessing MLLMs' strengths and weaknesses and for charting future research directions. On the practical side, it gives developers and researchers concrete guidance on building more effective and challenging benchmarks. Its outlook on future developments encourages work on complex real-world applications of MLLMs, such as nuanced speech comprehension and interaction with 3D representations.

In conclusion, the survey makes a clear case for a structured, multifaceted evaluation framework for MLLMs. It stresses that benchmarks and evaluation methods must be continually improved to keep pace with rapidly evolving MLLM capabilities. Its insights and perspectives can inform ongoing efforts to refine both the development and the evaluation of MLLMs, improving their applicability and reliability across diverse real-world contexts.