Overview of Dynamic-VLM: Simple Dynamic Visual Token Compression for VideoLLM

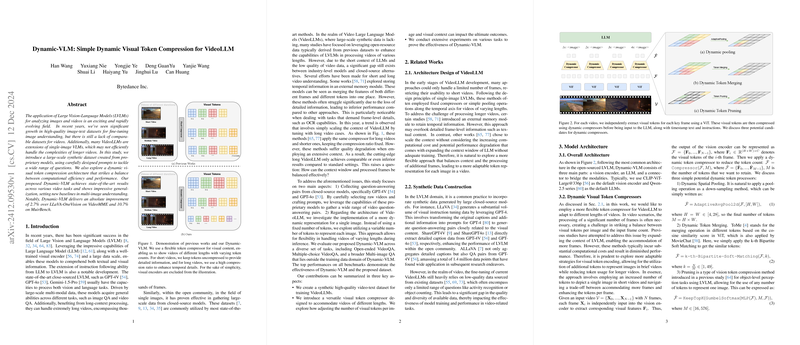

The paper introduces Dynamic-VLM, a video large language model (VideoLLM) that targets a key limitation of current systems: many of them simply extend single-image models to video without adequately modeling the temporal structure that videos present. The work makes two main contributions: a synthetic video-text dataset and a dynamic visual token compression architecture.

Core Contributions

- Synthetic Dataset Construction: The paper builds a synthetic dataset using closed-source models such as GPT-4V and GPT-4o. It addresses the current shortage of high-quality video-text data needed to train VideoLLMs. By generating question-answer pairs from raw videos, the authors cover diverse task types, including perception, reasoning, and temporal awareness.

- Dynamic Visual Token Compression: The proposed architecture adjusts the number of visual tokens according to video length. Short videos keep many tokens per frame to preserve fine detail, while long videos are compressed more aggressively so that the total token count stays manageable. This lets a single model handle a wide range of video lengths without significant performance degradation.

- Empirical Validation and Performance: The experiments report state-of-the-art results across a range of video tasks. Notably, Dynamic-VLM surpasses LLaVA-OneVision by absolute margins of 2.7% on VideoMME and 10.7% on MuirBench, which points to strong generalization across open-ended and multiple-choice video QA tasks.
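To make the length-adaptive idea concrete, here is a minimal sketch of one way such a compressor could work. It is not the paper's implementation: it assumes a fixed total token budget, a square per-frame grid of patch tokens, and adaptive average pooling as the compression operation. The function names and the 2,304-token budget are hypothetical, chosen only for illustration.

```python
import math
from typing import List

# One frame = square grid of patch tokens: grid[row][col] -> feature vector.
Grid = List[List[List[float]]]


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division for non-negative a and positive b."""
    return -(-a // b)


def tokens_per_side(num_frames: int, grid_side: int, token_budget: int) -> int:
    """Largest pooled side s with num_frames * s * s <= token_budget.

    Capped at grid_side (short videos keep full resolution) and floored at 1,
    so in this sketch extremely long videos can still exceed the budget.
    """
    if num_frames <= 0:
        raise ValueError("num_frames must be positive")
    side = math.isqrt(token_budget // num_frames)
    return max(1, min(grid_side, side))


def adaptive_avg_pool(grid: Grid, out_side: int) -> Grid:
    """Average-pool an h x w token grid down to out_side x out_side.

    Bin edges follow the common adaptive-pooling rule:
    start = floor(i * n / out), end = ceil((i + 1) * n / out).
    """
    h, w, channels = len(grid), len(grid[0]), len(grid[0][0])
    pooled: Grid = []
    for i in range(out_side):
        r0, r1 = (i * h) // out_side, ceil_div((i + 1) * h, out_side)
        row = []
        for j in range(out_side):
            c0, c1 = (j * w) // out_side, ceil_div((j + 1) * w, out_side)
            cells = [grid[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            # Mean of every token in this bin, computed channel by channel.
            row.append([sum(cell[k] for cell in cells) / len(cells)
                        for k in range(channels)])
        pooled.append(row)
    return pooled


def compress_video(frames: List[Grid], token_budget: int) -> List[Grid]:
    """Pool every frame to the same side length chosen from the frame count."""
    side = tokens_per_side(len(frames), len(frames[0]), token_budget)
    return [adaptive_avg_pool(frame, side) for frame in frames]
```

With the hypothetical 2,304-token budget and a 24×24 grid, a 4-frame clip keeps all 576 tokens per frame, while a 64-frame clip is pooled to 6×6 = 36 tokens per frame. Pooling is only one plausible choice here; a learned compressor would fit the same budgeting logic.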

Practical and Theoretical Implications

The practical implications of Dynamic-VLM are clearest in applications that require nuanced video understanding. By retaining detail in short videos while keeping computation tractable for long ones, this work lays the groundwork for more scalable and adaptable video understanding tools. Conceptually, the dynamic compression architecture treats the visual token count as something to adjust to input length rather than fix in advance, an idea that may inform efficiency strategies in related domains.

Future Developments

The approach behind Dynamic-VLM suggests several avenues for future work. One promising direction is extending adaptive token compression beyond video to inputs that mix multiple modalities. In addition, the synthetic dataset construction exemplifies a broader trend of using closed-source models to enrich training data. Future research could explore this further, refining methods for prompt engineering, synthetic data generation, and cross-modal learning.

In conclusion, Dynamic-VLM advances the processing capabilities of VideoLLMs in both practical and conceptual terms, improving how LLMs handle visual input from videos of varying length. The paper's insights on token management and dataset creation offer a useful blueprint for future work in the fast-evolving landscape of multi-modal AI systems.