Language-Guided Image Tokenization for Improved Image Generation

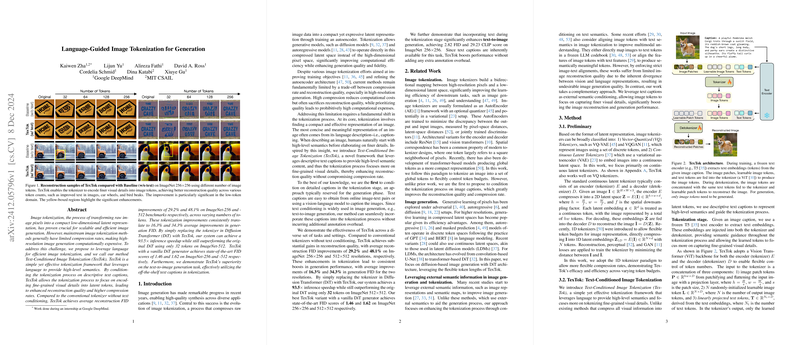

The research paper "Language-Guided Image Tokenization for Generation" introduces TexTok, an image tokenization framework that conditions the tokenizer on language to improve both tokenization and the image generation built on top of it. Its primary goal is to ease a central limitation of existing image tokenizers: the tension between compression rate and reconstruction quality.

In latent image generation, the tokenizer plays a critical role by converting pixels into a compact latent representation on which the generative model operates. However, there is a persistent trade-off: higher compression makes generation cheaper but degrades reconstruction quality. The tension is sharpest at high resolutions, where compute and efficiency become pivotal concerns. The paper proposes using language as guidance during tokenization to obtain a representation that is both compact and faithful in reconstruction.
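
To make the compression side of this trade-off concrete, the short sketch below counts how many raw pixel values each latent value has to summarize. The 32×32×4 grid corresponds to a Stable-Diffusion-style VAE with 8× spatial downsampling; the 32-token, 8-channel setting for a 1D tokenizer is a hypothetical example, not a configuration reported in the paper.

```python
def compression_ratio(height: int, width: int, channels: int,
                      num_tokens: int, token_dim: int) -> float:
    """Raw pixel values divided by latent values (higher means stronger compression)."""
    pixel_values = height * width * channels
    latent_values = num_tokens * token_dim
    return pixel_values / latent_values


# 2D VAE latent grid: 256x256 RGB -> 32x32 positions x 4 channels (SD-VAE style, f=8).
print(compression_ratio(256, 256, 3, num_tokens=32 * 32, token_dim=4))  # 48.0
# 1D tokenizer with 32 tokens; token_dim=8 is a hypothetical choice.
print(compression_ratio(256, 256, 3, num_tokens=32, token_dim=8))       # 768.0
```

Each latent value in the second setting must account for 16 times as many pixel values as in the first, which is why reconstruction quality tends to suffer as token counts shrink.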

TexTok Framework and Methodology

TexTok applies text conditioning during tokenization itself. Conventional pipelines introduce text only at the generation stage, if at all; TexTok brings it in much earlier. Because the caption already conveys high-level semantics, the latent tokens can devote their limited capacity to finer visual details, which improves reconstruction quality without significant computational overhead.
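
The sketch below illustrates one way text conditioning can enter a ViT tokenizer and detokenizer: concatenating caption embeddings with the image tokens into a single sequence, so that self-attention lets every position read the caption. It only tracks the sequence layout (which positions hold what), not the network itself. The segment order, the learnable latent tokens and patch queries, the patch size, the caption length, and the token counts are all hypothetical choices for illustration.

```python
from typing import Dict, List, Tuple


def build_layout(segments: List[Tuple[str, int]]) -> Dict[str, range]:
    """Map each named segment of a concatenated ViT input sequence to its index range."""
    layout: Dict[str, range] = {}
    start = 0
    for name, length in segments:
        layout[name] = range(start, start + length)
        start += length
    return layout


# Hypothetical sizes, chosen only for illustration.
IMAGE_SIZE = 256
PATCH_SIZE = 16
NUM_PATCHES = (IMAGE_SIZE // PATCH_SIZE) ** 2  # 16 x 16 = 256 patch tokens
NUM_LATENTS = 32                               # compressed latent tokens
NUM_TEXT = 64                                  # caption length after text encoding

# Tokenizer input (assumed): [image patches | learnable latent tokens | caption tokens].
# After the ViT, only the latent positions are kept as the image's code.
encoder = build_layout([("patches", NUM_PATCHES), ("latents", NUM_LATENTS), ("text", NUM_TEXT)])

# Detokenizer input (assumed): [learnable patch queries | latent tokens | caption tokens].
# After the ViT, only the patch-query positions are decoded back to pixels.
decoder = build_layout([("patch_queries", NUM_PATCHES), ("latents", NUM_LATENTS), ("text", NUM_TEXT)])

print(encoder["latents"])                      # range(256, 288)
print(decoder["patch_queries"])                # range(0, 256)
print(sum(len(r) for r in encoder.values()))   # 352
```

Because the caption tokens are present in both stages, the 32 latent slots do not have to encode what the caption already states, which is the intuition behind conditioning the tokenizer rather than only the generator.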

The TexTok methodology has three main components:

- Text-Conditioned Image Tokenization: Text captions are fed to both the tokenizer and the detokenizer, supplying semantic guidance from the start so that the latent space can represent visual details more fully.

- Vision Transformer (ViT) Backbone: Both the tokenizer (encoder) and the detokenizer (decoder) use a ViT backbone, which allows the number of latent tokens to be set flexibly and handles high-level semantics efficiently through those tokens.

- Integration with Diffusion Models: Generative models such as the Diffusion Transformer (DiT) are trained on TexTok's latent tokens. Because fewer tokens are needed, generation becomes faster while image quality is maintained or improved.
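
Why fewer tokens speed up a DiT generator can be estimated with a rough per-block FLOP count. The sketch assumes a standard transformer block (Q, K, V and output projections plus an MLP with 4× expansion) and ignores normalization, adaLN conditioning, and softmax costs. The width of 1152 matches DiT-XL, and 1024 tokens is what DiT-XL/2 sees on the 64×64 SD-VAE latent of a 512×512 image. It illustrates scaling only and is not meant to reproduce the paper's measured speedup.

```python
def block_flops(num_tokens: int, width: int, mlp_ratio: int = 4) -> int:
    """Approximate forward FLOPs of one transformer block (2 FLOPs per multiply-add)."""
    n, d = num_tokens, width
    projections = 2 * 4 * n * d * d          # Q, K, V and output projections
    attention = 2 * 2 * n * n * d            # QK^T scores and attention-weighted sum of V
    mlp = 2 * 2 * n * d * (mlp_ratio * d)    # two linear layers, d -> 4d -> d
    return projections + attention + mlp


WIDTH = 1152                          # DiT-XL hidden size
baseline = block_flops(1024, WIDTH)   # DiT-XL/2 on a 64x64x4 latent (512x512 image)
compact = block_flops(32, WIDTH)      # a 32-token latent sequence
print(f"per-block cost ratio: {baseline / compact:.2f}x")  # per-block cost ratio: 36.57x
```

With these assumptions the cost simplifies to 4·N·d·(6d + N), so when N is much smaller than 6d the projection and MLP terms dominate and cost falls roughly linearly with token count; the quadratic attention term matters more as sequences grow.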

Numerical Performance and Implications

TexTok yields significant improvements across several metrics. Replacing the tokenizer in DiT with TexTok gives a 93.5× inference speedup on ImageNet 512×512 while still outperforming the original DiT in Fréchet Inception Distance (FID), using only 32 tokens. With a vanilla DiT generator, TexTok reaches state-of-the-art FID scores of 1.46 on ImageNet 256×256 and 1.62 on ImageNet 512×512. These results indicate substantial gains in both reconstruction fidelity and generation efficiency, showing that TexTok works effectively in highly compressed latent spaces.
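
For reference, FID compares Gaussian fits to Inception-v3 features of real and generated images; lower is better. With $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ the feature means and covariances of the real and generated sets, the standard definition is:

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```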

TexTok also performs well on text-to-image generation, achieving an FID of 2.82 and a CLIP score of 29.23 on ImageNet 256×256, which suggests it can make effective use of readily available captions in image generation pipelines.
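
The CLIP score measures image-text agreement as a scaled cosine similarity between CLIP image and text embeddings, averaged over samples. The sketch assumes the common convention of scaling by 100 and clipping at zero (as in torchmetrics' CLIPScore); the paper's exact evaluation setup and CLIP model are not stated here, and the toy 3-dimensional vectors stand in for real embeddings.

```python
import math
from typing import List, Sequence, Tuple


def clip_score(image_emb: Sequence[float], text_emb: Sequence[float]) -> float:
    """100 * cosine similarity between one image and one caption embedding, clipped at 0."""
    dot = sum(a * b for a, b in zip(image_emb, text_emb))
    norm_image = math.sqrt(sum(a * a for a in image_emb))
    norm_text = math.sqrt(sum(b * b for b in text_emb))
    cosine = dot / (norm_image * norm_text)
    return max(100.0 * cosine, 0.0)


def mean_clip_score(pairs: List[Tuple[Sequence[float], Sequence[float]]]) -> float:
    """Average per-sample CLIP score over (image embedding, text embedding) pairs."""
    return sum(clip_score(img, txt) for img, txt in pairs) / len(pairs)


# Toy 3-D embeddings; real CLIP embeddings have hundreds of dimensions.
pairs = [([1.0, 0.0, 0.0], [1.0, 0.0, 0.0]),   # same direction -> 100
         ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])]   # orthogonal -> 0
print(mean_clip_score(pairs))  # 50.0
```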

Implications and Future Directions

In practice, TexTok is most attractive for generative systems constrained by compute and memory. Because it drops into existing frameworks like DiT as a replacement tokenizer and exploits high-level language semantics, it opens pathways for further work in image synthesis, particularly where a small set of latent tokens plus a caption must be expanded into a richly detailed image.

From a theoretical perspective, introducing language at the tokenization stage may open new research avenues at the intersection of computer vision and natural language processing. Models that efficiently combine the two domains could lead to more robust generative systems that understand and synthesize multimodal data more coherently.

In conclusion, TexTok represents a significant advance in tokenization for generative models, delivering both high reconstruction quality and lower computational cost. The paper strengthens current methods and motivates further exploration of language-guided visual processing.