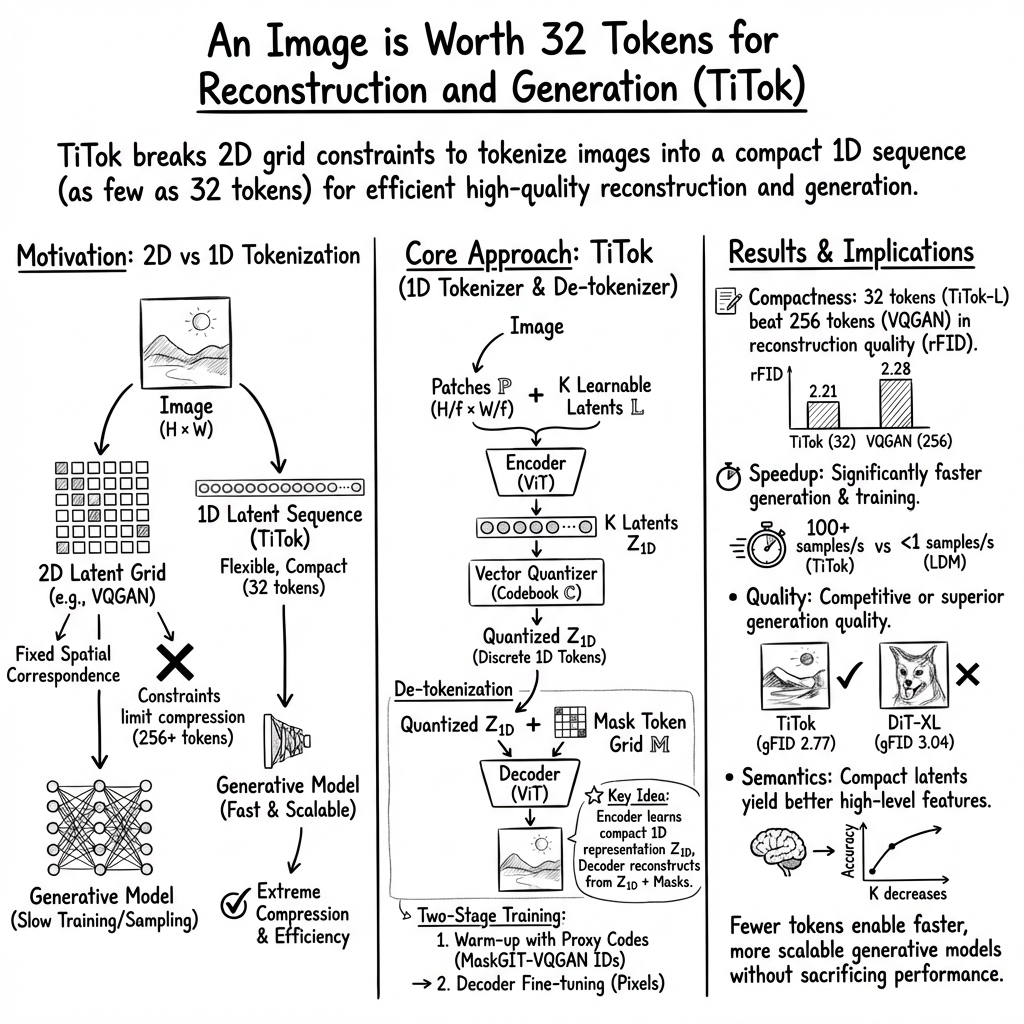

- The paper introduces TiTok, a novel tokenization framework that reduces 256×256 images to 32 tokens, significantly cutting computational complexity.

- It employs a Vision Transformer encoder-decoder architecture with vector quantization, reaching reconstruction and generation fidelity that matches or exceeds 2D grid-based tokenizers on the reported benchmarks.

- The approach delivers up to a 410× increase in generation speed compared to diffusion models, making high-resolution image synthesis more practical.

An Image is Worth 32 Tokens for Reconstruction and Generation

Abstract

The paper presents an approach to image tokenization that exploits the inherent redundancy in images to make high-resolution image synthesis more efficient. The authors introduce TiTok, a method that encodes an image as a short sequence of discrete tokens for reconstruction and generation. TiTok achieves competitive results while using far fewer tokens than prior methods: a typical 256×256 image is represented with just 32 tokens.

Introduction

The paper builds on recent advances in image generation, which have been driven largely by transformers and diffusion models. Existing tokenizers typically use 2D latent grids, in which each token is tied to a fixed region of the image, so they cannot fully exploit the redundancy between neighboring regions. TiTok instead encodes an image into a compact 1D sequence, giving a more efficient latent representation for both reconstruction and generation.
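To make the difference in sequence length concrete, here is a small arithmetic sketch. It assumes a 2D tokenizer with a downsampling factor of 16, an illustrative choice that yields a 16×16 grid for a 256×256 image; the function name `grid_token_count` is hypothetical and not from the paper.

```python
def grid_token_count(image_size: int, downsample: int) -> int:
    """Number of tokens in a square 2D latent grid."""
    if image_size % downsample != 0:
        raise ValueError("image_size must be divisible by downsample")
    side = image_size // downsample
    return side * side


# Assumption: the 2D baseline downsamples by a factor of 16 per side.
grid_tokens = grid_token_count(256, 16)  # 16 x 16 grid
titok_tokens = 32                        # 1D sequence length reported for TiTok
reduction = grid_tokens / titok_tokens

print(grid_tokens, titok_tokens, reduction)
```

Under this assumption the 1D sequence is 8 times shorter than the grid. The 1D length is a design choice that does not have to follow from the image resolution, whereas the grid size is fixed by the resolution and the downsampling factor.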

Methodology

The authors propose TiTok, a transformer-based framework built from a Vision Transformer (ViT) encoder, a ViT decoder, and a vector quantizer. An image is first split into patches, and the encoder processes them into a short series of latent tokens. These tokens are vector-quantized, and the decoder then reconstructs the image from the quantized tokens. Because every later stage operates on this short sequence, the tokenization reduces computational cost while exploiting image redundancy.
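The vector-quantization step can be illustrated with a toy nearest-neighbor lookup. This is a minimal sketch in plain Python, not the authors' implementation: the 2-dimensional latents, the three-entry codebook, and the function name `quantize` are all made up for illustration, and the ViT encoder and decoder are omitted entirely.

```python
import math
from typing import List, Sequence, Tuple


def quantize(
    latents: Sequence[Sequence[float]],
    codebook: Sequence[Sequence[float]],
) -> Tuple[List[int], List[List[float]]]:
    """Map each latent vector to the index of its nearest codebook entry."""
    indices: List[int] = []
    quantized: List[List[float]] = []
    for z in latents:
        # Nearest neighbor under Euclidean distance.
        best = min(range(len(codebook)), key=lambda k: math.dist(z, codebook[k]))
        indices.append(best)
        quantized.append(list(codebook[best]))
    return indices, quantized


# Hypothetical toy data: 3 latent tokens, codebook with 3 entries.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
latents = [[0.9, 1.2], [-0.2, 0.1], [-0.8, 0.7]]

indices, quantized = quantize(latents, codebook)
print(indices)    # [1, 0, 2]
print(quantized)  # [[1.0, 1.0], [0.0, 0.0], [-1.0, 1.0]]
```

The list of integer indices is the discrete token sequence: a generator only has to predict these indices, and the decoder looks up the corresponding codebook vectors to reconstruct the image.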

Results

ImageNet-1K 256×256 Generation Benchmark

TiTok models improve on baseline models in both reconstruction fidelity (rFID) and generation fidelity (gFID); for both metrics, lower is better. Key results:

- TiTok-L-32 achieves an rFID of 2.21 with just 32 tokens, compared to MaskGIT-VQGAN’s 2.28 with 256 tokens.

- When used within the MaskGIT generation framework, TiTok-L-32 reaches a gFID of 2.77, a substantial improvement over the MaskGIT baseline's gFID of 6.18.

- The paper reports up to a 410× increase in generation speed compared to diffusion models.
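Part of the reason shorter sequences help is a general property of standard transformers: self-attention compares every token with every other token, so its cost grows roughly with the square of the sequence length. The sketch below applies that rule of thumb to the token counts quoted above and computes the gFID gap from the reported numbers. It is back-of-the-envelope arithmetic, not a measurement from the paper; real speedups also depend on model size, the number of sampling steps, and hardware. The helper name `attention_pairs` is hypothetical.

```python
def attention_pairs(num_tokens: int) -> int:
    """Token-to-token comparisons in one full self-attention layer."""
    return num_tokens * num_tokens


pairs_2d = attention_pairs(256)  # 256-token sequence (MaskGIT-VQGAN)
pairs_1d = attention_pairs(32)   # 32-token sequence (TiTok-L-32)
pair_ratio = pairs_2d // pairs_1d

# Gap between the reported gFID values (lower is better).
gfid_gap = round(6.18 - 2.77, 2)

print(pairs_2d, pairs_1d, pair_ratio)  # 65536 1024 64
print(gfid_gap)                        # 3.41
```

An 8× reduction in tokens therefore corresponds to roughly 64× fewer attention comparisons per layer under this simplified model.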

ImageNet-1K 512×512 Generation Benchmark

For higher-resolution images, TiTok continues to demonstrate superior trade-offs between quality and efficiency:

- TiTok-L-64 achieves a gFID of 2.74, outperforming the state-of-the-art diffusion model DiT-XL/2 (gFID 3.04) while being substantially faster.

- The best-performing variant, TiTok-B-128, surpasses DiT-XL/2 by a wider margin in quality (2.13 gFID) while still generating images 74× faster.

Insights and Implications

The study has both theoretical and practical implications. It challenges the conventional structure of image tokenization by showing that a 1D token sequence is a viable alternative to the traditional 2D latent grid. Compressing many latent tokens into a much shorter sequence without sacrificing image quality could influence how later generative models are trained and run at inference time.

From a practical standpoint, the lower computational overhead, combined with gains in generation quality and speed, makes the approach attractive wherever efficient, high-quality image synthesis is needed. This efficiency matters more as computational and energy costs become increasingly significant considerations.

Future Developments

TiTok could serve as a foundation for further research into 1D tokenization in domains beyond image generation, such as video processing or multi-modal data synthesis. Different model formulations, training strategies, and more advanced quantization methods are all promising directions for future work. Refined guidance techniques and inference-stage optimizations could further improve the performance of such compact models across a wider set of generative tasks.

In closing, this paper contributes a notable methodological advancement, presenting TiTok as a compact, yet robust, approach to image tokenization that effectively leverages redundancy to achieve superior performance metrics and computational efficiency.
