Analysis of LLMs' Capability in Solving Proportional Analogies with Knowledge-Enhanced Prompting

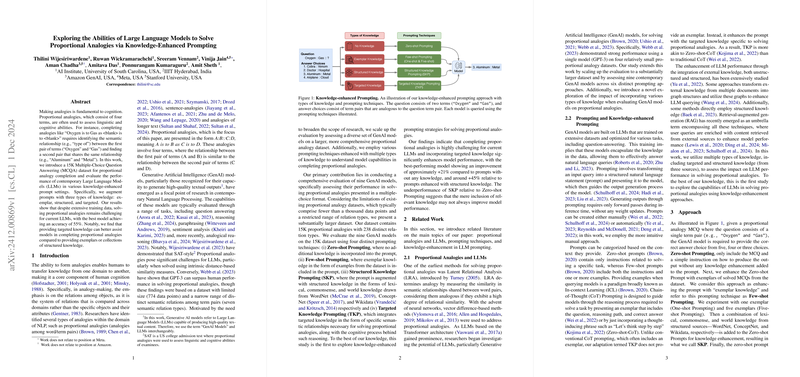

The paper "Exploring the Abilities of LLMs to Solve Proportional Analogies via Knowledge-Enhanced Prompting" evaluates how well LLMs solve proportional analogies, using a newly introduced dataset and several prompting techniques. It examines how different forms of knowledge, when incorporated into prompts, affect model performance.

Proportional analogies, written A:B::C:D and read "A is to B as C is to D", require identifying the semantic relationship that holds within the first pair of terms and applying it to the second. They are widely used to study cognitive and linguistic processes, and here they serve as a measure of the analogical reasoning capabilities of LLMs. Despite the large volume of data used to train LLMs, the paper finds that these models still struggle to solve proportional analogies.
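To make the A:B::C:D format concrete, the sketch below shows one way a multiple-choice analogy item could be represented. The class name `AnalogyMCQ`, its fields, and the example item are illustrative assumptions, not the schema or contents of the paper's dataset.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class AnalogyMCQ:
    """One proportional analogy A:B::C:? posed as a multiple-choice question.

    Hypothetical structure for illustration; the paper's actual schema may differ.
    """
    a: str
    b: str
    c: str
    options: Tuple[str, ...]  # candidate completions for D
    answer_index: int         # position of the correct option
    relation: str             # label for the relation shared by both pairs

    def stem(self) -> str:
        """Render the question stem with D left blank."""
        return f"{self.a} : {self.b} :: {self.c} : ?"

    def is_correct(self, choice_index: int) -> bool:
        """Check a chosen option index against the gold answer."""
        return choice_index == self.answer_index


# Illustrative item, not taken from the paper's dataset.
example = AnalogyMCQ(
    a="puppy", b="dog", c="kitten",
    options=("cat", "mouse", "bark", "fur"),
    answer_index=0,
    relation="young_of",
)
print(example.stem())
```

Solving the item means recognizing that the relation between "puppy" and "dog" also links "kitten" to exactly one of the options.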

The authors build a dataset of 15,000 multiple-choice questions (MCQs) covering 238 distinct semantic relationships. This dataset surpasses previous resources in both size and diversity, offering a more robust basis for evaluation. Nine generative AI models, both proprietary and open-source, were assessed under four prompting techniques: Zero-shot Prompting, Few-shot Prompting, Structured Knowledge Prompting (SKP), and Targeted Knowledge Prompting (TKP).
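The four prompting styles differ mainly in what is placed in front of the question. The sketch below is a minimal illustration of that idea, not the paper's prompt templates: the function names, the prompt wording, and the placeholder knowledge strings are all assumptions made for the example.

```python
from typing import Optional, Sequence, Tuple

LETTERS = "ABCDEFGH"


def format_question(stem: str, options: Sequence[str]) -> str:
    """Render the analogy stem and its lettered answer options."""
    lines = [f"Complete the analogy: {stem}"]
    lines += [f"{LETTERS[i]}. {opt}" for i, opt in enumerate(options)]
    lines.append("Answer:")
    return "\n".join(lines)


def build_prompt(
    stem: str,
    options: Sequence[str],
    exemplars: Sequence[Tuple[str, str]] = (),
    knowledge: Optional[str] = None,
) -> str:
    """Assemble a prompt: optional knowledge, optional solved exemplars, then the question."""
    parts = []
    if knowledge:
        parts.append(f"Knowledge: {knowledge}")
    for solved_question, solved_answer in exemplars:
        parts.append(f"{solved_question} {solved_answer}")
    parts.append(format_question(stem, options))
    return "\n\n".join(parts)


stem = "puppy : dog :: kitten : ?"
options = ["cat", "mouse", "bark", "fur"]
solved = (format_question("hot : cold :: up : ?", ["down", "sky", "warm", "high"]), "A")

# Zero-shot: the question alone.
zero_shot = build_prompt(stem, options)
# Few-shot: one or more solved exemplars precede the question.
few_shot = build_prompt(stem, options, exemplars=[solved])
# Structured knowledge (placeholder triples; the real knowledge source is not specified here).
structured = build_prompt(
    stem, options, knowledge="(puppy, RelatedTo, dog); (kitten, RelatedTo, pet)"
)
# Targeted knowledge (placeholder statement of the relation to apply).
targeted = build_prompt(
    stem, options, knowledge="The first term is the young of the second term."
)
print(targeted)
```

Keeping a single builder with optional parts makes it easy to hold the question text fixed while varying only the added knowledge, which is the comparison the evaluation depends on.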

A key finding is that targeted knowledge can significantly improve performance, raising accuracy by up to +21% compared to prompts without any knowledge enhancement. The best model, GPT-3.5-Turbo, reached a top accuracy of 55.25% with Targeted Knowledge Prompting. In contrast, structured knowledge did not consistently help, with SKP in some cases scoring below Zero-shot Prompting. This suggests that adding external knowledge is not beneficial in itself: the contextual relevance and specificity of the knowledge determine whether it improves performance.
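For multiple-choice evaluation, accuracy is the share of questions answered correctly, and the effect of a prompting style can be expressed as the difference between two accuracies. The sketch below computes that difference in percentage points, which is an assumption: the summary above does not state whether "+21%" is an absolute or a relative gain. The predictions are toy values, not results from the paper.

```python
from typing import Sequence


def accuracy(predictions: Sequence[int], gold: Sequence[int]) -> float:
    """Percentage of questions whose predicted option index matches the gold index."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("cannot compute accuracy on an empty set")
    correct = sum(p == g for p, g in zip(predictions, gold))
    return 100.0 * correct / len(gold)


def gain_in_points(enhanced: float, baseline: float) -> float:
    """Absolute difference between two accuracies, in percentage points."""
    return enhanced - baseline


# Toy data for eight questions (option indices); not results from the paper.
gold = [0, 2, 1, 3, 0, 1, 2, 3]
zero_shot_preds = [0, 1, 1, 0, 0, 2, 3, 1]
knowledge_preds = [0, 2, 1, 0, 0, 1, 3, 1]

baseline = accuracy(zero_shot_preds, gold)
enhanced = accuracy(knowledge_preds, gold)
print(baseline, enhanced, gain_in_points(enhanced, baseline))
```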

Despite these improvements, solving proportional analogies remains difficult for LLMs: even the best reported accuracy of 55.25% leaves nearly 45% of questions answered incorrectly, which points to limitations of current models in this form of reasoning. The differences in performance across prompting styles show that the type of knowledge added to a prompt, and how it is integrated, shapes how well a model reasons about the analogy.

These findings matter for the development of AI systems, especially for tasks that require complex reasoning akin to human cognition. As the field moves toward more sophisticated knowledge-grounded reasoning, the paper emphasizes the need for methods that account for the diversity of human-like reasoning patterns.

Future research may explore fine-tuning models specifically for analogy completion and developing automated prompting methods that are robust to semantic variability. Examining emergent properties of LLMs, possibly alongside novel architectures or training paradigms, could also help improve analogical reasoning.

In conclusion, the paper clarifies the current state of LLMs on analogy-based reasoning and the challenges that remain, and it examines in detail how useful different types of knowledge are when supplied to these models. Its broad experimental setup, spanning nine models and four prompting techniques, provides a basis for further work on tasks that require reasoning skills akin to human intelligence.