Insights into LLM Performance on MCQA Without Questions

The paper "Artifacts or Abduction: How Do LLMs Answer Multiple-Choice Questions Without the Question?" examines how large language models (LLMs) perform on multiple-choice question answering (MCQA), a widely used evaluation format, when the question itself is withheld. It asks whether models can still pick the correct answer from the choices alone and, if so, what makes that possible.

Key Findings

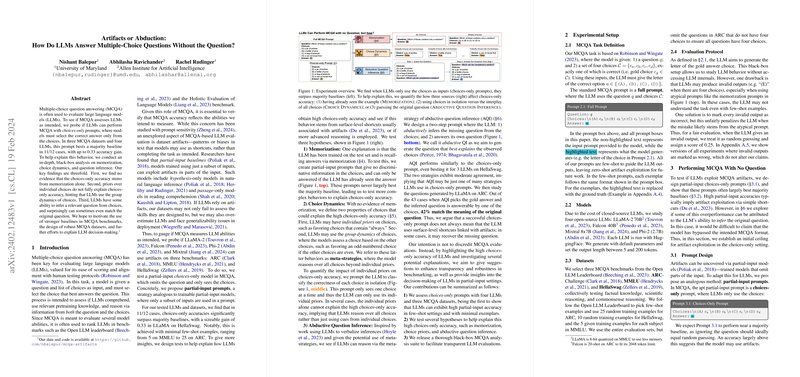

The researchers ran experiments on three MCQA datasets (ARC, MMLU, and HellaSwag) with four LLMs (LLaMA-2, Falcon, Phi-2, and Mixtral). With "choices-only" prompts, in which the model sees the answer options but not the question, accuracy exceeded the majority baseline in 11 of the 12 dataset-model combinations, with gains of up to 0.33. This suggests that LLMs can exploit information contained in the choices themselves when selecting an answer.
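To make the comparison concrete, here is a minimal Python sketch of a full prompt, a choices-only prompt, and the majority baseline against which choices-only accuracy is measured. The prompt wording, the function names, and the toy labels are illustrative assumptions, not the paper's actual templates or data.

```python
from collections import Counter

LETTERS = "ABCDEFGH"


def format_choices(choices):
    """Render answer options as lettered lines, e.g. '(A) option text'."""
    return "\n".join(f"({LETTERS[i]}) {c}" for i, c in enumerate(choices))


def full_prompt(question, choices):
    """Standard MCQA prompt: question plus answer options (assumed wording)."""
    return f"Question: {question}\nChoices:\n{format_choices(choices)}\nAnswer:"


def choices_only_prompt(choices):
    """Partial-input prompt: the question is withheld (assumed wording)."""
    return f"Choices:\n{format_choices(choices)}\nAnswer:"


def majority_baseline(gold_labels):
    """Accuracy of always predicting the most frequent gold label."""
    counts = Counter(gold_labels)
    return max(counts.values()) / len(gold_labels)


def accuracy(predictions, gold_labels):
    """Fraction of predictions that match the gold labels."""
    correct = sum(p == g for p, g in zip(predictions, gold_labels))
    return correct / len(gold_labels)


# Made-up toy labels, only to show how the gain over the baseline is computed.
toy_gold = ["A", "B", "B", "C"]
toy_predictions = ["A", "B", "C", "C"]  # hypothetical choices-only outputs

baseline = majority_baseline(toy_gold)
choices_only_acc = accuracy(toy_predictions, toy_gold)
gain = choices_only_acc - baseline
print(f"baseline={baseline:.2f} choices-only={choices_only_acc:.2f} gain={gain:.2f}")
```

A positive `gain` means the model does better without the question than a predictor that always guesses the most common answer label, which is the sense in which the reported improvements of up to 0.33 should be read.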

Three primary hypotheses were explored to explain these results:

- Memorization: The paper found no substantial evidence that high choices-only accuracy stems from memorization of previously seen examples alone. When given prompts stripped of discriminative information, the models did not perform notably well, which argues against memorization as the primary factor.

- Choice Dynamics: The paper examined how models use individual priors (a preference for certain words or patterns within a single option) and group dynamics (relationships among all of the options). Individual priors alone were not sufficient to account for the observed accuracy, which suggests that LLMs may reason over the options as a group when selecting an answer.

- Abductive Question Inference (AQI): LLMs showed some ability to infer a question from the choices, sometimes producing one that resembled the original, which indicates a capacity for abductive reasoning. When models generated a question and then answered it, accuracy was on par with the choices-only results and in some cases exceeded them.
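The abductive question inference idea from the last hypothesis can be sketched as a two-step pipeline: first ask the model for a question that fits the choices, then ask it to answer that inferred question. The sketch below is only an illustration under stated assumptions. The `generate` callable stands in for any text-completion model, `stub_generate` is a canned placeholder so the code runs without a model, and the prompt wording is hypothetical rather than taken from the paper.

```python
from typing import Callable, Sequence, Tuple

LETTERS = "ABCDEFGH"


def format_choices(choices: Sequence[str]) -> str:
    """Render answer options as lettered lines, e.g. '(A) option text'."""
    return "\n".join(f"({LETTERS[i]}) {c}" for i, c in enumerate(choices))


def inference_prompt(choices: Sequence[str]) -> str:
    """Step 1 prompt: ask for a question the choices could answer (assumed wording)."""
    return (
        f"Choices:\n{format_choices(choices)}\n"
        "Write a question these choices could answer.\nQuestion:"
    )


def answering_prompt(question: str, choices: Sequence[str]) -> str:
    """Step 2 prompt: answer the inferred question with the same choices."""
    return f"Question: {question}\nChoices:\n{format_choices(choices)}\nAnswer:"


def abductive_answer(
    choices: Sequence[str], generate: Callable[[str], str]
) -> Tuple[str, str]:
    """Infer a question from the choices, then answer that inferred question."""
    inferred_question = generate(inference_prompt(choices)).strip()
    answer = generate(answering_prompt(inferred_question, choices)).strip()
    return inferred_question, answer


def stub_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns canned text."""
    if prompt.startswith("Question:"):
        return " B"
    return " Which planet is known as the Red Planet?"


question, answer = abductive_answer(
    ["Venus", "Mars", "Jupiter", "Saturn"], stub_generate
)
print(question)
print(answer)
```

In a real experiment, `stub_generate` would be replaced by a call to the model under test, and the accuracy of `answer` would be compared with the choices-only accuracy for the same items.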

Implications and Future Directions

The results have significant implications for the design and evaluation of MCQA datasets and LLMs. Current benchmarks may inadvertently measure abilities they were not designed to measure, such as exploiting dataset artifacts, rather than comprehension or reasoning. This calls for stronger baselines and more robust dataset construction protocols that limit artifact exploitation.

Moreover, the findings highlight the importance of understanding how LLMs make decisions, especially in partial-input settings. The paper's methodology offers a starting point for investigating whether more sophisticated reasoning abilities can be elicited or detected in LLMs through other strategies.

Conclusion

Overall, this paper provides a nuanced assessment of how artifacts and reasoning interact in LLMs' performance on MCQA tasks. It emphasizes the need for transparency in LLM evaluations and prompts a reevaluation of methodologies so that they better match the capabilities they are meant to assess. Future research should continue to examine these dynamics, ideally leading to models with more consistent and interpretable performance across varied MCQA settings.