Accelerating Multimodal LLMs via Dynamic Visual-Token Exit

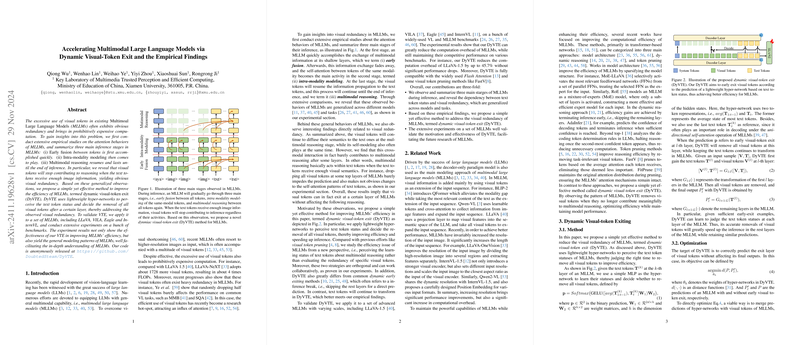

The paper investigates the efficiency limitations of Multimodal LLMs (MLLMs), focusing on the redundancy of visual tokens, and proposes a method termed Dynamic Visual-Token Exit (DyVTE) to address it. Its foundational observation is that, during inference, MLLMs generally go through three stages: early fusion, intra-modality modeling, and multimodal reasoning. The key empirical finding is that visual tokens often stop contributing to reasoning once the text tokens have absorbed sufficient visual information. The computation spent on these now-redundant visual tokens in later layers can therefore be saved by removing them.
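
One simple way to look for the point at which visual tokens stop mattering is to measure, layer by layer, how much attention the text tokens still pay to the visual tokens. The sketch below is a minimal NumPy diagnostic under stated assumptions, not the paper's own analysis. It assumes the attention weights are available as a `(num_layers, num_heads, seq_len, seq_len)` array and that the visual tokens occupy the first `num_visual` positions (real prompts usually place some system tokens before the image). The function name `text_to_visual_attention_share` is hypothetical.

```python
import numpy as np


def text_to_visual_attention_share(attn, num_visual):
    """Fraction of attention mass that text-token queries place on visual-token keys.

    attn: array of shape (num_layers, num_heads, seq_len, seq_len) whose rows
          are softmax-normalized attention weights.
    Assumption: visual tokens occupy positions [0, num_visual) and text tokens follow.
    Returns one value per layer, averaged over heads and text queries.
    """
    text_queries = attn[:, :, num_visual:, :]                     # (L, H, T, S)
    mass_on_visual = text_queries[..., :num_visual].sum(axis=-1)  # (L, H, T)
    return mass_on_visual.mean(axis=(1, 2))                       # (L,)


# Usage on synthetic attention weights (random logits, row-wise softmax).
rng = np.random.default_rng(0)
L, H, V, T = 4, 2, 6, 3
logits = rng.normal(size=(L, H, V + T, V + T))
attn = np.exp(logits) / np.exp(logits).sum(axis=-1, keepdims=True)
share = text_to_visual_attention_share(attn, num_visual=V)
print(share.shape)  # (4,)
```

A falling share in later layers would be consistent with the paper's observation, although attention mass is only a proxy for contribution; a more direct check is to drop the visual tokens at a given layer and see whether the model's answer changes.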

Methodological Insights

DyVTE uses lightweight hyper-networks that read the status of the text tokens and decide dynamically when the visual tokens should exit. This departs from conventional token-pruning techniques, which score the redundancy of individual tokens: DyVTE instead judges the overall learning status and removes the visual tokens as a whole. In effect, the hyper-networks predict the layer at which visual tokens can exit, a novel way to improve MLLM efficiency while largely preserving the model's predictive performance. The approach is validated on several MLLMs, including LLaVA, VILA, Eagle, and InternVL models.
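
The exit mechanism can be sketched as follows. This is a toy NumPy illustration, not the authors' implementation: the gate class `ExitHyperNet`, its two-layer MLP architecture, mean-pooling over text tokens, one gate per layer, and the 0.5 threshold are all assumptions made for the example, and the "layers" are per-token stand-ins rather than real decoder layers. What it does show is the design choice described above: the decision is made from the text tokens' state, and once it fires, every visual token leaves the sequence at once instead of being scored individually.

```python
import numpy as np


class ExitHyperNet:
    """Hypothetical lightweight gate: a 2-layer MLP that maps pooled text-token
    states to an exit probability. Architecture and pooling are assumptions."""

    def __init__(self, d_model, d_hidden, rng):
        self.w1 = rng.normal(scale=0.02, size=(d_model, d_hidden))
        self.w2 = rng.normal(scale=0.02, size=(d_hidden, 1))

    def __call__(self, text_states):
        pooled = text_states.mean(axis=0)       # (d_model,): mean over text tokens
        h = np.maximum(pooled @ self.w1, 0.0)   # ReLU hidden layer
        logit = (h @ self.w2)[0]
        return 1.0 / (1.0 + np.exp(-logit))     # sigmoid -> exit probability


def forward_with_visual_exit(layers, gates, hidden, num_visual, threshold=0.5):
    """Run `layers` over `hidden` (seq_len, d_model), whose first `num_visual`
    rows are visual tokens. After each layer, that layer's gate inspects the
    text tokens; once it fires, all visual tokens are dropped and the remaining
    layers process text tokens only. Returns (hidden, exit_layer), where
    exit_layer counts the layers that still saw visual tokens (None = no exit)."""
    exit_layer = None
    for i, (layer, gate) in enumerate(zip(layers, gates)):
        hidden = layer(hidden)
        if exit_layer is None and gate(hidden[num_visual:]) > threshold:
            hidden = hidden[num_visual:]  # visual tokens exit here
            exit_layer = i + 1
    return hidden, exit_layer


# Usage with random toy weights (the exit decision here is essentially arbitrary).
rng = np.random.default_rng(0)
d, V, T, L = 16, 8, 4, 6
weights = [rng.normal(scale=0.3, size=(d, d)) for _ in range(L)]
layers = [lambda h, W=W: np.tanh(h @ W) for W in weights]  # per-token stand-ins
gates = [ExitHyperNet(d, 8, rng) for _ in range(L)]
hidden = rng.normal(size=(V + T, d))
out, exit_layer = forward_with_visual_exit(layers, gates, hidden, num_visual=V)
print(out.shape, exit_layer)
```

In a trained system the gates would be fitted so that they fire only after the text tokens have gathered enough visual information; the random weights here only exercise the control flow.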

Experimental Validation

The experiments show substantial gains in computational efficiency while performance stays competitive across a variety of benchmarks. Notably, applying DyVTE to LLaVA-1.5 reduced computational overhead by up to 45.7% without a marked drop in accuracy. These results suggest that DyVTE is useful both as a practical efficiency technique and as a lens on how MLLMs make use of visual information.
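
To see why exiting visual tokens early saves so much, consider a rough FLOPs model of the language backbone. The sketch below assumes the LLaVA-1.5-7B setting (576 visual tokens from a 24 x 24 patch grid, and a Vicuna-7B decoder with 32 layers, hidden size 4096, and MLP width 11008), a single prefill pass with no KV cache, full (non-causal) attention cost, and an arbitrary 64-token text prompt. The helper names `decoder_flops` and `visual_exit_savings` are hypothetical, and the estimate is not meant to reproduce the paper's 45.7% figure, which depends on the exit layers actually chosen and on the paper's own accounting.

```python
def decoder_flops(seq_len, d_model, d_ff):
    """Rough forward FLOPs of one LLaMA-style decoder layer (2 FLOPs per MAC).

    Per token: Q, K, V, O projections (4 * d^2 MACs) plus a gated MLP
    (3 * d * d_ff MACs). Attention scores and the weighted sum add
    2 * n^2 * d MACs. Norms, softmax, and causal-mask savings are ignored.
    """
    per_token_macs = 4 * d_model * d_model + 3 * d_model * d_ff
    attn_macs = 2 * seq_len * seq_len * d_model
    return 2 * (seq_len * per_token_macs + attn_macs)


def visual_exit_savings(num_visual, num_text, exit_layer, num_layers=32,
                        d_model=4096, d_ff=11008):
    """Fraction of decoder FLOPs saved when visual tokens leave after
    `exit_layer` layers and the remaining layers process text tokens only."""
    full = decoder_flops(num_visual + num_text, d_model, d_ff)
    text_only = decoder_flops(num_text, d_model, d_ff)
    baseline = num_layers * full
    with_exit = exit_layer * full + (num_layers - exit_layer) * text_only
    return 1.0 - with_exit / baseline


# Savings for a few exit layers, assuming 576 visual and 64 text tokens.
for k in (2, 8, 16, 24, 32):
    print(k, round(visual_exit_savings(576, 64, k), 3))
```

Because visual tokens typically far outnumber text tokens, the savings are governed almost entirely by how early the exit happens, which is why predicting the exit layer well matters.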

Broader Implications

The formulation and implementation of DyVTE have both theoretical and practical implications. Theoretically, it supports the view that using tokens dynamically, rather than processing every token at every layer, is integral to building more efficient deep learning models, particularly in multimodal learning. Practically, by substantially lowering computational demands, DyVTE opens the door to real-time and resource-constrained deployments, where latency and energy consumption must be reduced without sacrificing performance.

Future Directions

The framework established by DyVTE invites further exploration into dynamic token management strategies, potentially extending beyond visual tokens to other token types or modalities. Additionally, future research could probe the integration of DyVTE with other optimization techniques to further push the boundaries of MLLM efficiency. Exploring the adaptability of DyVTE across a wider array of models and tasks will be crucial in assessing the breadth of its applicability.

In conclusion, the paper offers a rigorous examination of, and a novel solution to, visual-token redundancy in MLLMs. DyVTE is a promising method that both improves efficiency and deepens our understanding of how multimodal models process information. It represents a meaningful step toward more agile and sustainable large-scale AI systems, in line with current demands for scalability and efficiency in machine learning.