An Analysis of Attamba: Attending To Multi-Token States

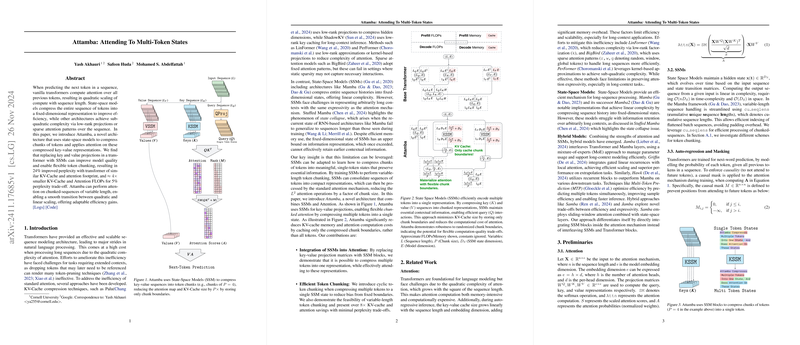

The paper "Attamba: Attending To Multi-Token States" introduces an architecture that targets a known limitation of transformer models: handling long sequences efficiently. Transformers are strong sequence models, but the cost of attention scales quadratically with sequence length, which becomes a bottleneck on long inputs. Attamba addresses this by using State-Space Models (SSMs) to compress multiple tokens into single representations, which reduces computation and memory while largely preserving model quality.

Key Contributions

- Integration of SSMs into Attention: The core innovation in Attamba lies in replacing traditional key-value projection matrices with SSMs. This substitution allows multiple tokens to be compressed into a single token representation, reducing computational overhead while attention still operates effectively on the compressed representations.

- Efficient Token Chunking: The architecture introduces cyclic and variable-length token chunking, which reduces biases from fixed chunk boundaries. By compressing sequences into token chunks, Attamba achieves over 8× KV-cache and attention savings with minimal impact on perplexity.

- Improved Computational Complexity: Attamba offers a scalable transition between quadratic and linear complexity, with the chunk size acting as the knob: small chunks behave like standard attention, while large chunks approach linear cost.
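The compress-then-attend idea in the contributions above can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not the paper's implementation: `scan_states` is a hypothetical fixed-decay linear recurrence standing in for a learned SSM block, the helper names `cyclic_boundaries`, `random_boundaries`, and `chunked_attention` are invented here, and the choice to let each query also see the running state of its own partial chunk is an assumption made so that early positions always have something to attend to.

```python
import math
import random


def scan_states(xs, decay=0.9):
    """Toy recurrence h_t = decay * h_{t-1} + (1 - decay) * x_t.

    Hypothetical stand-in for an SSM: each state summarizes the tokens so far.
    """
    state = [0.0] * len(xs[0])
    states = []
    for x in xs:
        state = [decay * s + (1.0 - decay) * v for s, v in zip(state, x)]
        states.append(state)
    return states


def cyclic_boundaries(seq_len, chunk, offset=0):
    """Chunk-end positions for fixed-size chunks shifted by `offset`."""
    ends = [t for t in range(seq_len) if (t + 1) % chunk == offset % chunk]
    if not ends or ends[-1] != seq_len - 1:
        ends.append(seq_len - 1)  # always close the trailing chunk
    return ends


def random_boundaries(seq_len, min_len, max_len, seed=0):
    """Chunk-end positions for variable-length chunks (requires min_len >= 1)."""
    rng = random.Random(seed)
    ends, t = [], -1
    while t < seq_len - 1:
        t = min(t + rng.randint(min_len, max_len), seq_len - 1)
        ends.append(t)
    return ends


def attend(query, keys, values):
    """Scaled dot-product attention for a single query vector."""
    scale = 1.0 / math.sqrt(len(query))
    scores = [scale * sum(q * k for q, k in zip(query, key)) for key in keys]
    peak = max(scores)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(dim)]


def chunked_attention(queries, tokens_k, tokens_v, ends):
    """Causal attention over chunk-end states instead of every token."""
    k_states = scan_states(tokens_k)
    v_states = scan_states(tokens_v)
    outputs = []
    for t, query in enumerate(queries):
        visible = [b for b in ends if b <= t]
        if not visible or visible[-1] != t:
            visible.append(t)  # assumption: current partial-chunk state is visible
        outputs.append(attend(query,
                              [k_states[b] for b in visible],
                              [v_states[b] for b in visible]))
    return outputs


tokens = [[float(i), 1.0] for i in range(8)]
ends = cyclic_boundaries(len(tokens), chunk=4)
outputs = chunked_attention(tokens, tokens, tokens, ends)
print(ends, len(outputs))
```

In this sketch a decoder would only need to cache the states at the positions in `ends` plus one running state, rather than one key and value per token. Swapping `cyclic_boundaries` for `random_boundaries` changes which positions are kept without changing the attention code.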

Numerical Insights and Results

Attamba demonstrates notable efficiency improvements: approximately 4× smaller KV-cache and attention FLOPs for a 5% increase in perplexity. When compared with transformers restricted to a similar KV-cache and attention footprint, it achieves better perplexity. Attamba also remains robust to randomized chunk boundaries, which indicates flexibility and potential scalability for practical applications.
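To make the scaling behind these savings concrete, the following back-of-the-envelope sketch counts cached states and query-key score computations as a function of chunk size. It rests on simplifying assumptions that are not taken from the paper: one cached state per chunk, every query scored against at most one state per chunk, and the linear-time cost of the SSM itself ignored. The function names are hypothetical.

```python
def kv_cache_entries(seq_len, chunk):
    """States kept when one key/value state is stored per chunk."""
    return -(-seq_len // chunk)  # ceiling division


def attention_score_count(seq_len, chunk):
    """Upper bound on query-key dot products: each query vs. one state per chunk."""
    return seq_len * kv_cache_entries(seq_len, chunk)


seq_len = 4096
for chunk in (1, 4, 8, seq_len):
    print(chunk,
          kv_cache_entries(seq_len, chunk),
          attention_score_count(seq_len, chunk))
```

With a chunk size of 1 the count equals the quadratic cost of standard attention, and with a single chunk spanning the whole sequence it grows linearly with sequence length. Intermediate chunk sizes such as 4 or 8 shrink both the cache and the score count by the same factor under these assumptions.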

In comparative experiments against baseline transformer architectures and models like Mamba, minGRU, and Hawk, Attamba showed superior performance, especially under matched memory or compute budgets. This was particularly evident against transformers with a reduced KV-cache size, where Attamba kept its efficiency advantage at increased sequence lengths without shrinking the attention model dimensions or sacrificing quality.

Theoretical and Practical Implications

Attamba's integration of SSMs into the attention mechanism improves both computational efficiency and memory requirements. The ability to compress long sequences and attend over them with only a small loss in modeling quality is a valuable advance in long-context modeling, with significant implications for the scalability of LLMs in resource-constrained environments.

Theoretical implications include the potential for hybrid architectures that combine attention mechanisms with SSMs, paving the way for more nuanced explorations of sequence compression in language modeling tasks. Practically, Attamba offers pathways to optimize existing NLP systems, especially in applications requiring extensive context processing.

Future Directions

The promising results of Attamba suggest several avenues for future research. Key considerations include extending evaluations to diverse long-context benchmarks and exploring more sophisticated chunk boundary selection strategies informed by token importance algorithms. Testing Attamba's efficacy on larger scale models and complex NLP tasks could provide insights into its generalizability and practical deployment scenarios.

Furthermore, investigating fine-tuning methodologies and chunk boundary robustness in pre-trained models might yield additional performance gains. As AI continues to evolve, models like Attamba represent steps toward more efficient and flexible language processing frameworks. The interplay between memory-efficient architectures and effective attention mechanisms is likely to remain a focal challenge, with Attamba contributing significant knowledge to this ongoing dialogue.