Analysis of Neurolinguistic Evaluation of LLMs

The paper "LLMs as Neurolinguistic Subjects: Identifying Internal Representations for Form and Meaning" examines how LLMs represent linguistic form and meaning across different languages. The authors introduce a paradigm for evaluating LLMs from both a psycholinguistic and a neurolinguistic angle, using a method that combines minimal pairs with diagnostic probing.

Distinctive Methodological Approaches

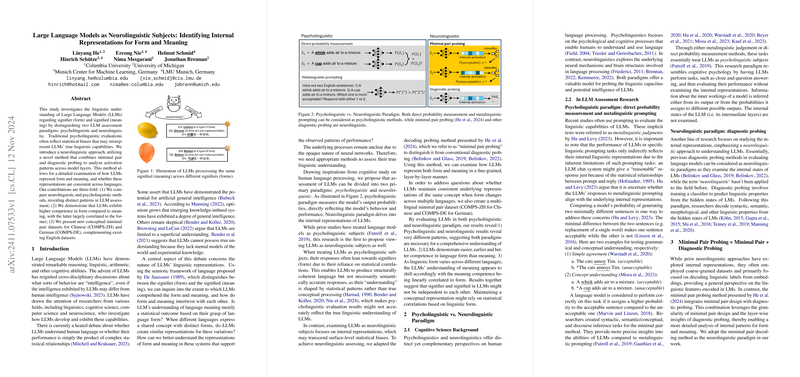

The authors distinguish between two evaluation paradigms: psycholinguistic and neurolinguistic. The psycholinguistic approach analyzes the model's output probabilities, in line with traditional performance evaluations that treat the model as a black box. In contrast, the neurolinguistic approach looks inside the model and examines how linguistic form and meaning are represented at each layer. By combining minimal pairs with diagnostic probing, the paper attempts to decode the linguistic structure encoded in the models' layers, giving a layer-by-layer view of what the models capture.
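The contrast between the two paradigms can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: the token log-probabilities and layer activations are made-up toy values, the function names are hypothetical, and a nearest-centroid classifier stands in for whatever probe the paper actually trains.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Example = Tuple[Vector, int]  # (activation vector, label); 1 = acceptable, 0 = unacceptable


def psycholinguistic_accuracy(
    pairs: Sequence[Tuple[Sequence[float], Sequence[float]]]
) -> float:
    """Black-box view: a pair counts as correct when the acceptable sentence
    receives the higher total log-probability (sum of token log-probs)."""
    hits = sum(1 for good, bad in pairs if sum(good) > sum(bad))
    return hits / len(pairs)


def centroid(vectors: Sequence[Vector]) -> List[float]:
    """Component-wise mean of a non-empty list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def squared_distance(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def probe_accuracy(train: Sequence[Example], test: Sequence[Example]) -> float:
    """Internal view: fit a nearest-centroid probe (an assumed stand-in for a
    trained diagnostic classifier) on one layer and score it on held-out items."""
    pos = centroid([v for v, y in train if y == 1])
    neg = centroid([v for v, y in train if y == 0])
    hits = 0
    for v, y in test:
        predicted = 1 if squared_distance(v, pos) < squared_distance(v, neg) else 0
        hits += int(predicted == y)
    return hits / len(test)


# Hypothetical token log-probs for (acceptable, unacceptable) sentence pairs.
pairs = [
    ([-1.2, -0.8, -2.0], [-1.2, -0.8, -4.5]),
    ([-0.5, -3.0], [-0.5, -2.1]),
    ([-2.2, -1.1], [-2.9, -1.6]),
]

# Hypothetical 2-d activations per layer: (training examples, held-out examples).
layers = {
    0: (
        [([0.0, 0.0], 1), ([1.0, 1.0], 1), ([0.0, 1.0], 0), ([1.0, 0.0], 0)],
        [([0.1, 0.1], 1), ([0.9, 0.1], 0)],
    ),
    1: (
        [([1.0, 0.2], 1), ([0.8, 0.0], 1), ([-1.0, 0.1], 0), ([-0.8, -0.1], 0)],
        [([0.7, 0.1], 1), ([-0.6, 0.0], 0)],
    ),
}

per_layer = {layer: probe_accuracy(train, test) for layer, (train, test) in layers.items()}

print("psycholinguistic accuracy:", psycholinguistic_accuracy(pairs))
print("probe accuracy per layer:", per_layer)
```

The first function needs only the model's outputs, while the second needs access to hidden states at each layer. That difference in access is what separates the two paradigms.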

Empirical Insights and Numerical Results

The paper reports several key empirical results, supported by numerical evidence. It finds that LLMs are generally more competent at form than at meaning, a pattern that holds consistently across English, German, and Chinese. In other words, the models handle linguistic structure more reliably than semantic content, a distinction that matters when judging model "intelligence". In the neurolinguistic assessments, models such as Llama2 and Qwen captured form well but struggled with conceptual understanding, especially when concepts were expressed in different linguistic forms.

The paper also measures form and meaning competence through two layer-wise statistics, the feature learning saturation layer and the maximum layer, and finds that the learning of form converges at earlier layers than semantic encoding. This ordering points to a link between the models' data-driven grasp of form and their later semantic encoding.
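A minimal sketch of how the two layer statistics could be computed from per-layer probe scores is shown below. The scores are hypothetical toy values, the function name is invented for illustration, and defining saturation as the first layer that reaches 95% of the peak score is an assumption made here, not a detail taken from this summary.

```python
from typing import Sequence, Tuple


def max_and_saturation_layer(
    scores: Sequence[float], fraction: float = 0.95
) -> Tuple[int, int]:
    """Return (maximum layer, saturation layer) for per-layer probe scores.

    maximum layer:    index of the highest score (first one on ties).
    saturation layer: first index whose score reaches `fraction` of the peak
                      (the 0.95 default is an assumed threshold).
    """
    if not scores:
        raise ValueError("scores must not be empty")
    peak = max(scores)
    max_layer = list(scores).index(peak)
    saturation_layer = next(
        i for i, score in enumerate(scores) if score >= fraction * peak
    )
    return max_layer, saturation_layer


# Hypothetical probe accuracies for a 5-layer model (layer 0 first).
form_scores = [0.55, 0.80, 0.92, 0.94, 0.93]
meaning_scores = [0.50, 0.58, 0.66, 0.74, 0.78]

form_max, form_sat = max_and_saturation_layer(form_scores)
meaning_max, meaning_sat = max_and_saturation_layer(meaning_scores)

print("form:    max layer", form_max, "saturation layer", form_sat)
print("meaning: max layer", meaning_max, "saturation layer", meaning_sat)
```

With these toy numbers, form saturates at layer 2 while meaning does not saturate until layer 4, which mirrors the direction of the reported finding without reproducing any of the paper's actual values.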

Theoretical and Practical Implications

Theoretically, these findings suggest that LLMs treat language as a statistical output rather than as an intrinsically understood system, which points to a divergence from human language acquisition. Unlike human cognitive development, where semantic bootstrapping is evident, LLMs prioritize syntactic structure over conceptual understanding. This highlights a limitation in the pursuit of artificial comprehension that goes beyond statistical correlations.

Practically, the results remind developers and practitioners in AI and NLP of the gap between form and meaning in LLM performance. While these models show promise in replicating human linguistic patterns on the surface, their limitations in conceptual encoding caution against over-reliance on them in contexts that require true semantic understanding, such as nuanced translation or sophisticated interactive AI applications.

Future Prospects

Future research could extend this method to a wider range of language pairs, which could inform more globally inclusive LLM training. Furthermore, solving the symbol grounding problem, that is, bridging the gap between statistical LLMs and context-dependent human language comprehension, remains a key challenge for advancing LLM capabilities toward more authentic forms of intelligence. Integrating real-world context and experiential learning into LLM training could be a significant step toward this goal.

In summary, while LLMs show an advanced surface-level command of linguistic form, significant advances are needed before they comprehend meaning in a way that goes beyond statistics. The research calls for computational linguistic models to move beyond being sophisticated statistical tools and toward comprehensive, contextually rich language processing systems.