Insights into Rationale-Guided Retrieval Augmented Generation for Medical Question Answering

The paper "Rationale-Guided Retrieval Augmented Generation for Medical Question Answering" presents RAG$^2$, a framework that aims to make retrieval-augmented generation (RAG) more reliable in biomedical contexts. It targets known weaknesses of large language models (LLMs) in domains that demand high accuracy, such as medicine, where hallucinations and outdated knowledge can compromise outcomes.

Core Innovations in RAG$^2$

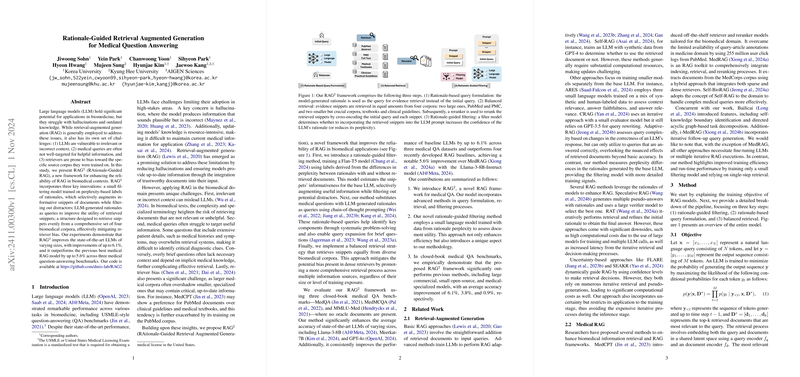

RAG$^2$ introduces three innovations aimed at improving the reliability and contextual relevance of medical question-answering systems:

- Rationale-Guided Filtering: The framework introduces a filtering model trained on perplexity-based labels. The model estimates how informative each retrieved snippet is and filters out irrelevant or distracting ones. This matters in medical applications, where incorrect information can directly affect the decisions healthcare professionals make.

- Rationale-Based Queries: Instead of using the original medical question as the retrieval query, RAG$^2$ uses an LLM-generated rationale. This expands overly brief queries and narrows verbose ones, which improves the retrieval of pertinent information. The resulting searches are more focused, and their wording aligns more closely with the domain-specific language of medical texts.

- Balanced Retrieval Strategy: To mitigate corpus bias, RAG$^2$ retrieves an equal number of snippets from each of four biomedical corpora: PubMed, PMC, textbooks, and clinical guidelines. This draws on all sources evenly and offsets the bias that arises when larger corpora dominate the results.
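The filtering labels and the balanced retrieval step can be illustrated with a minimal Python sketch. It is not the paper's implementation. The function names, the `min_drop` threshold, the rule "label a snippet as helpful when it lowers perplexity", and the `(text, score)` hit format are all assumptions made for illustration. The token log-probabilities are assumed to come from an LLM scoring the same target text with and without the snippet in its context.

```python
import math
from typing import Dict, List, Sequence, Tuple


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity from per-token natural-log probabilities: exp(-mean(logp))."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


def perplexity_label(
    logprobs_without: Sequence[float],
    logprobs_with: Sequence[float],
    min_drop: float = 0.0,  # hypothetical threshold, not taken from the paper
) -> int:
    """Return 1 if adding the snippet lowers perplexity by more than min_drop, else 0."""
    drop = perplexity(logprobs_without) - perplexity(logprobs_with)
    return 1 if drop > min_drop else 0


def balanced_retrieve(
    ranked_by_corpus: Dict[str, List[Tuple[str, float]]],
    total_k: int,
) -> List[Tuple[str, str, float]]:
    """Take the same number of top-scoring snippets from every corpus.

    ranked_by_corpus maps a corpus name to (snippet_text, score) pairs,
    where a higher score means a better match (an assumed convention).
    """
    if not ranked_by_corpus:
        return []
    per_corpus = total_k // len(ranked_by_corpus)
    selected: List[Tuple[str, str, float]] = []
    for corpus, hits in ranked_by_corpus.items():
        top = sorted(hits, key=lambda hit: hit[1], reverse=True)[:per_corpus]
        selected.extend((corpus, text, score) for text, score in top)
    return selected


if __name__ == "__main__":
    # Toy data: four corpora with five scored snippets each.
    corpora = ["pubmed", "pmc", "textbooks", "guidelines"]
    ranked = {c: [(f"{c}-{i}", float(i)) for i in range(5)] for c in corpora}
    for corpus, text, score in balanced_retrieve(ranked, total_k=8):
        print(corpus, text, score)
```

Because the per-corpus quota uses integer division, a `total_k` that is not a multiple of the number of corpora yields fewer than `total_k` snippets. A real system would need to decide how to spend the remainder.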

Performance and Implications

RAG$^2$ improves performance across several benchmarks. It achieves up to a 6.1% improvement over state-of-the-art LLMs and outperforms the previous best medical RAG models by up to 5.6% on medical question-answering benchmarks such as MedQA, MedMCQA, and MMLU-Med. These gains underscore the potential of RAG$^2$ to make automated medical response systems more reliable in high-stakes settings.

The paper's contributions extend beyond immediate gains in medical QA: they suggest a way to reduce dependence on model retraining and so handle outdated knowledge more efficiently. By refining how LLMs retrieve and process information through rationale-driven methods, RAG$^2$ offers a scalable approach that could adapt to evolving medical data without frequent, intensive retraining.

Theoretical and Practical Implications

Theoretically, this research enriches the understanding of retrieval dynamics in LLMs and highlights the importance of tailored input transformations and filtering mechanisms. Practically, for stakeholders in biomedical AI, RAG$^2$ points toward integrating AI systems more effectively into clinical workflows, where accuracy and trustworthiness are paramount.

Future Directions

While RAG$^2$ presents substantial advancements, its adaptability to other domains remains an open and promising area for future research. Because specialized fields pose different challenges, further studies could tailor the retrieval and filtering strategies developed here to the requirements of other complex knowledge domains.

In conclusion, this paper lays a foundation for improving the application of LLMs in biomedicine through targeted changes to retrieval-augmented generation. It addresses both procedural and theoretical challenges, supporting more reliable and contextually accurate AI systems for medical information technology.