Overview of "Can LLMs Replace Programmers? Says 'Not Yet'"

The research paper "Can LLMs Replace Programmers? Says 'Not Yet'" examines how well LLMs handle code generation, asking whether they can carry out software development tasks the way human programmers do. Current LLMs achieve high accuracy on existing code generation benchmarks such as HumanEval and MBPP. The authors argue that these scores overstate real-world ability because the benchmarks and their evaluation techniques have significant shortcomings, and that on realistic programming tasks the models perform far worse.

Limitations of Current Benchmarks

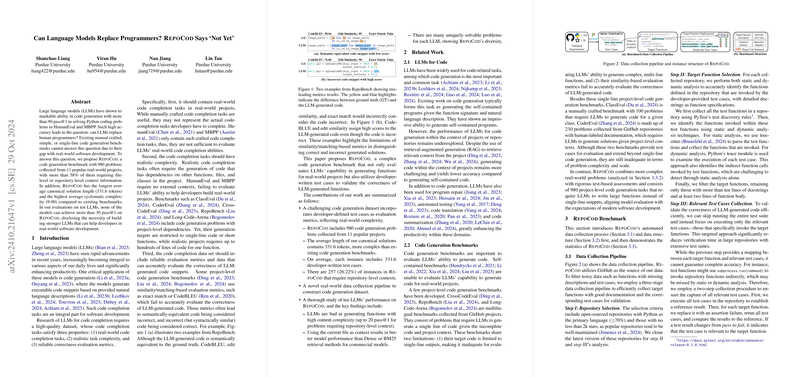

The authors argue that existing benchmarks do not capture the intricacies of real software development. Their problems are often manually crafted or overly simple, whereas real-world projects require multi-file, repository-level context and implement highly complex functionality. A further problem is that many benchmarks rely on similarity-based or exact-match metrics, which can lead to misleading conclusions about code correctness: a correct solution that differs textually from the reference is penalized, while an incorrect one that merely looks similar may be rewarded.
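
A minimal Python sketch of why exact matching can mislead. The reference solution, candidate, function name and test cases are all hypothetical. The candidate differs textually from the reference but behaves identically, so an exact-match metric rejects it while an execution-based check accepts it.

```python
# Hypothetical reference solution and a functionally equivalent model output.
REFERENCE = """
def total_even(xs):
    return sum(x for x in xs if x % 2 == 0)
"""

CANDIDATE = """
def total_even(xs):
    result = 0
    for x in xs:
        if x % 2 == 0:
            result += x
    return result
"""

# Hypothetical test cases: (input, expected output).
TESTS = [([], 0), ([1, 2, 3, 4], 6), ([-2, 5, 7], -2)]


def exact_match(reference: str, candidate: str) -> bool:
    """Text-based metric: True only if the sources are identical (ignoring outer whitespace)."""
    return reference.strip() == candidate.strip()


def passes_tests(candidate: str, func_name: str, tests) -> bool:
    """Execution-based metric: define the candidate function, then check every test case."""
    namespace = {}
    exec(candidate, namespace)  # acceptable for trusted toy code; real harnesses sandbox untrusted code
    func = namespace[func_name]
    return all(func(inp) == expected for inp, expected in tests)


print("exact match:", exact_match(REFERENCE, CANDIDATE))  # → exact match: False
print("passes tests:", passes_tests(CANDIDATE, "total_even", TESTS))  # → passes tests: True
```

Test-based evaluation of this kind is what a benchmark backed by developer-written tests relies on; the cost is that every task needs runnable tests and an execution environment.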

Introducing a Robust Benchmark

To address these limitations, the authors present a new benchmark of 980 problems drawn from 11 widely used software repositories. It emphasizes complexity and realism: more than half of the problems require file-level or repository-level context, the canonical solutions average 331.6 tokens, and their average cyclomatic complexity is 9.00. Correctness is assessed by running the generated code rather than comparing text: each task comes with an average of 313.5 developer-written test cases, which gives a far more rigorous check of LLM-generated code than similarity-based metrics.
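
Cyclomatic complexity (McCabe) counts the linearly independent paths through a function's control-flow graph; for a single function it equals the number of decision points plus one. The sketch below approximates it with Python's standard `ast` module. The source does not say which tool the authors used, and dedicated tools such as `radon` or `mccabe` count some constructs differently, so this illustrates the metric rather than reproducing the reported 9.00. The `classify` snippet is hypothetical.

```python
import ast

# Node types that each add one independent path (a simplified McCabe count).
DECISION_NODES = (ast.If, ast.For, ast.AsyncFor, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(source: str) -> int:
    """Approximate McCabe complexity of a single function's source: decision points + 1."""
    tree = ast.parse(source)
    complexity = 1  # straight-line code has exactly one path
    for node in ast.walk(tree):
        if isinstance(node, DECISION_NODES):
            complexity += 1  # `elif` appears as a nested ast.If, so it is counted here too
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` adds one branch per extra operand
            complexity += len(node.values) - 1
        elif isinstance(node, ast.comprehension):
            # each `for` clause in a comprehension, plus each of its `if` filters
            complexity += 1 + len(node.ifs)
    return complexity


SNIPPET = """
def classify(n):
    if n < 0 and n % 2 == 0:
        return "negative even"
    elif n < 0:
        return "negative odd"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "prime or small"
"""

print(cyclomatic_complexity(SNIPPET))  # → 6
```

Here the count is 1 (base) + 1 (`if`) + 1 (`and`) + 1 (`elif`) + 1 (`for`) + 1 (inner `if`). A benchmark-wide average of 9.00 therefore implies solutions with noticeably more branching than this toy function.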

Evaluations and Findings

Evaluating ten contemporary LLMs on the benchmark, the paper finds that none exceeds a pass@1 rate of 30%, showing how far current models remain from handling real-world software development tasks. The authors note that although LLMs can produce usable functions when given sufficient context, their effectiveness drops sharply as problem complexity increases, especially on tasks that require an understanding of the whole project.
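
pass@1 estimates the probability that a single generated solution passes all of a problem's tests, averaged over problems. The Python sketch below uses the unbiased pass@k estimator introduced alongside the HumanEval benchmark. The source does not state how many samples per problem the authors drew, and the per-problem results here are invented for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples drawn, c of them pass all tests."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one passing sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results, k: int) -> float:
    """Average pass@k over problems; `results` is a list of (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for four problems with one sample each (e.g. greedy decoding):
# (samples drawn, samples passing every test case)
greedy = [(1, 1), (1, 0), (1, 0), (1, 0)]
print(benchmark_pass_at_k(greedy, k=1))  # → 0.25

# With several samples per problem, pass@1 reduces to the mean of c / n.
sampled = [(10, 3), (10, 0)]
print(f"{benchmark_pass_at_k(sampled, k=1):.2f}")  # → 0.15
```

With one sample per problem, pass@1 is simply the share of problems solved, so a score below 30% means that fewer than three in ten tasks were solved.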

Implications for LLM Development

The paper underscores the need for LLM architectures and training methods that can handle project-level code dependencies. Better retrieval, such as the dense vector retrieval evaluated in the paper, may help narrow the performance gap, and increasing the capacity and efficiency of context processing in LLMs also warrants further study.
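
Dense retrieval embeds repository code and the task description as vectors and adds the most similar snippets to the prompt. The Python sketch below shows only those mechanics, under explicit assumptions: `embed` is a hypothetical stand-in (a hashed bag-of-tokens vector) for a learned embedding model, and the repository snippets and task text are invented. It is not the retriever used in the paper.

```python
import math
import re
import zlib

DIM = 64  # width of the toy vectors; learned embedding models typically use far more dimensions


def embed(text: str) -> list[float]:
    """Hypothetical stand-in for a learned embedding model.

    Hashes each lowercase alphabetic token into one of DIM buckets (crc32 keeps
    the result deterministic across runs), then L2-normalizes the vector.
    """
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z]+", text.lower()):
        vec[zlib.crc32(token.encode()) % DIM] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0  # avoid dividing by zero on empty text
    return [v / norm for v in vec]


def retrieve(query: str, snippets: list[str], top_k: int = 2) -> list[str]:
    """Rank snippets by cosine similarity to the query (dot product of unit vectors)."""
    q = embed(query)
    scored = [(sum(a * b for a, b in zip(q, embed(s))), s) for s in snippets]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for _, s in scored[:top_k]]


# Hypothetical repository snippets and task description.
repo = [
    "def load_config(path): return json.load(open(path))",
    "def save_config(config, path): json.dump(config, open(path, 'w'))",
    "class HttpClient:\n    def get(self, url): ...",
]
task = "Implement reload_config(path) using the existing config loading helper"

# Retrieved snippets become extra context placed ahead of the task in the prompt.
context = retrieve(task, repo, top_k=2)
prompt = "\n\n".join(context + ["# Task: " + task])
print(prompt)
```

Replacing `embed` with a real embedding model changes only that one function; ranking and prompt assembly stay the same. With this toy embedding the ranking depends on token overlap and hash collisions, so its results say nothing about how well learned retrievers perform.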

Future Directions

The benchmark gives future research a demanding testbed for extending LLM capabilities, particularly toward models that can work effectively within complex, interdependent software projects. Improved retrieval techniques and better handling of real-world code dependencies could yield significant gains in LLM performance.

Conclusion

The paper contributes a benchmark that both challenges existing models and sets a clear target for improving LLMs in software development, providing a solid basis for future research on automated programming tools. Current LLMs are far from replacing human programmers, but revising their architectures in light of what this benchmark reveals may lead to more capable code-generation agents.