Efficient Knowledge Caching for Retrieval-Augmented Generation with RAGCache

Introduction to RAGCache

Retrieval-Augmented Generation (RAG) improves the output quality of large language models (LLMs) by integrating external knowledge bases. The gain comes at a cost: retrieved documents lengthen the input sequence, which increases memory and computation overhead. These costs motivate mechanisms that manage resources more efficiently in RAG systems.

We propose RAGCache, a multilevel dynamic caching system that addresses these issues. RAGCache organizes and manages the intermediate states of retrieved knowledge, improving the efficiency of both the retrieval and generation phases of a RAG system.

System Characterization and Inspiration

RAGCache is motivated by a detailed system characterization, which identifies the LLM generation step as the primary performance bottleneck, driven by long input sequences. Augmenting each user request with external documents inflates both memory and computation demands.

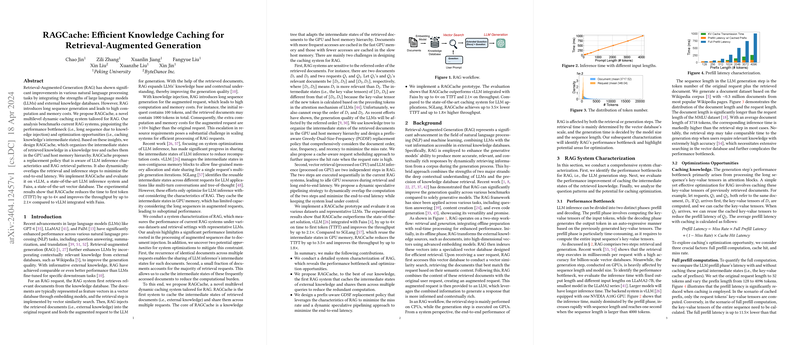

Examining current caching mechanisms, we found significant room for improvement in caching the intermediate states of frequently retrieved knowledge. Experiments show that the standard practice of caching only the LLM inference states is insufficient, because augmented requests produce much longer sequences. Our evaluation also found that a small subset of documents accounts for a large share of retrieval operations, which presents a clear opportunity for caching.
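The skew described above can be checked on any retrieval log. The following is a minimal sketch, assuming the log is simply a list of retrieved document IDs; the function name `top_fraction_coverage` and the toy log are illustrative and do not come from RAGCache itself.

```python
import math
from collections import Counter


def top_fraction_coverage(retrieved_doc_ids, fraction):
    """Return the share of all retrievals that hit the most-retrieved
    `fraction` of distinct documents (e.g. fraction=0.03 for the top 3%)."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    counts = Counter(retrieved_doc_ids)
    if not counts:
        return 0.0
    # Number of distinct documents in the "hot" set, at least one.
    k = max(1, math.ceil(fraction * len(counts)))
    top_hits = sum(count for _, count in counts.most_common(k))
    return top_hits / sum(counts.values())


# Toy log (hypothetical data): one hot document out of four.
log = ["a"] * 6 + ["b"] * 2 + ["c", "d"]
print(top_fraction_coverage(log, 0.25))
```

A value close to 1 for a small `fraction` suggests that caching the states of a few hot documents would serve most requests.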

Architectural Overview

At its core, RAGCache introduces a knowledge tree, a data structure that adapts to the memory hierarchy and organizes cached intermediate states across GPU memory (for frequently accessed data) and host memory (for less frequently accessed data).
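To make the idea concrete, here is a minimal sketch of such a tree. It assumes the tree is a prefix tree keyed by the ordered sequence of retrieved document IDs, with each node holding the intermediate state of one document computed after the documents on its path. That layout, the class names `Node` and `KnowledgeTree`, and the `tier` field are assumptions for illustration; the real system stores key-value tensors and moves nodes between tiers.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Node:
    doc_id: Optional[str] = None   # None for the root
    kv_state: object = None        # placeholder for cached tensors
    tier: str = "gpu"              # "gpu" or "host" (assumed labels)
    children: Dict[str, "Node"] = field(default_factory=dict)


class KnowledgeTree:
    def __init__(self) -> None:
        self.root = Node()

    def insert(self, doc_ids: List[str], kv_states: List[object],
               tier: str = "gpu") -> None:
        """Cache the state of each document along the path doc_ids."""
        node = self.root
        for doc_id, kv in zip(doc_ids, kv_states):
            child = node.children.get(doc_id)
            if child is None:
                child = Node(doc_id, kv, tier)
                node.children[doc_id] = child
            node = child

    def longest_prefix(self, doc_ids: List[str]) -> List[Node]:
        """Return the cached nodes matching the longest prefix of doc_ids."""
        node, matched = self.root, []
        for doc_id in doc_ids:
            child = node.children.get(doc_id)
            if child is None:
                break
            matched.append(child)
            node = child
        return matched


tree = KnowledgeTree()
tree.insert(["d1", "d2"], ["kv(d1)", "kv(d2|d1)"])
hit = tree.longest_prefix(["d1", "d3"])   # only d1 can be reused
```

The lookup is order-sensitive by design: the state of a document depends on what precedes it, so a request for `["d2", "d1"]` reuses nothing from a cached `["d1", "d2"]` path.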

The cache is governed by a prefix-aware Greedy-Dual-Size-Frequency (PGDSF) replacement policy, which considers document access frequency, size, and recency when choosing what to evict, so that cache management matches the access patterns of RAG systems. In addition, RAGCache uses a speculative strategy that overlaps knowledge retrieval with LLM inference, reducing the latency of the RAG pipeline.
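PGDSF builds on the classic Greedy-Dual-Size-Frequency (GDSF) policy, which ranks each entry by `priority = clock + frequency * cost / size` and evicts the entry with the lowest priority. The sketch below shows plain GDSF over a flat cache, not the prefix-aware variant: it ignores the tree structure and the GPU/host tiers. The class name `GDSFCache` and the interpretation of `cost` as the time needed to recompute an entry are assumptions made for illustration.

```python
class GDSFCache:
    """Plain GDSF over a flat key space (illustrative, not PGDSF)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.used = 0
        self.clock = 0.0      # rises on eviction, which encodes recency
        self.entries = {}     # key -> {"size", "cost", "freq", "priority"}

    def _priority(self, entry):
        return self.clock + entry["freq"] * entry["cost"] / entry["size"]

    def access(self, key, size, cost):
        """Record an access to `key`; return True on a cache hit."""
        entry = self.entries.get(key)
        if entry is not None:
            entry["freq"] += 1
            entry["priority"] = self._priority(entry)
            return True
        if size > self.capacity:
            return False      # too large to cache at all
        while self.used + size > self.capacity:
            victim = min(self.entries,
                         key=lambda k: self.entries[k]["priority"])
            # Advance the clock to the evicted priority so that entries
            # inserted or touched later outrank stale ones.
            self.clock = self.entries[victim]["priority"]
            self.used -= self.entries[victim]["size"]
            del self.entries[victim]
        entry = {"size": size, "cost": cost, "freq": 1}
        entry["priority"] = self._priority(entry)
        self.entries[key] = entry
        self.used += size
        return False


cache = GDSFCache(capacity=100)
cache.access("doc-a", size=50, cost=10.0)
cache.access("doc-a", size=50, cost=10.0)   # hit, frequency becomes 2
cache.access("doc-b", size=50, cost=10.0)
cache.access("doc-c", size=50, cost=10.0)   # evicts doc-b, the lowest priority
```

A prefix-aware version would additionally have to respect document order, for example by evicting a node only after the nodes that depend on it as a prefix are gone.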

Implementation and Performance

RAGCache is implemented on top of vLLM and evaluated with several LLM configurations and datasets. It achieves the following improvements:

- Reduction in Time to First Token (TTFT) by up to 4x compared to existing RAG systems on various benchmarks.

- Throughput improvements by up to 2.1x, enabling faster processing rates under comparable computational resources.

Future Directions

While RAGCache marks a significant step forward, RAG systems can be improved further. Potential areas include more advanced predictive loading techniques for the cache and deeper integration with other types of external knowledge bases, possibly extending beyond text to multimodal data.

Conclusion

RAGCache offers a new approach to optimizing retrieval-augmented generation systems, addressing key performance bottlenecks through efficient knowledge caching and speculative overlap of retrieval and inference. Its design, built on a knowledge tree and a tailored replacement policy, coordinates the retrieval and generation steps and provides a foundation for future work in generative AI.