SelfCodeAlign: Self-Alignment for Code Generation

The paper "SelfCodeAlign: Self-Alignment for Code Generation" presents a pipeline for improving code generation models through a process the authors call self-alignment. The approach is fully transparent and avoids dependence on human annotations and on distillation from larger proprietary LLMs, both of which traditionally limit how far such models can scale and under what terms they can legally be used.

Key Contributions

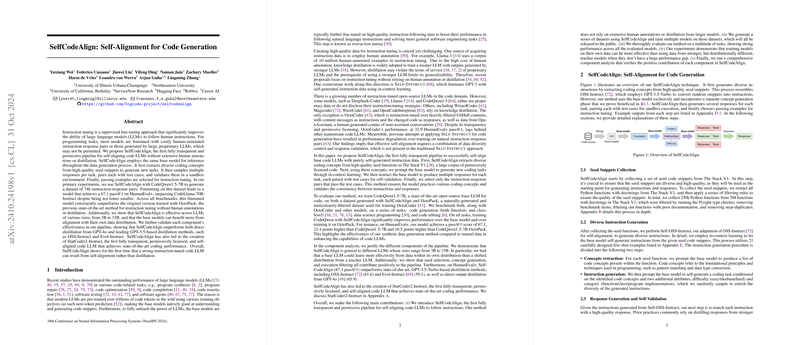

- SelfCodeAlign Methodology: The paper introduces SelfCodeAlign, a novel pipeline that allows a code generation model to align itself using self-generated instruction data. This pipeline involves several stages, including concept extraction from seed snippets, instruction generation, response generation, and self-validation through execution in a sandbox environment.

- Evaluation Results: The authors applied SelfCodeAlign to various models, including CodeQwen1.5, producing a dataset of 74,000 instruction-response pairs. A model fine-tuned on this dataset achieved a 67.1% pass@1 score on HumanEval+, outperforming other models, including the much larger CodeLlama-70B-Instruct.

- Benchmark Performance: The SelfCodeAlign-trained models showed competitive or superior performance across a range of benchmarks, including HumanEval+, MBPP, LiveCodeBench, EvoEval, and EvalPerf. The results suggest that self-generated training data can substantially improve model performance.

- Instruction Generation without Distillation: The pipeline achieves state-of-the-art coding performance without distilling knowledge from larger proprietary systems such as GPT-3.5 or GPT-4, which allows the resulting models to be distributed under more permissive terms.

- Broad Applicability Across Model Sizes: The pipeline's effectiveness was demonstrated across a spectrum of model sizes, from 3 billion to 33 billion parameters, affirming its versatility.
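
The stages listed in the first contribution (concept extraction, instruction generation, response generation, and execution-based self-validation) can be illustrated with a minimal Python sketch. It is not the authors' implementation. The names `Candidate`, `passes_in_subprocess`, `self_align_one`, and the `generate` callable are hypothetical, the prompts are placeholders, and a plain child process stands in for a real sandbox. Keeping the first response that passes its own tests is also a simplifying assumption.

```python
import subprocess
import sys
import tempfile
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, Optional


@dataclass
class Candidate:
    instruction: str  # self-generated task description
    response: str     # candidate solution code
    tests: str        # model-written, assert-based tests for that solution


def passes_in_subprocess(candidate: Candidate, timeout_s: float = 5.0) -> bool:
    """Run solution + tests in a child interpreter; True only on exit code 0.

    NOTE: a child process is a stand-in for a sandbox, not a security boundary.
    """
    program = candidate.response + "\n\n" + candidate.tests + "\n"
    with tempfile.TemporaryDirectory() as tmp:
        script = Path(tmp) / "candidate.py"
        script.write_text(program, encoding="utf-8")
        try:
            result = subprocess.run(
                [sys.executable, str(script)],
                capture_output=True,
                timeout=timeout_s,
                cwd=tmp,
            )
        except subprocess.TimeoutExpired:
            return False  # hanging code is treated as a failed validation
    return result.returncode == 0


def self_align_one(
    seed_snippet: str,
    generate: Callable[[str], str],  # hypothetical wrapper around the base model
    num_samples: int = 4,
) -> Optional[Candidate]:
    """Turn one seed snippet into at most one validated instruction-response pair."""
    # Stage 1: extract coding concepts from the seed snippet.
    concepts = generate(f"List the coding concepts in:\n{seed_snippet}")
    # Stage 2: write a new instruction that exercises those concepts.
    instruction = generate(f"Write a task exercising: {concepts}")
    # Stages 3 and 4: sample responses with tests, keep one that passes.
    for _ in range(num_samples):
        response = generate(f"Solve:\n{instruction}")
        tests = generate(f"Write assert-based tests for:\n{response}")
        candidate = Candidate(instruction, response, tests)
        if passes_in_subprocess(candidate):
            return candidate
    return None  # no sample survived self-validation; the seed is dropped
```

In this sketch the same `generate` callable plays every role, which mirrors the idea that the model aligns itself with its own outputs. Running `self_align_one` over many seed snippets and collecting the non-`None` results would yield the fine-tuning dataset.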

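The pass@1 score quoted in the contributions above is the fraction of benchmark problems for which a generated solution passes all tests. This summary does not say how the authors computed it. A common choice is the unbiased pass@k estimator, 1 - C(n-c, k) / C(n, k), for n samples of which c are correct. The sketch below assumes that estimator, and the function names are illustrative.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem: 1 - C(n - c, k) / C(n, k).

    n: samples generated, c: samples that passed all tests, k: budget.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    # comb(n - c, k) is 0 when fewer than k samples failed, giving 1.0.
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: List[Tuple[int, int]], k: int = 1) -> float:
    """Average pass@k over problems; results holds one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

With a single greedy sample per problem (n = 1, k = 1), the estimate reduces to the plain fraction of problems solved.
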
Theoretical and Practical Implications

Theoretically, the work shows that a model's own capabilities can be sufficient for generating its alignment data, challenging the prevailing assumption that a stronger teacher model is necessary. Practically, SelfCodeAlign is a step toward democratizing advanced code generation tools: it reduces reliance on costly human involvement and avoids the restrictions associated with proprietary models. This opens the path to wider and more ethically guided deployment of such models.

Future Directions

The research invites several future directions. Extending the method to handle long-context instruction-response pairs would broaden its applicability. Other promising areas include reinforcement learning from self-generated negatives, simpler ways of generating reliable test cases, and evaluation on more complex programming tasks.

Conclusion

Overall, the paper makes a compelling case for self-alignment in training code generation models. By demonstrating strong performance without human annotation or distillation from proprietary models, it offers a transparent reference point for future work on code generation.