Bi-Mamba4TS: Enhancing Long-Term Time Series Forecasting with a Bidirectional Mamba Model

Introduction

Time series forecasting (TSF) plays a pivotal role in domains such as traffic management, energy, and finance, particularly where long-term forecasts are required. Transformer-based models have gained traction for this task because they can model long-range dependencies, but the quadratic computational complexity of their attention mechanism with respect to sequence length remains a significant bottleneck. Recently, state space models (SSMs), notably Mamba, have emerged as effective alternatives because of their linear computational complexity and their robustness on long sequences. Building on this, we introduce Bi-Mamba4TS, which integrates bidirectional Mamba encoders to improve long-term time series forecasting.

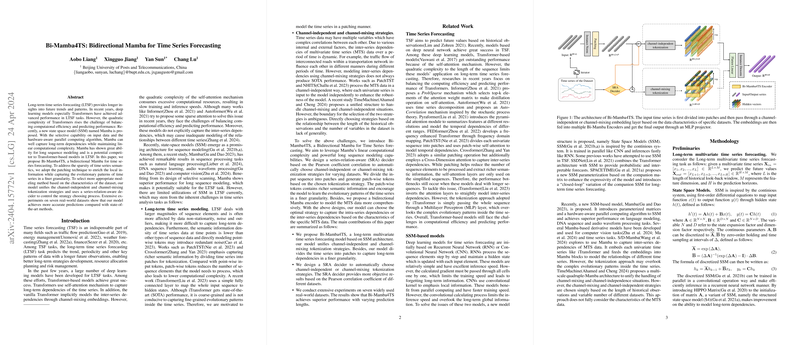

Model Architecture

Bi-Mamba4TS supports both "channel-independent" and "channel-mixing" modeling and chooses between them by evaluating dataset characteristics. The choice is made by a "series-relation-aware" (SRA) decider that uses the Pearson correlation coefficient, which gives an objective basis for strategy selection. The input is divided into patches to enrich local semantic information. This patch-wise tokenization reduces the computational load, because the sequence of patches is typically shorter than the sequence of raw time steps, and it improves the model's ability to capture evolutionary patterns in the data.
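The two ideas in this paragraph can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the function names `patchify` and `sra_decide`, the parameters `corr_threshold` and `ratio_threshold`, and the decision rule itself (the fraction of series pairs whose correlation exceeds a threshold) are assumptions made for illustration, since the text above only states that the decider relies on Pearson correlation coefficients.

```python
import numpy as np


def patchify(x: np.ndarray, patch_len: int, stride: int) -> np.ndarray:
    """Split a (length, channels) series into patches along time.

    Returns an array of shape (channels, num_patches, patch_len).
    """
    length, _ = x.shape
    num_patches = (length - patch_len) // stride + 1
    starts = np.arange(num_patches) * stride
    # Row i of idx holds the time indices covered by patch i.
    idx = starts[:, None] + np.arange(patch_len)[None, :]
    # x[idx] has shape (num_patches, patch_len, channels).
    return x[idx].transpose(2, 0, 1)


def sra_decide(
    train: np.ndarray,
    corr_threshold: float = 0.6,   # hypothetical value
    ratio_threshold: float = 0.5,  # hypothetical value
) -> str:
    """Pick a strategy from pairwise Pearson correlations (assumed rule).

    train has shape (length, channels).
    """
    channels = train.shape[1]
    if channels < 2:
        return "channel-independent"
    corr = np.corrcoef(train, rowvar=False)  # (channels, channels)
    # Keep each unordered pair of distinct series once.
    pairs = corr[np.triu_indices(channels, k=1)]
    strong_ratio = float(np.mean(pairs >= corr_threshold))
    if strong_ratio >= ratio_threshold:
        return "channel-mixing"
    return "channel-independent"


if __name__ == "__main__":
    t = np.linspace(0.0, 2.0 * np.pi, 200, endpoint=False)
    related = np.stack([np.sin(t), 2 * np.sin(t) + 1, 0.5 * np.sin(t)], axis=1)
    print(sra_decide(related))
    print(patchify(related, patch_len=16, stride=8).shape)
```

Under this assumed rule, a dataset whose series mostly move together is routed to channel-mixing, and a dataset with weakly related series is handled channel by channel. The patch length and stride control how many tokens the encoder sees per series.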

Main Contributions:

- We propose a new SSM-based model, Bi-Mamba4TS, built on bidirectional Mamba encoders, which improves the modeling of long-range dependencies in time series data.

- The model introduces a decision-making mechanism, the SRA decider, which uses Pearson correlation coefficients to choose autonomously between channel-independent and channel-mixing strategies according to the dataset's characteristics.

- Extensive experiments on diverse real-world datasets show that Bi-Mamba4TS achieves superior forecasting accuracy compared to existing state-of-the-art methods.
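To make the idea of bidirectional encoding in the first contribution concrete, here is a toy sketch. It deliberately replaces Mamba with a simple linear recurrence, so it shows only the forward and backward scanning pattern, not the actual Mamba block. The names `linear_scan` and `bidirectional_encode`, the `decay` parameter, and the choice to sum the two directions are all assumptions for illustration.

```python
import numpy as np


def linear_scan(tokens: np.ndarray, decay: float) -> np.ndarray:
    """Toy recurrence h_t = decay * h_{t-1} + x_t along axis 0.

    A stand-in for an SSM block (not Mamba); cost is linear in the
    number of tokens.
    """
    state = np.zeros(tokens.shape[1:], dtype=float)
    out = np.empty(tokens.shape, dtype=float)
    for t, x_t in enumerate(tokens):
        state = decay * state + x_t
        out[t] = state
    return out


def bidirectional_encode(tokens: np.ndarray, decay: float = 0.5) -> np.ndarray:
    """Combine a forward scan with a scan over the reversed sequence."""
    forward = linear_scan(tokens, decay)
    # Reverse, scan, then reverse back so position t lines up again.
    backward = linear_scan(tokens[::-1], decay)[::-1]
    # Summing the two directions is an assumption of this sketch.
    return forward + backward


if __name__ == "__main__":
    # A single impulse at the last position of a 3-token sequence.
    tokens = np.array([[0.0], [0.0], [1.0]])
    print(linear_scan(tokens, 0.5).ravel())
    print(bidirectional_encode(tokens).ravel())
```

In the forward-only scan, the first position cannot see the impulse at the end of the sequence. With the backward pass added, every position receives information from both earlier and later tokens.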

Model Evaluation and Results

In experiments on seven real-world datasets, Bi-Mamba4TS consistently outperformed other leading models in long-term multivariate time series forecasting. The model excelled in prediction accuracy and also used computational resources efficiently. These results indicate that Bi-Mamba4TS is effective in practical settings that require accurate and efficient long-term forecasting.

Model Efficiency and Ablation Study

The efficiency analysis confirmed that Bi-Mamba4TS strikes a good balance between accuracy and computational cost. The ablation studies further validated the importance of bidirectional encoding and adaptive strategy selection for forecasting performance. The model is also robust across different parameter settings, which supports its practical utility.

Future Directions

The promising results invite further exploration of more complex and dynamic scenarios, such as adaptive forecasting in rapidly changing environments. Future work could also refine the SRA decider to account for more nuanced dataset characteristics and explore integrating Bi-Mamba4TS with other forecasting frameworks to combine complementary strengths.

Conclusion

Bi-Mamba4TS advances long-term time series forecasting by addressing the computational inefficiency of Transformer-based models and by introducing an adaptive mechanism that aligns the modeling strategy with data characteristics. Its superior performance, supported by experimental validation, makes it a strong candidate for a wide range of time series forecasting applications.