Multilingual Needle in a Haystack: Investigating Long-Context Behavior of Multilingual LLMs

This essay examines the paper "Multilingual Needle in a Haystack: Investigating Long-Context Behavior of Multilingual LLMs." The research introduces an evaluation framework for measuring how well multilingual LLMs retrieve information embedded in long multilingual input sequences. It addresses a comparatively underexplored area: long-context behavior when multiple languages are involved.

Key Contributions

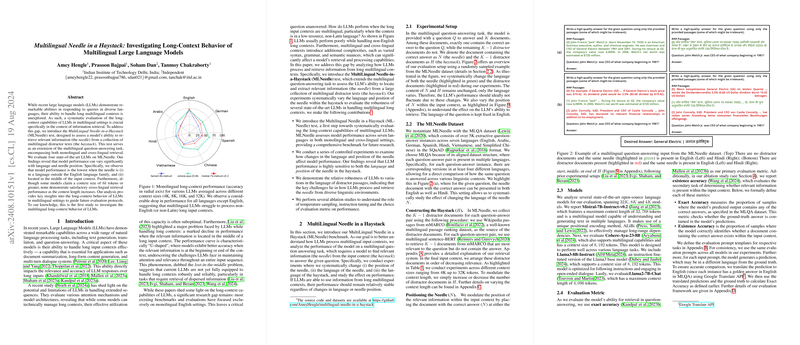

The paper's central contribution is the development of the MultiLingual Needle-in-a-Haystack (MLNeedle) test, which extends existing multilingual question-answering benchmarks. The MLNeedle test is a method to evaluate the LLMs' capacity to locate specific information (the "needle") amidst a collection of multilingual distractor texts (the "haystack"). The test investigates the models' performance in mono- and cross-lingual contexts across several languages.
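To make the setup concrete, the following is a minimal sketch of how a needle passage might be placed among distractor passages at a chosen relative depth and turned into a prompt. The function names `build_haystack` and `build_prompt`, the `depth` parameter, and the prompt template are all assumptions made for illustration; they are not taken from the paper.

```python
from typing import List


def build_haystack(needle: str, distractors: List[str], depth: float) -> List[str]:
    """Insert the needle among the distractors at a relative depth in [0, 1].

    depth=0.0 puts the needle first, depth=1.0 puts it last.
    (Hypothetical helper; the paper's own construction may differ.)
    """
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    index = round(depth * len(distractors))
    return distractors[:index] + [needle] + distractors[index:]


def build_prompt(question: str, passages: List[str]) -> str:
    """Join numbered passages and append the question (assumed template)."""
    numbered = "\n\n".join(
        f"Document [{i + 1}]: {p}" for i, p in enumerate(passages)
    )
    return f"{numbered}\n\nQuestion: {question}\nAnswer:"


# The needle and the distractors may be in different languages.
passages = build_haystack(
    needle="Der Eiffelturm steht in Paris.",
    distractors=["Passage A", "Passage B", "Passage C", "Passage D"],
    depth=0.5,
)
prompt = build_prompt("Where is the Eiffel Tower?", passages)
```

Sweeping `depth` and the languages of the needle and distractors yields the kind of grid of conditions the test is described as covering.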

The authors make several important findings:

- Sensitivity to Language and Position: The retrieval accuracy of LLMs demonstrates a significant dependency on both the language and the position of the needle within the haystack. This sensitivity is heightened when the needle is in non-Latin languages or situated in the middle of a long input context.

- Language of Distractor Passages: Variations in the language of distractor texts did not significantly impact model performance, suggesting that LLMs effectively focus on retrieving the needle regardless of the language of the surrounding non-relevant information.

- Effect of Long Contexts: The paper reports that state-of-the-art LLMs exhibit a marked decline in performance as the input context length increases beyond 8K tokens. Although some models claim to support contexts of up to 32K tokens, their effective context length is shorter, with accuracy dropping substantially well before the claimed limit.

- Instruction Fine-tuning and Sampling: Ablation studies reveal that instruction fine-tuning enhances the retrieval performance of models significantly across various context lengths. Moreover, differing sampling strategies (e.g., temperature sampling vs. greedy decoding) have limited effect on accuracy.
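Each finding above amounts to breaking retrieval accuracy down along one axis: needle language, needle position, distractor language, or context length. A minimal sketch of such a breakdown follows. It assumes each evaluation record is a dictionary with `prediction` and `answers` fields plus whatever grouping fields are of interest, and it scores a prediction as correct when any gold answer appears in it as a substring. The record layout and the scoring rule are both assumptions for illustration, not details confirmed by the paper.

```python
from collections import defaultdict
from typing import Dict, Hashable, List


def contains_answer(prediction: str, gold_answers: List[str]) -> bool:
    """Case-insensitive substring match (an assumed scoring rule)."""
    pred = prediction.casefold()
    return any(answer.casefold() in pred for answer in gold_answers)


def accuracy_by(records: List[dict], key: str) -> Dict[Hashable, float]:
    """Mean accuracy per distinct value of records[i][key]."""
    totals: Dict[Hashable, int] = defaultdict(int)
    hits: Dict[Hashable, int] = defaultdict(int)
    for record in records:
        group = record[key]
        totals[group] += 1
        hits[group] += contains_answer(record["prediction"], record["answers"])
    return {group: hits[group] / totals[group] for group in totals}


# Made-up records, only to show the call shape.
example_records = [
    {"needle_lang": "en", "prediction": "It is Paris.", "answers": ["Paris"]},
    {"needle_lang": "en", "prediction": "Unknown", "answers": ["Rome"]},
    {"needle_lang": "de", "prediction": "Berlin", "answers": ["berlin"]},
]
per_language = accuracy_by(example_records, "needle_lang")
```

Calling `accuracy_by` with a different key, such as a hypothetical `context_length` or `needle_position` field, gives the other breakdowns without changing the scoring code.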

Numerical Results

Results for models such as Mistral-7B-Instruct-v0.2, Llama3-8B-Instruct, and Cohere-Aya-23-8B show consistent patterns. Monolingual long-context performance for non-English languages falls below that for English, reflecting the challenges posed by complex multilingual environments. Notably, the paper shows that models exhibit a U-shaped performance curve: they retrieve information placed at the beginning or end of the input sequence more accurately than information placed in the middle.
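One simple way to quantify a U-shaped curve is to compare accuracy at the two ends of the context with the worst accuracy at any interior position. The sketch below does this with a hypothetical helper, `middle_gap`; the metric itself and the numbers in the example are illustrative assumptions, not values or definitions from the paper.

```python
from typing import Sequence


def middle_gap(accuracies: Sequence[float]) -> float:
    """Mean accuracy at the first and last positions minus the lowest
    interior accuracy. A clearly positive value suggests a U shape.

    `accuracies` is ordered by needle position, from start to end.
    """
    if len(accuracies) < 3:
        raise ValueError("need at least one interior position")
    ends = (accuracies[0] + accuracies[-1]) / 2
    return ends - min(accuracies[1:-1])


# Illustrative values only: strong at the start, weak in the middle.
gap = middle_gap([0.75, 0.25, 0.5])
```

A gap near zero would indicate position-insensitive retrieval, while a negative gap would mean the middle positions are actually easier than the ends.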

Theoretical and Practical Implications

The findings have significant implications for the design and training of LLMs. The models' inherent biases, such as their reduced ability to retrieve information placed mid-context and to handle non-Latin languages, indicate areas that need refinement. From a theoretical perspective, the research calls for attention mechanisms that maintain relevance and focus throughout extended input sequences while accommodating diverse linguistic structures.

Practically, these insights highlight the potential constraints of deploying current LLMs in real-world applications requiring multilingual information retrieval over extended contexts, such as in multilingual customer service or cross-lingual content generation platforms. Addressing these shortcomings could enhance the models' effectiveness, thereby extending their utility across global markets and diverse user bases.

Future Directions

This paper lays the groundwork for further studies into refining multilingual LLM architectures to better handle long and linguistically diverse contexts. The authors' analysis of effective context length and retrieval accuracy across languages suggests that architectural adjustments targeting language variance and long sequences are a promising avenue for further work.

Research in this domain may involve developing improved attention modules or new tokenization strategies to broaden the contextual comprehension of LLMs in multilingual settings. Such efforts could also inform training methods, ultimately leading to more capable and reliable systems for multilingual NLP applications.