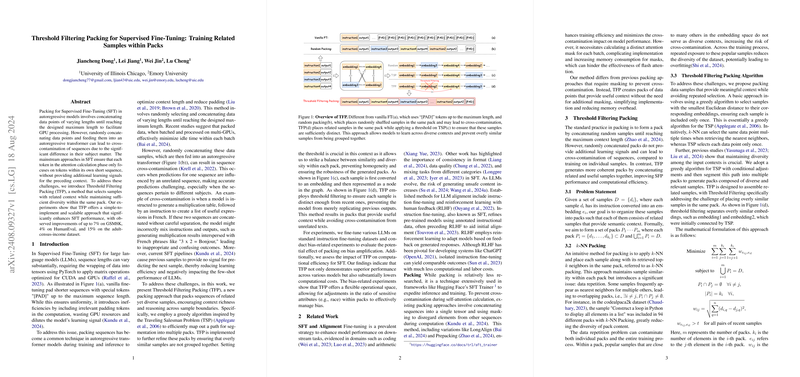

The paper investigates a novel packing strategy for supervised fine-tuning (SFT) of autoregressive LLMs, specifically addressing the challenges arising from heterogeneous sequence lengths and cross-contamination issues inherent in conventional packing techniques. The proposed “Threshold Filtering Packing (TFP)” method introduces a mechanism to group related yet sufficiently diverse samples into coherent packs, thereby enhancing the contextual learning signal during training.

The work is motivated by two primary concerns in standard packing approaches:

- Inefficient utilization of GPU resources: Vanilla fine-tuning pads sequences with special tokens, which leads to redundant computations and diluted learning signals.

- Cross-contamination and overfitting: Random concatenation of samples, even when attention masking is applied, can introduce interference between unrelated contexts. Traditional methods such as -NN packing exacerbate data repetition, which may cause overfitting and reduce generalization.

TFP addresses these issues by employing a greedy algorithm inspired by the Traveling Salesman Problem (TSP, Traveling Salesman Problem). In this framework, each sample’s instruction is first embedded into a high-dimensional vector space. The algorithm then constructs a path through these embedding nodes, selecting at each step the nearest unvisited sample while enforcing a threshold condition. This condition ensures that the Euclidean distance from the most recent samples exceeds a given threshold , thus preventing overly similar samples from being grouped together. The mathematical formulation involves minimizing the total pairwise Euclidean distances within each pack:

where

- denotes the number of packs,

- is the number of elements in the -th pack,

- represents the embedding of the -th sample in pack ,

- measures the Euclidean distance between embeddings,

- and enforces diversity by requiring for recently selected samples.

Key contributions and findings include:

- Improved performance across diverse tasks: Fine-tuning experiments over datasets such as GSM8K, HumanEval, and Alpaca demonstrate quantitative improvements of up to 7% on GSM8K, 4% on HumanEval, and 3% on Alpaca. These gains underscore the advantage of learning from packs that maintain coherent context while mitigating the adverse effects of cross-contamination.

- Robustness against data repetition: Unlike -NN packing—which often leads to the same samples appearing in multiple packs—TFP guarantees disjoint packs by selecting each sample only once. This strategy not only enhances diversity within packs but also avoids detrimental overfitting effects.

- Efficient computational resource usage: By reducing the need for creation and storage of distinct attention masks (since the packs are naturally segmented by context), TFP decreases both memory overhead and training time, which is particularly significant when datasets involve sequences much shorter than the maximum allowable context length.

- Enhanced fairness through controlled sample composition: The work further explores variants of TFP (e.g., TFP (balanced) and TFP (resampling)) that aim to mitigate bias by adjusting the ratio of sensitive demographic attributes within packs. Experiments on tasks such as toxicity classification and income prediction illustrate that balancing the sensitive attribute ratios within packs contributes to lower demographic parity differences () and equalized odds differences (), particularly in low-shot settings.

- Ablation studies and parameter sensitivity: Detailed analyses reveal the critical importance of the choice of embedding model and the distance threshold number . Base models like bert-base-uncased outperform other embedding approaches in capturing the requisite contextual similarity for effective packing. Furthermore, extremes in the choice of —with amounting to naive TSP and overly large values approximating random selection—demonstrate that an intermediate value optimally balances contextual relevance and diversity.

The comprehensive experiments are conducted on various LLMs including Llama2-7B, Llama3-8B, and Mistral-7B under both zero-shot and few-shot settings, ensuring that the proposed approach is evaluated rigorously across different architectures and task scenarios. Furthermore, the method’s simplicity in merging with existing SFT pipelines is highlighted, as it requires only a reordering of the dataset into contextually coherent packs without introducing additional model complexity.

Overall, the paper offers a systematic treatment of the packing problem in SFT, providing both theoretical formulation and extensive empirical validation. Its integration of threshold-based filtering into the greedy TSP-inspired framework represents a methodical advancement for mitigating cross-contamination and achieving better performance and fairness in fine-tuned LLMs.