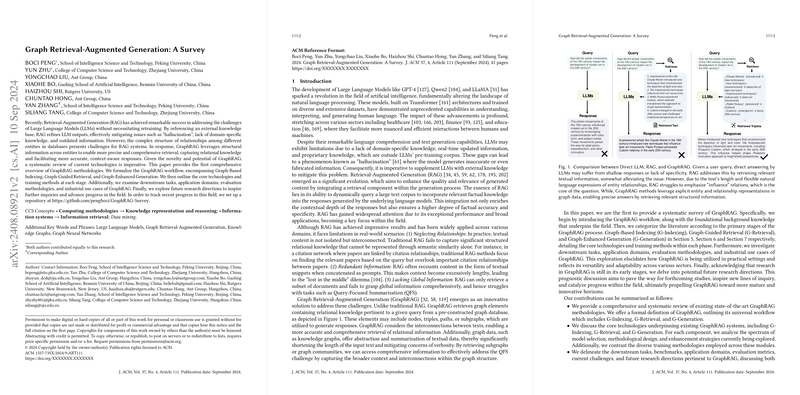

The paper "Graph Retrieval-Augmented Generation: A Survey" provides a systematic examination of GraphRAG (Graph Retrieval-Augmented Generation) methodologies, addressing limitations in conventional RAG (Retrieval-Augmented Generation) by incorporating structural information from graph databases. The analysis focuses on three core components: Graph-Based Indexing (G-Indexing), Graph-Guided Retrieval (G-Retrieval), and Graph-Enhanced Generation (G-Generation).
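The three stages named above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration and is not taken from the survey: the toy triples, the function names `g_index`, `g_retrieve`, and `g_generate_prompt`, and the choice of substring matching as the retriever. A real system would replace each step with one of the indexing, retrieval, and generation techniques the survey catalogs.

```python
from collections import defaultdict

# Toy text-attributed graph stored as (head, relation, tail) triples.
# All data here is invented for illustration.
TRIPLES = [
    ("Marie Curie", "born in", "Warsaw"),
    ("Marie Curie", "won", "Nobel Prize in Physics"),
    ("Warsaw", "capital of", "Poland"),
    ("Pierre Curie", "spouse of", "Marie Curie"),
]


def g_index(triples):
    """G-Indexing: build an adjacency index keyed by head entity."""
    index = defaultdict(list)
    for head, rel, tail in triples:
        index[head].append((rel, tail))
    return index


def g_retrieve(index, query):
    """G-Retrieval: return triples whose head entity is mentioned in the query.

    Substring matching is a stand-in for a real retriever (assumption).
    """
    q = query.lower()
    return [
        (head, rel, tail)
        for head, edges in index.items()
        if head.lower() in q
        for rel, tail in edges
    ]


def g_generate_prompt(query, triples):
    """G-Generation (input side): linearize triples with a template.

    The returned string would be passed to a language model; no model
    is called here.
    """
    facts = "\n".join(f"- {h} {r} {t}." for h, r, t in triples)
    return f"Facts:\n{facts}\n\nQuestion: {query}\nAnswer:"


if __name__ == "__main__":
    question = "Where was Marie Curie born?"
    retrieved = g_retrieve(g_index(TRIPLES), question)
    print(g_generate_prompt(question, retrieved))
```

The sketch keeps the stages as separate functions so that any one of them could be swapped out without touching the others, which mirrors how the survey treats them as separable components.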

Key Contributions

- Formalization of GraphRAG Workflow:

  - Defines a universal framework comprising G-Indexing (constructing/selecting graph databases), G-Retrieval (extracting relevant graph elements), and G-Generation (synthesizing outputs using retrieved data).

  - Introduces text-attributed graphs (TAGs) as a unified representation for graph data, where nodes/edges have textual attributes.

- Technical Innovations:

  - G-Indexing:

    - Graph Sources: Open KGs (Knowledge Graphs) (e.g., Wikidata, ConceptNet) vs. self-constructed graphs (e.g., document-entity networks).

    - Indexing Strategies: Graph indexing (structural preservation), text indexing (template-based linearization), vector indexing (embedding-based retrieval).

  - G-Retrieval:

    - Retrievers: Non-parametric (BFS/DFS), LM-based (RoBERTa, GPT-4), GNN-based (message-passing architectures).

    - Paradigms: Once retrieval (single query), iterative retrieval (adaptive/non-adaptive), multi-stage retrieval (hierarchical filtering).

    - Granularities: Nodes, triplets, paths, subgraphs.

    - Enhancements: Query expansion (LLM-generated hypotheses), knowledge merging/pruning (similarity ranking, LLM-based filtering).

  - G-Generation:

    - Generators: GNNs (graph encoding), LMs (textual integration via graph languages), hybrid models (cascaded/parallel fusion).

    - Graph Formats: Adjacency tables, natural language templates, syntax trees, code-like formats (GML/GraphML).

- Applications and Evaluation:

  - Tasks: KBQA (Knowledge Base QA), CSQA (Commonsense QA), entity linking, fact verification.

  - Benchmarks: WebQSP, CWQ, GrailQA, and domain-specific datasets (e.g., CMeKG for biomedicine).

  - Metrics: Accuracy, EM (Exact Match), F1, BERTScore, retrieval quality (answer coverage vs. subgraph size).

  - Industry Systems: Microsoft GraphRAG (community summarization), NebulaGraph (graph-native RAG), Ant Group’s DB-GPT (triple extraction).
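The answer-level metrics listed under Applications and Evaluation (EM and F1) can be sketched as follows. This is one common token-level formulation with a simple normalization step (lowercasing, stripping punctuation and English articles). The survey does not prescribe a normalization, so `normalize` and the function names are assumptions; benchmarks that score sets of answer entities may compute F1 differently.

```python
import re
import string
from collections import Counter


def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, gold):
    """Return 1.0 if the normalized strings are identical, else 0.0."""
    return float(normalize(prediction) == normalize(gold))


def token_f1(prediction, gold):
    """Harmonic mean of token-level precision and recall."""
    pred_tokens = normalize(prediction).split()
    gold_tokens = normalize(gold).split()
    if not pred_tokens or not gold_tokens:
        # Both empty counts as a match; one empty counts as a miss.
        return float(pred_tokens == gold_tokens)
    # Multiset intersection counts each shared token at most as often
    # as it appears in both sequences.
    overlap = sum((Counter(pred_tokens) & Counter(gold_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(gold_tokens)
    return 2 * precision * recall / (precision + recall)
```

EM rewards only complete agreement, while token-level F1 gives partial credit when a prediction contains the gold answer plus extra tokens, which is why the two are usually reported together.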

Challenges and Future Directions

- Dynamic Graphs: Real-time updates for evolving KGs (e.g., citation networks).

- Scalability: Efficient retrieval in billion-scale industrial KGs.

- Multimodal Integration: Combining text with images/audio in graph representations.

- Lossless Compression: Reducing input length for LM compatibility while preserving structural fidelity.

- Graph Foundation Models: Integrating pretrained graph foundation models into retrieval pipelines.

Critical Observations

- Hybrid Models: Combining GNNs (structural encoding) and LMs (text understanding) achieves superior performance but faces integration challenges.

- Retrieval Efficiency: Non-parametric retrievers (e.g., BFS) offer speed but lack accuracy, whereas LM-based methods incur computational costs.

- Industrial Adoption: Systems like Microsoft GraphRAG demonstrate 20–30% improvements in answer coverage for QFS (Query-Focused Summarization) tasks by leveraging community detection and summarization.
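The trade-off between retrieval quality and retrieval cost can be made concrete by measuring answer coverage against subgraph size for a non-parametric BFS retriever. The sketch below is illustrative only: the toy edge list, the node names, and the helpers `build_adjacency`, `k_hop_nodes`, and `answer_coverage` are assumptions, not code or data from the survey.

```python
from collections import deque

# Invented undirected toy graph; "q_entity" stands for the entity
# linked from the query, "answer_*" for gold answer nodes.
EDGES = [
    ("q_entity", "a"),
    ("a", "b"),
    ("b", "answer_1"),
    ("q_entity", "c"),
    ("c", "answer_2"),
    ("c", "d"),
]


def build_adjacency(edges):
    """Build an undirected adjacency map from an edge list."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    return adj


def k_hop_nodes(adj, seed, k):
    """Breadth-first search returning all nodes within k hops of seed."""
    seen = {seed}
    frontier = deque([(seed, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == k:
            continue  # do not expand beyond the hop limit
        for neighbor in adj.get(node, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return seen


def answer_coverage(retrieved, gold):
    """Fraction of gold answer nodes present in the retrieved node set."""
    return len(retrieved & gold) / len(gold) if gold else 0.0


if __name__ == "__main__":
    adj = build_adjacency(EDGES)
    gold = {"answer_1", "answer_2"}
    for k in (1, 2, 3):
        nodes = k_hop_nodes(adj, "q_entity", k)
        print(k, len(nodes), answer_coverage(nodes, gold))
```

On this toy graph, coverage rises with each extra hop but so does the number of retrieved nodes, which is the quantity that later has to fit into the generator's input.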

The survey positions GraphRAG as a critical advancement for relational reasoning in LLMs, with applications spanning healthcare, e-commerce, and legal domains. Future work must address scalability, dynamic data handling, and standardization of evaluation benchmarks.