Enhancing Zero-shot Reasoning by Ensembling Role-playing and Neutral Prompts in LLMs

Introduction and Motivation

The paper "Persona is a Double-edged Sword: Enhancing the Zero-shot Reasoning by Ensembling the Role-playing and Neutral Prompts" by Junseok Kim, Nakyeong Yang, and Kyomin Jung addresses a key challenge for large language models (LLMs): role-playing personas have a double-edged effect on reasoning. A role-playing persona assigns the model a specific role and set of characteristics through the prompt. Such personas can improve an LLM's performance, but an ill-suited persona can also reduce accuracy. Because LLMs are sensitive to the persona they are given, relying on personas alone risks performance degradation, which calls for a balanced approach.

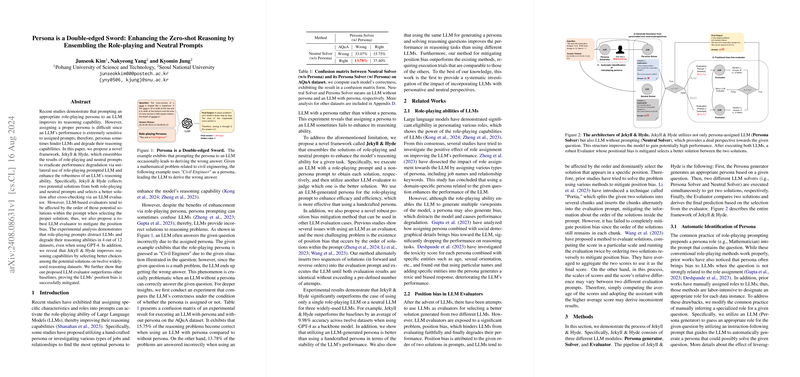

Framework: Jekyll & Hyde

The authors propose a framework named Jekyll & Hyde, designed to limit the pitfalls of role-playing personas while keeping their benefits. It collects the outputs of both a role-playing prompt and a neutral prompt and then cross-verifies them with an LLM-based evaluator. The framework has three key components:

- Persona Generator: An LLM automatically generates a persona suited to the given question, removing the need to craft role-playing prompts by hand.

- Solvers:

  - Persona Solver: Answers the question using the role-playing persona produced by the Persona Generator.

  - Neutral Solver: Answers the question without any persona, providing a persona-free response.

- LLM Evaluator: Judges which of the two solutions, from the Persona Solver and the Neutral Solver, is better. To mitigate position bias, it is run multiple times with the two solutions presented in different orders.
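
To make the data flow between the components concrete, here is a minimal Python sketch under stated assumptions. The `LLM` and `Judge` callables, the helper names (`generate_persona`, `persona_solve`, `neutral_solve`, `ensemble`), and the prompt wording are hypothetical illustrations, not the authors' prompts or code. The `judge` argument simply stands in for the LLM Evaluator, and `fake_llm` is a canned stub so the sketch runs without a model.

```python
from typing import Callable, Dict

# Hypothetical interfaces (not from the paper): an LLM maps a prompt to text,
# and a judge returns whichever of two candidate solutions it prefers.
LLM = Callable[[str], str]
Judge = Callable[[str, str, str], str]


def generate_persona(llm: LLM, question: str) -> str:
    """Persona Generator: ask the model to propose a suitable role."""
    prompt = (
        "Write a one-sentence role-playing persona best suited to answer "
        f"this question.\nQuestion: {question}\nPersona:"
    )
    return llm(prompt).strip()


def persona_solve(llm: LLM, persona: str, question: str) -> str:
    """Persona Solver: answer while role-playing the generated persona."""
    return llm(f"{persona}\nQuestion: {question}\nAnswer:").strip()


def neutral_solve(llm: LLM, question: str) -> str:
    """Neutral Solver: answer with no persona in the prompt."""
    return llm(f"Question: {question}\nAnswer:").strip()


def ensemble(llm: LLM, judge: Judge, question: str) -> Dict[str, str]:
    """Run both solvers, then let the evaluator choose the final answer."""
    persona = generate_persona(llm, question)
    with_persona = persona_solve(llm, persona, question)
    without_persona = neutral_solve(llm, question)
    final = judge(question, with_persona, without_persona)
    return {
        "persona": persona,
        "persona_answer": with_persona,
        "neutral_answer": without_persona,
        "final": final,
    }


def fake_llm(prompt: str) -> str:
    # Canned replies so the sketch runs without a real model.
    if prompt.endswith("Persona:"):
        return "You are a meticulous math teacher."
    if prompt.startswith("You are"):
        return "4"
    return "5"


def trust_persona_judge(question: str, first: str, second: str) -> str:
    return first  # placeholder; a real evaluator would be another LLM call


result = ensemble(fake_llm, trust_persona_judge, "What is 2 + 2?")
print(result["final"])  # 4
```

Passing the model in as a plain function keeps the pipeline independent of any particular API client, so the stub can be replaced by a real model call without touching the solver logic.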

Experimental Analysis

The authors conducted extensive experiments across twelve widely used reasoning datasets to validate the framework. Key findings include:

- Role-playing prompts can degrade performance in some cases. On AQuA, for example, the confusion matrix shows that 13.78% of problems were answered incorrectly with a persona prompt even though they were answered correctly without one.

- The Jekyll & Hyde framework substantially improved reasoning, outperforming both the role-playing prompt and the neutral prompt used alone on most datasets. With GPT-4, for instance, it improved accuracy by an average of 9.98% across the twelve datasets.

- The framework's LLM evaluator effectively mitigated position bias and outperformed other baseline methods.
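
The confusion-matrix finding also bounds what an ensemble of two solvers can gain. The sketch below uses made-up correctness labels for ten hypothetical problems (not data from the paper) and a hypothetical helper, `outcome_matrix`, to tabulate how often the persona and neutral prompts are each right or wrong. The (persona wrong, neutral right) cell is the kind of statistic reported for AQuA. Since an evaluator that only chooses between the two answers can be correct only when at least one of them is, one minus the (wrong, wrong) cell is an upper bound on the ensemble's accuracy.

```python
from collections import Counter
from typing import Dict, List, Tuple

Outcome = Tuple[bool, bool]  # (persona_correct, neutral_correct)


def outcome_matrix(persona_correct: List[bool],
                   neutral_correct: List[bool]) -> Dict[Outcome, float]:
    """Fraction of problems falling in each (persona, neutral) outcome cell."""
    if len(persona_correct) != len(neutral_correct):
        raise ValueError("both lists must cover the same problems")
    if not persona_correct:
        raise ValueError("need at least one problem")
    n = len(persona_correct)
    counts = Counter(zip(persona_correct, neutral_correct))
    cells = [(True, True), (True, False), (False, True), (False, False)]
    return {cell: counts[cell] / n for cell in cells}


# Hypothetical per-problem correctness for ten toy problems.
persona = [True, True, False, True, False, True, True, False, True, True]
neutral = [True, False, True, True, False, True, True, True, True, False]

matrix = outcome_matrix(persona, neutral)
persona_hurt = matrix[(False, True)]          # right without persona, wrong with it
oracle_ceiling = 1 - matrix[(False, False)]   # at least one solver is right
print(matrix)
print(f"persona hurt: {persona_hurt:.0%}, ensemble ceiling: {oracle_ceiling:.0%}")
```

In this toy data both prompts reach 70% accuracy on their own, yet they fail on different problems, which is exactly the situation in which choosing between their answers can help.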

Position Bias Mitigation

To address the inherent position bias of LLM evaluators (their tendency to favor a solution because of where it appears in the prompt), the authors present the two solutions to the evaluator in both forward and reverse order, repeating the comparison until the two verdicts agree or a predefined number of attempts is reached. This makes the evaluation less sensitive to the order in which the solutions are presented.
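
The forward/reverse check can be sketched as follows. This is a minimal illustration assuming a hypothetical `judge` function that returns 0 when it prefers the solution shown first and 1 when it prefers the one shown second. The names `debiased_compare` and `max_attempts`, and returning `None` when no consistent verdict is reached, are assumptions, since the fallback behavior is not described here.

```python
from typing import Callable, Optional

# Hypothetical evaluator interface: judge(question, first, second) returns 0
# if it prefers the solution shown first and 1 if it prefers the second.
Judge = Callable[[str, str, str], int]


def debiased_compare(judge: Judge, question: str, sol_a: str, sol_b: str,
                     max_attempts: int = 3) -> Optional[str]:
    """Ask the judge in forward and reverse order until the verdicts agree.

    Returns the preferred solution, or None if the two orderings never agree
    within max_attempts (what to do then is left to the caller).
    """
    for _ in range(max_attempts):
        forward = judge(question, sol_a, sol_b)  # A presented first
        reverse = judge(question, sol_b, sol_a)  # B presented first
        # Map each positional verdict back to the solution it actually picked.
        forward_pick = sol_a if forward == 0 else sol_b
        reverse_pick = sol_b if reverse == 0 else sol_a
        if forward_pick == reverse_pick:
            return forward_pick
    return None


def first_slot_judge(question: str, first: str, second: str) -> int:
    return 0  # pure position bias: always prefers whatever is shown first


def content_judge(question: str, first: str, second: str) -> int:
    return 0 if first.endswith("= 4") else 1  # prefers the correct sum


q = "What is 2 + 2?"
a, b = "2 + 2 = 4", "2 + 2 = 5"
print(debiased_compare(content_judge, q, a, b))     # 2 + 2 = 4
print(debiased_compare(first_slot_judge, q, a, b))  # None
```

Retrying only helps when the evaluator is stochastic (for example, sampled at a nonzero temperature). A deterministic judge that always favors the first slot, like `first_slot_judge`, never produces agreeing verdicts, so the loop simply exhausts its attempts.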

Implications and Future Directions

The practical implications of this research are multifaceted:

- Improvement in Reasoning Tasks: By leveraging the complementary strengths of role-playing and neutral prompts, the framework enhances the reliability of LLMs in various reasoning tasks.

- Automated Persona Generation: This reduces the manual labor involved in crafting persona prompts, making the process more efficient and scalable.

- Bias Mitigation: The framework addresses position bias in LLM evaluators, contributing to fairer and more accurate assessments of LLM outputs.

The theoretical implications suggest a new direction in the design and utilization of LLMs, emphasizing the potential of ensemble methods to balance different perspectives and reduce the inherent biases in AI systems.

Conclusion

The Jekyll & Hyde framework advances the use of LLMs for reasoning by balancing role-playing and neutral prompts rather than relying on either alone. Its ensemble design and its mitigation of evaluator position bias point to future research that could refine these methods further and apply them to more complex and diverse reasoning tasks.
