Persona Utilization in LLMs: Role-Playing and Personalization

The paper "Two Tales of Persona in LLMs: A Survey of Role-Playing and Personalization" surveys how personas are used in large language models (LLMs) to shape their responses and behavior across contexts. Because existing research in this area is scattered, the paper proposes a unified view that groups prior work into two primary directions: LLM Role-Playing and LLM Personalization.

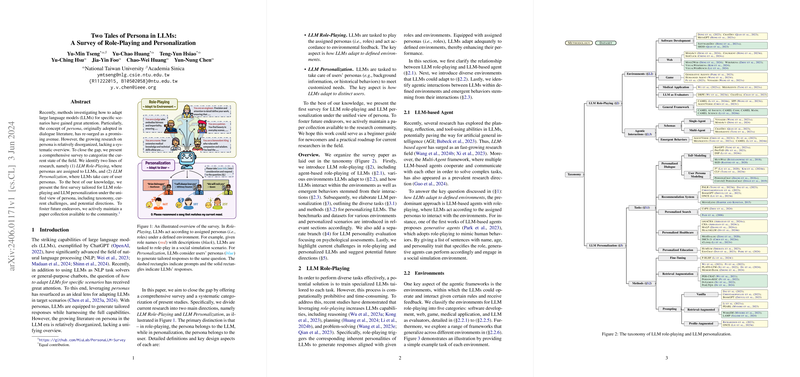

The paper begins by distinguishing these two directions: role-playing, where personas are assigned to LLMs so that they simulate characters and roles within a given environment, and personalization, where LLMs adapt to a user's individual needs and interaction history. The taxonomy is then extended with a list of application domains, including software development, medicine, education, and search.

Role-Playing in LLMs

In role-playing, LLMs are assigned specific personas that guide how they interact within simulated environments. The paper reviews frameworks in which LLMs act as agents in single-agent or multi-agent setups. In collaborative settings, each agent receives a specific role, for example in software development projects or in patient-doctor consultations in medical applications. The tasks range from difficult code generation to diagnostic reasoning, and the paper reports that role assignment often improves task performance and the quality of interaction. The paper also considers destructive behaviors that may arise in multi-agent settings, which underlines the need for care when assigning personas so that biases or unsafe actions are not reinforced.
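The multi-agent role assignment described above can be sketched in a few lines. This is a minimal illustration rather than any framework from the survey: the `Persona` class, `build_messages`, `run_round`, and the system/user message layout are assumptions made for this example, and `echo_model` is a stand-in for a real LLM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Message = Dict[str, str]


@dataclass(frozen=True)
class Persona:
    """Hypothetical container for a role assigned to one agent."""
    name: str
    description: str


def build_messages(persona: Persona, task: str, transcript: List[str]) -> List[Message]:
    """Put the persona in the system message and the task plus prior turns in the user message."""
    history = "\n".join(transcript) if transcript else "(no prior turns)"
    return [
        {"role": "system", "content": f"You are {persona.name}. {persona.description}"},
        {"role": "user", "content": f"Task: {task}\nConversation so far:\n{history}"},
    ]


def run_round(
    personas: List[Persona],
    task: str,
    generate: Callable[[List[Message]], str],
) -> List[str]:
    """Let each persona speak once, in order, seeing the turns that came before it."""
    transcript: List[str] = []
    for persona in personas:
        reply = generate(build_messages(persona, task, transcript))
        transcript.append(f"{persona.name}: {reply}")
    return transcript


def echo_model(messages: List[Message]) -> str:
    """Stand-in for an LLM: reports which system prompt it was conditioned on."""
    return f"[reply conditioned on: {messages[0]['content']}]"


if __name__ == "__main__":
    team = [
        Persona("Programmer", "You write the code."),
        Persona("Reviewer", "You check the code for bugs."),
    ]
    for line in run_round(team, "Implement a sort function", echo_model):
        print(line)
```

Passing the model as a plain callable keeps the sketch independent of any particular provider; a real deployment would replace `echo_model` and would likely add the safety checks on persona content that the paper calls for.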

Personalization in LLMs

Personalization, by contrast, focuses on adapting LLMs to user-specific data in order to provide customized experiences, whether in recommendation systems, personalized education, or healthcare dialogues. The paper analyzes in depth how LLMs can move beyond generic responses by aligning more closely with a user's preferences. The challenges it discusses include the introduction of biases and risks to personal privacy, particularly when sensitive user data is integrated.
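One simple way to picture personalization is a prompt assembled from a user profile and recent interactions, with sensitive fields withheld. The sketch below is only an illustration under assumptions: the function name, the field names, and the default set of private keys are hypothetical, and prompt construction is just one of several personalization approaches.

```python
from typing import Dict, FrozenSet, List

# Hypothetical default set of profile fields treated as sensitive.
DEFAULT_PRIVATE_KEYS: FrozenSet[str] = frozenset({"email", "diagnosis"})


def build_personalized_prompt(
    profile: Dict[str, str],
    history: List[str],
    query: str,
    max_history: int = 3,
    private_keys: FrozenSet[str] = DEFAULT_PRIVATE_KEYS,
) -> str:
    """Combine non-sensitive profile fields, recent history, and the current query."""
    # Drop fields marked private before anything reaches the prompt.
    public = {k: v for k, v in profile.items() if k not in private_keys}
    profile_lines = [f"- {key}: {value}" for key, value in sorted(public.items())]

    # Keep only the most recent turns; history[-0:] would return everything,
    # so a limit of zero is handled explicitly.
    recent = history[-max_history:] if max_history > 0 else []
    history_lines = [f"- {turn}" for turn in recent]

    sections = [
        "User profile:",
        *(profile_lines or ["- (none)"]),
        "Recent interactions:",
        *(history_lines or ["- (none)"]),
        f"Current request: {query}",
    ]
    return "\n".join(sections)


if __name__ == "__main__":
    print(
        build_personalized_prompt(
            {"diet": "vegetarian", "email": "user@example.com"},
            ["asked about pasta", "asked about curry"],
            "Suggest a dinner recipe",
        )
    )
```

Filtering on a fixed key list is a deliberately crude privacy measure; it shows where such a control would sit, not how a production system should protect user data.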

Evaluation and Future Directions

The paper also reviews approaches for evaluating how well an LLM aligns with its intended persona. These include traditional psychometric instruments, such as Big Five personality inventories, as well as newer methods designed specifically for LLMs. Despite the promising applications, the authors discuss current limitations, such as the absence of comprehensive benchmarks and the difficulty of managing long-context user information without violating privacy requirements.
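To make the psychometric style of evaluation concrete, the sketch below scores Likert-scale questionnaire answers per trait, flipping reverse-keyed items before averaging. The item identifiers, the 1 to 5 scale, and the function name are assumptions for illustration; they are not taken from the paper or from any specific published inventory, and collecting the answers from a persona-conditioned LLM is left out.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List, Tuple

# (item_id, trait, reverse_keyed); hypothetical items for illustration only.
Item = Tuple[str, str, bool]


def score_traits(
    responses: Dict[str, int],
    items: List[Item],
    scale_max: int = 5,
) -> Dict[str, float]:
    """Average Likert responses per trait, reversing reverse-keyed items first."""
    by_trait: Dict[str, List[int]] = defaultdict(list)
    for item_id, trait, reverse_keyed in items:
        raw = responses[item_id]
        if not 1 <= raw <= scale_max:
            raise ValueError(f"response for {item_id!r} is outside 1..{scale_max}")
        # On a 1..scale_max scale, a reverse-keyed answer r counts as scale_max + 1 - r.
        by_trait[trait].append(scale_max + 1 - raw if reverse_keyed else raw)
    return {trait: mean(values) for trait, values in by_trait.items()}


if __name__ == "__main__":
    items: List[Item] = [
        ("e1", "extraversion", False),
        ("e2", "extraversion", True),
        ("a1", "agreeableness", False),
        ("a2", "agreeableness", True),
    ]
    answers = {"e1": 5, "e2": 1, "a1": 4, "a2": 2}
    print(score_traits(answers, items))
```

Comparing the resulting trait scores with the profile the persona was meant to express gives one rough measure of persona alignment.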

Looking ahead, progress depends on overcoming these challenges: expanding dataset availability and refining techniques for bias mitigation and persona adaptability. The requirements for secure and effective deployment, especially in sensitive areas such as mental health and education, underline the broader implications of this work. The prospects for more personalized and efficient LLM interaction are substantial, provided that these obstacles are addressed systematically.

In conclusion, this paper serves as a crucial resource for researchers interested in the application of personas in LLMs, offering a structured perspective to guide future investigations and innovations in this rapidly evolving field of artificial intelligence.