AI-Assisted Generation of Difficult Math Questions: An Expert Overview

The paper, "AI-Assisted Generation of Difficult Math Questions," addresses the growing need for high-quality, diverse, and challenging mathematics questions by combining the strengths of large language models (LLMs) with human expertise. It presents a human-in-the-loop framework for producing difficult math questions efficiently.

Methodology

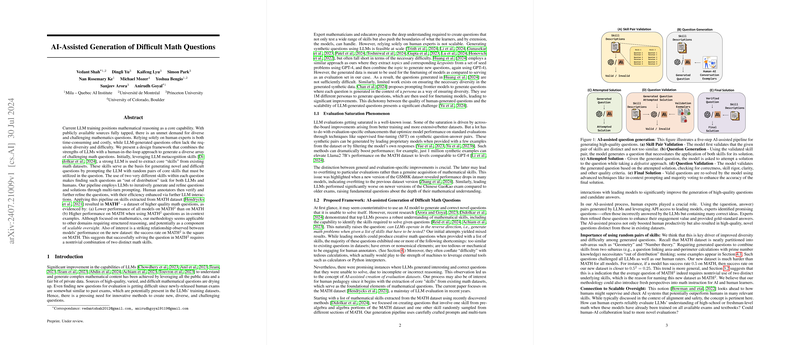

The proposed five-step pipeline combines LLM generation with human verification:

- Skill Pair Validation: The model first validates randomly selected pairs of distinct mathematical skills to ensure they are not qualitatively similar, using provided skill exemplars.

- Question Generation: Utilizing validated skill pairs, the model generates novel questions incorporating both skills and provides brief solutions.

- Solution Attempt: The model attempts to solve the generated question with a defeatist approach, identifying potential flaws in the question.

- Question Validation: Using a fixed rubric, the model validates questions based on several criteria (e.g., single answer requirement, computational tractability), followed by majority voting for robustness.

- Final Solution and Re-validation: The validated question is re-solved to ensure accuracy and consistency of the final solution, discarding questions with ambiguous answers.
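The five steps above can be sketched as a single function. This is only an illustrative outline under stated assumptions, not the authors' implementation: `LLM` is a hypothetical callable that maps a prompt to a text response, the prompt wording is invented, and requiring all re-solved answers to agree is one assumed way to detect "ambiguous answers".

```python
import random
from collections import Counter
from typing import Callable, Optional

# Hypothetical stand-in for any model call: prompt text in, response text out.
LLM = Callable[[str], str]


def sample_skill_pair(skills: list[str], rng: random.Random) -> tuple[str, str]:
    """Pick two distinct skills uniformly at random."""
    first, second = rng.sample(skills, 2)
    return first, second


def majority_vote(votes: list[str]) -> str:
    """Return the most frequent vote."""
    return Counter(votes).most_common(1)[0][0]


def run_pipeline(llm: LLM, skill_a: str, skill_b: str, n_votes: int = 3) -> Optional[dict]:
    """Run one skill pair through the five steps; return None if it is rejected."""
    # Step 1: skill pair validation (reject qualitatively similar skills).
    verdict = llm(f"Are these skills qualitatively different (yes/no)? {skill_a} | {skill_b}")
    if verdict.strip().lower() != "yes":
        return None

    # Step 2: question generation with a brief solution.
    question = llm(f"Write a question that needs both skills, with a brief solution: {skill_a} | {skill_b}")

    # Step 3: solution attempt that looks for flaws in the question.
    critique = llm(f"Attempt this question and list any flaws you find: {question}")

    # Step 4: rubric-based validation, repeated and decided by majority vote.
    votes = [
        llm(f"Rubric check, answer pass or fail. Question: {question} Critique: {critique}").strip().lower()
        for _ in range(n_votes)
    ]
    if majority_vote(votes) != "pass":
        return None

    # Step 5: re-solve several times; assumed rule: discard if the answers disagree.
    answers = [llm(f"Solve and give only the final answer: {question}").strip() for _ in range(n_votes)]
    if len(set(answers)) != 1:
        return None

    return {"skills": (skill_a, skill_b), "question": question, "critique": critique, "answer": answers[0]}
```

A candidate returned by `run_pipeline` would still be handed to a human reviewer, since the framework relies on human verification rather than on the model's own checks alone.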

Experimental Setup and Results

The framework was applied to the MATH dataset: 114 distinct skills were extracted, filtered, and used to generate 180 verified questions, which form the MATH² dataset. A range of open-source and proprietary models was then evaluated on this new dataset.

Key findings include:

- All models performed significantly worse on MATH² than on the original MATH dataset, highlighting the increased difficulty of the new questions.

- The performance of models on MATH² followed an intuitive relationship: $Y = X^2$, where $X$ and $Y$ are the performance scores on MATH and MATH², respectively. This suggests that solving a question in MATH² requires successfully combining two distinct math skills.
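One way to read a squared relationship between the two scores: if a model handles a single skill with probability equal to its MATH accuracy, and the two skills in a question succeed or fail independently, then both succeed with the square of that probability. The sketch below works through that arithmetic. The independence assumption, the function name, and the accuracy values are illustrative choices, not figures from the paper.

```python
def predicted_two_skill_accuracy(math_accuracy: float) -> float:
    """Predict accuracy on two-skill questions as the square of single-skill accuracy.

    Assumes both skills must succeed and that they succeed independently.
    """
    if not 0.0 <= math_accuracy <= 1.0:
        raise ValueError("accuracy must be a fraction between 0 and 1")
    return math_accuracy ** 2


# Illustrative accuracies only; these are not results reported in the paper.
for x in (0.9, 0.7, 0.5):
    y = predicted_two_skill_accuracy(x)
    relative_drop = 1 - y / x  # algebraically equal to 1 - x
    print(f"MATH accuracy {x:.2f} -> predicted {y:.2f} (relative drop {relative_drop:.0%})")
```

Under this reading the relative drop equals one minus the MATH accuracy, so weaker models lose proportionally more, which is consistent with the larger relative drops noted for smaller models.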

Implications and Future Directions

The creation of the MATH² dataset demonstrates the efficacy of combining AI and human expertise. The findings point to the potential of AI-assisted question generation in other domains, beyond mathematics, that require structured reasoning.

Practically, this framework provides a blueprint for generating high-quality, diverse datasets that challenge current AI models, facilitating more robust assessment and improvement. Furthermore, the ability to produce questions that are more difficult than standard datasets suggests this approach could be applied for advanced pedagogical purposes, potentially enhancing human learning processes.

The degradation is most evident for smaller models, whose pronounced relative performance drops point to room for improvement in training and in the diversity of synthetic data.

In future developments, scaling the process to generate larger datasets and improving computational efficiency are necessary. Reducing the dependency on human verification through advancements in automated validation tools and incorporating training-based feedback loops for the model are promising directions.

Conclusion

The paper presents a structured framework for generating challenging math questions, combining AI capabilities with human expertise. The proposed pipeline produces a substantially more difficult dataset, MATH², which tests how well advanced models compose skills. This work exemplifies how integrating AI with human input can overcome the limitations of each, providing a path toward scalable and diverse question generation across various domains.