Overview of "InfLLM: Training-Free Long-Context Extrapolation for LLMs with an Efficient Context Memory"

The paper introduces InfLLM, a method that lets pre-trained LLMs process long sequences without any additional training. The authors address the difficulty LLMs have with sequences longer than those seen during pre-training, along with the high computational and memory costs that such sequences incur. InfLLM uses a memory-based mechanism to extend the effective context window of an LLM efficiently.

Key Contributions

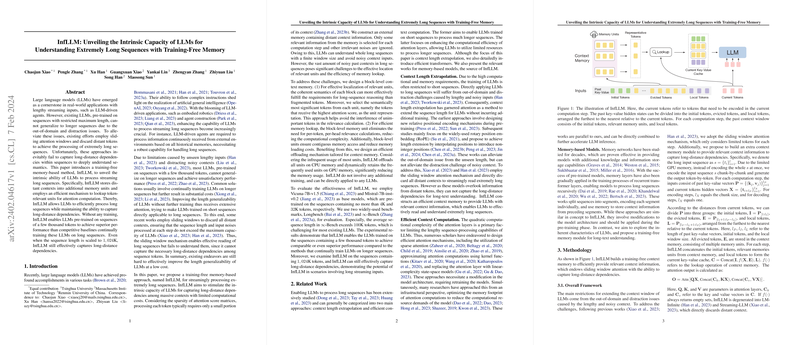

The paper identifies several challenges facing existing methods, notably the significant computational overhead of continually training LLMs on longer sequences and the risk that such training degrades performance on shorter ones. InfLLM addresses these issues by combining sliding window attention with a context memory module. This setup lets the LLM attend to the most relevant segments of the past context while ignoring irrelevant, noisy context that would otherwise distract it.
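The interaction between the sliding window and the memory can be illustrated with a small sketch. It is not the authors' implementation: the function name `stream_tokens` and the parameters `window_size` and `block_size` are hypothetical, tokens stand in for key-value vectors, and the stream is processed one token at a time for simplicity.

```python
from collections import deque


def stream_tokens(tokens, window_size, block_size):
    """Simulate a sliding window that evicts old tokens into block memory.

    Returns (local_window, memory_blocks, pending), where `pending` holds
    evicted tokens that do not yet fill a complete block.
    All names here are illustrative assumptions, not the paper's API.
    """
    window = deque()   # most recent tokens, attended to directly
    pending = []       # evicted tokens waiting to form a block
    memory = []        # completed blocks of contiguous tokens

    for tok in tokens:
        window.append(tok)
        if len(window) > window_size:
            # The oldest token leaves the local window and enters memory.
            pending.append(window.popleft())
            if len(pending) == block_size:
                memory.append(tuple(pending))
                pending = []
    return list(window), memory, pending


if __name__ == "__main__":
    window, memory, pending = stream_tokens(range(11), window_size=4, block_size=3)
    print("local window:", window)
    print("memory blocks:", memory)
    print("pending:", pending)
```

The local window stays at a fixed size however long the stream grows, while everything older is kept in blocks that can be looked up later instead of being attended to in full.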

InfLLM features:

- Block-Level Context Memory: InfLLM groups past key-value vectors into blocks, each covering a contiguous span of tokens. Blocks are cheaper to manage than per-token memory units, which significantly reduces computational cost and makes retrieval more efficient.

- Efficient Memory Lookup: Within each block, InfLLM dynamically selects only the most semantically significant tokens for relevance computations, improving both the hit rate of memory lookups and processing efficiency.

- Training-Free Implementation: Unlike many alternatives that require retraining, InfLLM operates without altering the underlying LLM architecture or requiring additional training, so it can be applied directly to existing LLMs.
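The block-level lookup described in the list above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: `MemoryBlock`, `build_blocks`, and `lookup` are hypothetical names, the per-token significance `scores` are assumed to be given, and relevance is taken to be the sum of dot products between the current queries and a block's representative keys.

```python
from dataclasses import dataclass
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


@dataclass
class MemoryBlock:
    start: int                     # position of the block's first token
    keys: List[Vector]             # key vectors of all tokens in the block
    representatives: List[Vector]  # subset of keys used for lookup only


def build_blocks(keys, scores, block_size, n_repr):
    """Group keys into contiguous blocks and pick representatives.

    `scores` is an assumed per-token significance score; the n_repr
    highest-scoring tokens of each block become its representatives.
    """
    blocks = []
    for start in range(0, len(keys), block_size):
        block_keys = list(keys[start:start + block_size])
        block_scores = scores[start:start + block_size]
        top = sorted(range(len(block_keys)),
                     key=lambda i: block_scores[i], reverse=True)[:n_repr]
        reps = [block_keys[i] for i in sorted(top)]
        blocks.append(MemoryBlock(start, block_keys, reps))
    return blocks


def lookup(blocks, queries, top_k):
    """Return the top_k most relevant blocks, in original token order."""
    def relevance(block):
        # Only representative keys are compared, not every key in the block.
        return sum(dot(q, r) for q in queries for r in block.representatives)

    ranked = sorted(blocks, key=relevance, reverse=True)
    return sorted(ranked[:top_k], key=lambda b: b.start)


if __name__ == "__main__":
    keys = [[1, 0], [0.9, 0], [0, 1], [0, 0.8], [-1, 0], [-0.9, 0]]
    scores = [0.9, 0.1, 0.2, 0.8, 0.5, 0.4]
    blocks = build_blocks(keys, scores, block_size=2, n_repr=1)
    hits = lookup(blocks, queries=[[0, 1]], top_k=1)
    print([b.start for b in hits])
```

Comparing queries against a few representatives per block, rather than every stored key, is what keeps the cost of a lookup low; the full keys and values of a block are only needed once that block has been selected.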

Experimental Evaluation

InfLLM was evaluated with two base LLMs, Mistral-7B and Llama-3-8B, which were pre-trained on input sequences of up to 32K and 8K tokens, respectively. Performance was measured on the ∞-Bench and LongBench benchmarks, which cover challenging tasks such as question answering and summarization over sequences averaging over 100K tokens. InfLLM performed comparably to or better than models that undergo further training on extended sequences.

Table 1 of the results section shows that InfLLM achieves competitive results with a significantly smaller computational budget. Notably, the results on sequences of up to 1,024K tokens indicate that InfLLM can handle extremely long contexts without excessive memory usage.

Implications and Future Directions

InfLLM makes it practical to process long streaming inputs efficiently with limited computational resources. This is particularly relevant for real-world applications such as autonomous agents, where continuous input processing is crucial.

Looking forward, inference speed could be improved further by integrating InfLLM with advanced inference engines, and CPU memory consumption could be reduced with memory compression strategies. Exploring training methods for the context memory module and investigating alternative memory unit representations could also yield further performance gains.

In conclusion, InfLLM extends the context capabilities of LLMs in a resource-efficient manner and reflects a shift toward modular, non-intrusive techniques for model enhancement. The work provides a scalable approach to length generalization and a stepping stone for future work on memory-efficient LLM processing.