FACTS About Building Retrieval Augmented Generation-based Chatbots

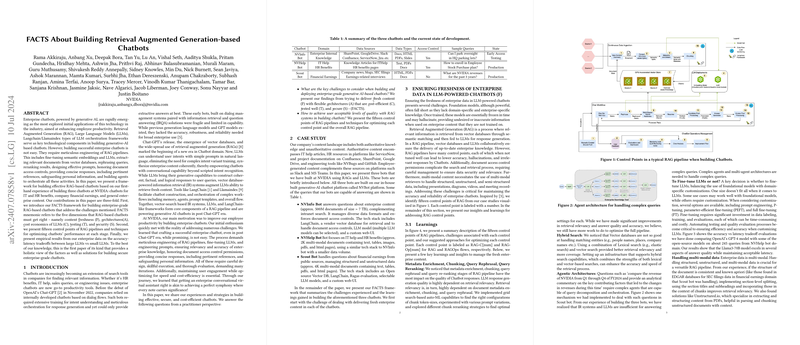

The paper "FACTS About Building Retrieval Augmented Generation-based Chatbots" by researchers at NVIDIA presents a framework and a set of best practices for building enterprise-grade chatbots with Retrieval Augmented Generation (RAG). It is an empirical study based on the authors' experience building three chatbots for different functions within NVIDIA: IT and HR support, company financial earnings, and general enterprise content inquiries. The authors put forth the FACTS framework, which covers five areas they consider essential to RAG-based chatbot success: Freshness, Architectures, Cost economics, Testing, and Security.

Introduction

Enterprise chatbots have transitioned from basic dialog flow systems to sophisticated engines powered by Generative AI technologies. The introduction of OpenAI's ChatGPT marked a significant shift from traditional intent recognition bots to those capable of generating natural, coherent responses. Vector databases, combined with RAG methods, allow LLMs to draw on current, domain-specific knowledge efficiently.

Building enterprise-grade chatbots on this stack is a multifaceted problem. Effective implementation demands not only advanced technology but also a meticulous approach to engineering, maintaining, and optimizing RAG pipelines.

The FACTS Framework

The authors propose the FACTS mnemonic to encapsulate the essential dimensions for building successful RAG-based chatbots:

- Freshness (F): Ensuring the chatbot retrieves and generates responses based on the most up-to-date information.

- Architectures (A): Developing a flexible and modular infrastructure capable of integrating various LLMs and vector databases.

- Cost Economics (C): Balancing the expense of deploying enterprise chatbots against their performance and scalability.

- Testing (T): Conducting rigorous, automated testing to maintain high standards of accuracy and security.

- Security (S): Implementing robust measures to safeguard sensitive data and prevent unauthorized access.

Ensuring Content Freshness

A RAG pipeline's efficacy is largely dictated by its ability to maintain data relevancy and freshness. This involves several intricate steps:

- Metadata Enrichment, Chunking, Query Rephrasal, and Reranking: Ensuring that retrieved chunks are contextually correct and relevant.

- Hybrid Search Mechanisms: Employing both lexical and vector searches to enhance retrieval relevancy.

- Agentic Architectures: Developing agents capable of decomposing complex queries and orchestrating sequential information retrieval and synthesis.

- Multi-modal Data Handling: Managing structured, unstructured, and semi-structured data efficiently, a task crucial for enterprise environments.
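
The hybrid search idea in the list above can be illustrated with a small sketch. It merges a lexical ranking and a vector ranking using reciprocal rank fusion (RRF). RRF is one common fusion method chosen here for illustration; this summary does not say which fusion method the authors use. The document IDs, the function name `reciprocal_rank_fusion`, and the constant `k=60` are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one ranking.

    Each document scores sum(1 / (k + rank)) over the lists it appears in,
    with rank starting at 1. k=60 is a commonly used default, not a value
    taken from the paper.
    """
    scores = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by document ID.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a keyword (lexical) index and a vector index.
lexical_hits = ["doc_pto_policy", "doc_benefits", "doc_vpn_setup"]
vector_hits = ["doc_benefits", "doc_holiday_calendar", "doc_pto_policy"]

fused = reciprocal_rank_fusion([lexical_hits, vector_hits])
print(fused)
```

Documents that appear in both lists rise to the top, while documents found by only one retriever are still kept as candidates for a later reranking step.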

Flexible Architectures

Flexibility in architecture is vital given the rapid advancements in AI technologies. This flexibility is achieved by:

- Developing platforms that support various LLMs, vector databases, and orchestration tools.

- Implementing a multi-bot architecture where domain-specific bots work alongside an overarching enterprise chatbot.

- Facilitating low-code/no-code solutions for rapid development and iteration.

The NVBot platform at NVIDIA exemplifies this approach, promoting modularity and integration flexibility to support diverse enterprise needs.
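
A multi-bot arrangement of this kind can be sketched as a router that forwards each query to a domain-specific bot and falls back to a general enterprise bot. This is a minimal illustration under stated assumptions, not the NVBot design: the class names `DomainBot` and `EnterpriseRouter`, the keyword-overlap routing rule, and the bot names are all hypothetical, and a production system would more likely route with a classifier or an LLM.

```python
import re
from dataclasses import dataclass
from typing import Callable, FrozenSet, List


@dataclass(frozen=True)
class DomainBot:
    name: str
    keywords: FrozenSet[str]
    answer: Callable[[str], str]  # stand-in for a full RAG pipeline


class EnterpriseRouter:
    """Sends a query to the best-matching domain bot, else to a fallback bot."""

    def __init__(self, domain_bots: List[DomainBot], fallback: DomainBot):
        self.domain_bots = domain_bots
        self.fallback = fallback

    def route(self, query: str) -> DomainBot:
        words = set(re.findall(r"[a-z0-9]+", query.lower()))
        best, best_overlap = self.fallback, 0
        for bot in self.domain_bots:
            overlap = len(bot.keywords & words)
            if overlap > best_overlap:  # strict: first bot wins a tie
                best, best_overlap = bot, overlap
        return best

    def ask(self, query: str) -> str:
        bot = self.route(query)
        return f"[{bot.name}] {bot.answer(query)}"


# Hypothetical bots; each answer function would wrap its own retriever and LLM.
it_bot = DomainBot("it_help", frozenset({"vpn", "password", "laptop"}),
                   lambda q: "IT answer")
finance_bot = DomainBot("finance", frozenset({"revenue", "earnings", "quarter"}),
                        lambda q: "Finance answer")
general_bot = DomainBot("enterprise", frozenset(), lambda q: "General answer")

router = EnterpriseRouter([it_bot, finance_bot], fallback=general_bot)
print(router.ask("How do I reset my VPN password?"))
```

Because each bot is just a name, a routing signal, and an answer function, a bot's LLM or vector database can be swapped without touching the router.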

Cost Economics

Managing the cost of LLM deployments is complex due to variations in model sizes and performance levels. The authors highlight key strategies:

- Bigger vs. Smaller Models: Evaluating the trade-offs between using large, commercial LLMs and smaller, open-source models. Smaller models often offer similar accuracy with significantly reduced latency and cost.

- LLM Gateway: Centralizing LLM API calls through an internal gateway to manage subscriptions, costs, and data security.

The authors' empirical evaluation showed that models such as Llama-2-70B perform comparably to larger, more expensive models like GPT-4 in specific enterprise use cases, as shown in Figure 6 of the paper.
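
The LLM gateway described above can be sketched as a thin wrapper that every team calls instead of calling model providers directly, so that usage and spend are recorded in one place. Everything named here is an assumption made for illustration: the `LLMGateway` class, the stub backends, the prices, and the whitespace-based token count (real gateways use the provider's tokenizer and reported usage).

```python
from collections import defaultdict
from typing import Callable, Dict


class LLMGateway:
    """Central entry point for LLM calls that tracks spend per team."""

    def __init__(self, backends: Dict[str, Callable[[str], str]],
                 price_per_1k_tokens: Dict[str, float]):
        self.backends = backends
        self.prices = price_per_1k_tokens
        self.spend = defaultdict(float)  # team name -> accumulated cost

    def complete(self, team: str, model: str, prompt: str) -> str:
        if model not in self.backends:
            raise KeyError(f"model {model!r} is not registered with the gateway")
        response = self.backends[model](prompt)
        # Rough token estimate: whitespace-separated words in prompt and response.
        tokens = len(prompt.split()) + len(response.split())
        self.spend[team] += tokens / 1000 * self.prices[model]
        return response


# Stub backends standing in for real provider clients; prices are made up.
gateway = LLMGateway(
    backends={
        "large-model": lambda prompt: "ok done",
        "small-model": lambda prompt: "ok",
    },
    price_per_1k_tokens={"large-model": 2.0, "small-model": 0.2},
)

gateway.complete("hr", "large-model", "a b c")
gateway.complete("it", "small-model", "a b c")
print(dict(gateway.spend))
```

Routing all calls through one object also gives a single place to add access checks, logging, or redaction of sensitive data before a prompt leaves the company.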

Rigorous Testing

Due to the complex nature of generative models, thorough testing is paramount:

- Security Testing: Automating security checks to maintain development pace while protecting against vulnerabilities.

- Prompt Sensitivity: Ensuring that changes in prompts are thoroughly tested to prevent degradation of response quality.

- Feedback Loops: Incorporating user feedback through Reinforcement Learning from Human Feedback (RLHF) to continuously enhance model performance.
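
The prompt sensitivity point above lends itself to an automated regression check: run a fixed set of questions through the old and the new prompt, and block the change if quality drops. The sketch below assumes a simple keyword-based pass criterion and a stub model named `fake_llm`; the function names, the golden set, and the policy ID are hypothetical, and a real suite would call the deployed model and use a stronger scoring method.

```python
def pass_rate(generate, prompt_template, golden_set):
    """Fraction of golden cases whose answer contains every required term."""
    passed = 0
    for case in golden_set:
        answer = generate(prompt_template.format(question=case["question"]))
        if all(term.lower() in answer.lower() for term in case["must_contain"]):
            passed += 1
    return passed / len(golden_set)


def check_prompt_change(generate, baseline, candidate, golden_set, tolerance=0.0):
    """Return (ok, baseline_rate, candidate_rate); ok is False on a regression."""
    base = pass_rate(generate, baseline, golden_set)
    cand = pass_rate(generate, candidate, golden_set)
    return cand >= base - tolerance, base, cand


def fake_llm(prompt):
    # Stand-in for a real model call: it only answers well when told to cite policy.
    if "cite the policy" in prompt.lower():
        return "Per policy HR-12, employees accrue 20 days of PTO."
    return "You get some time off."


golden_set = [
    {"question": "How much PTO do I get?", "must_contain": ["20 days", "HR-12"]},
]
baseline_prompt = "Answer and cite the policy. Question: {question}"
candidate_prompt = "Answer briefly. Question: {question}"

result = check_prompt_change(fake_llm, baseline_prompt, candidate_prompt, golden_set)
print(result)  # (False, 1.0, 0.0): the candidate prompt regresses
```

Running such a check in continuous integration makes a prompt edit reviewable in the same way as a code change.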

Security Measures

Maintaining the security and integrity of enterprise chatbots is crucial:

- Access Control Compliance: RAG systems must honor document access control lists to prevent unauthorized data access.

- Guardrails: Implementing mechanisms to prevent hallucinations, toxicity, and sensitive data leaks.

- Content Security: Ensuring sensitive information is adequately filtered and protected within the RAG pipeline.
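
Access control compliance, as described in the list above, can be sketched as a filter applied to retrieved chunks before any of them reach the LLM prompt. The `Chunk` type, the group names, and the `filter_by_acl` function are assumptions made for illustration; in practice the access control lists would come from the source document system.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List


@dataclass(frozen=True)
class Chunk:
    doc_id: str
    text: str
    allowed_groups: FrozenSet[str]  # groups permitted to read the source document


def filter_by_acl(chunks: Iterable[Chunk], user_groups: Iterable[str]) -> List[Chunk]:
    """Keep only chunks whose document shares at least one group with the user."""
    groups = frozenset(user_groups)
    return [chunk for chunk in chunks if chunk.allowed_groups & groups]


# Hypothetical retrieval results for a single query.
retrieved = [
    Chunk("handbook", "PTO accrues monthly.", frozenset({"all-employees"})),
    Chunk("comp-bands", "Salary bands by level.", frozenset({"hr-admins"})),
    Chunk("it-runbook", "VPN reset steps.", frozenset({"all-employees", "it-staff"})),
]

visible = filter_by_acl(retrieved, user_groups=["all-employees"])
print([chunk.doc_id for chunk in visible])
```

Filtering before generation, rather than after, means restricted text never enters the prompt, so the model cannot leak it in a response.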

Implications and Future Directions

The research provides a solid foundation for building robust, efficient, and secure RAG-based chatbots. However, several areas require further exploration:

- Agentic Architectures: Enhancing capabilities to handle complex, analytical queries.

- Real-time Data Summarization: Efficiently updating and summarizing large volumes of enterprise data.

- Auto-ML for RAG Pipelines: Developing automation tools to optimize the various control points within the RAG pipelines continuously.

- Advanced Evaluation Frameworks: Creating more sophisticated methods to assess the quality of chatbot interactions.

Conclusion

The FACTS framework provides a comprehensive and practical approach to developing secure, efficient, and effective RAG-based enterprise chatbots. By addressing the multifaceted challenges of freshness, architecture flexibility, cost economics, rigorous testing, and robust security measures, this paper makes significant contributions towards advancing the field of enterprise AI solutions.