Distilling System 2 into System 1

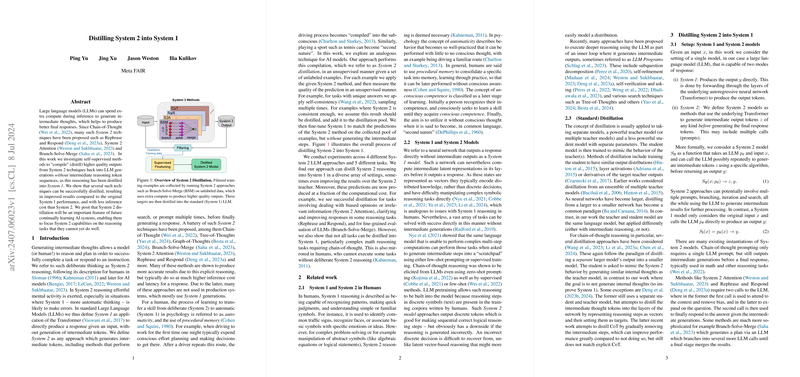

The paper "Distilling System 2 into System 1" introduces an approach, termed System 2 distillation, for improving both the output quality and the inference efficiency of LLMs. The method trains a model to produce the higher-quality outputs obtained from explicit intermediate reasoning steps, as used in System 2 techniques, in a streamlined System 1 fashion that generates the final result directly, without those intermediate steps.

Overview of System 1 and System 2

System 1 and System 2 are concepts based on cognitive psychology, distinguishing between automatic, quick, and often subconscious responses (System 1) and deliberate, effortful reasoning (System 2). In LLMs, System 1 corresponds to models generating a response directly given an input. Conversely, System 2 involves methods that prompt the model to generate intermediate reasoning steps or run multiple tailored prompts before arriving at a final response. Prior work has characterized and exploited System 2 techniques, such as Chain-of-Thought (CoT) and Branch-Solve-Merge (BSM), for their improved performance on specific tasks albeit at a higher computational cost.

Proposed Approach: System 2 Distillation

The core contribution of this paper is System 2 distillation: a process for condensing the reasoning benefits of System 2 methods into the direct, efficient responses of System 1. The process is self-supervised, learning from unlabeled data by fine-tuning the LLM on its own curated System 2 outputs. The key steps are:

- Generating System 2 Responses: For a given set of unlabeled inputs, System 2 methods are employed to generate higher quality outputs.

- Unsupervised Curation: A consistency criterion is used to filter and select high-quality responses. This can involve self-consistency checks through multiple generation sampling or perturbations in the input to ensure reliability.

- Distillation and Fine-tuning: The curated high-quality responses are then used to fine-tune the LLM, enabling it to produce superior outputs directly in a System 1 manner.
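
The three steps above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: `system2_answer` is a hypothetical callable standing in for any System 2 pipeline that returns only a final answer, the majority-vote rule is one possible consistency criterion, and the `num_samples` and `min_agreement` values are placeholders rather than settings from the paper.

```python
from collections import Counter
from typing import Callable, Iterable, List, Optional, Tuple


def majority_answer(answers: List[str], min_agreement: float = 0.75) -> Optional[str]:
    """Return the most common answer if enough samples agree, else None."""
    if not answers:
        return None
    answer, count = Counter(answers).most_common(1)[0]
    return answer if count / len(answers) >= min_agreement else None


def build_distillation_set(
    inputs: Iterable[str],
    system2_answer: Callable[[str], str],  # hypothetical System 2 pipeline
    num_samples: int = 8,                  # placeholder value, an assumption
    min_agreement: float = 0.75,           # placeholder value, an assumption
) -> List[Tuple[str, str]]:
    """Pair each raw input with its self-consistent System 2 final answer."""
    dataset = []
    for x in inputs:
        # Step 1: sample several System 2 responses for the same input.
        samples = [system2_answer(x) for _ in range(num_samples)]
        # Step 2: keep the input only if the sampled answers agree.
        target = majority_answer(samples, min_agreement)
        if target is not None:
            # Step 3 input: (raw input, final answer), no reasoning trace.
            dataset.append((x, target))
    return dataset
```

The resulting pairs contain only the original input and the final answer, so fine-tuning on them teaches the model to answer directly. Inputs whose samples disagree are dropped rather than relabeled, which trades dataset size for label quality.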

Empirical Evaluation

The researchers conducted extensive experiments across various System 2 methods and tasks to evaluate the efficacy of their distillation approach:

- Rephrase and Respond (RaR): On last letter concatenation and coin flip reasoning, distillation significantly improved System 1 performance without the intermediate rephrasing step that RaR requires, reaching nearly perfect accuracy on last letter concatenation.

- System 2 Attention (S2A): For the TriviaQA task, where inputs can contain irrelevant or biasing context, System 2 distillation markedly improved accuracy while shortening the generated output.

- Branch-Solve-Merge (BSM): Focusing on LLM-as-a-judge tasks for evaluating candidate responses, distillation yielded improvements in human agreement and consistency on benchmarks like OASST2 and MT-bench, while drastically curbing computational costs.

- Chain-of-Thought (CoT): The distillation approach did not succeed in capturing CoT's advantages in System 1 responses for complex reasoning tasks such as GSM8k. This result marks the limits of System 2 distillation on highly intricate reasoning tasks.
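
To make the two symbolic reasoning tasks in the RaR experiments concrete, the sketch below computes their reference answers and an exact-match accuracy. It is illustrative only: the function names are hypothetical, and the paper's prompt format and scoring details may differ.

```python
from typing import List


def last_letter_concat(words: List[str]) -> str:
    """Concatenate the last letter of each word, skipping empty strings."""
    return "".join(w[-1] for w in words if w)


def coin_is_heads(flips: List[bool], starts_heads: bool = True) -> bool:
    """Track a coin through a list of actions; True means the coin was flipped."""
    # An even number of flips leaves the coin in its starting state.
    even_flips = sum(flips) % 2 == 0
    return starts_heads == even_flips


def exact_match_accuracy(predictions: List[str], references: List[str]) -> float:
    """Fraction of predictions equal to their reference after trimming whitespace."""
    if not references or len(predictions) != len(references):
        raise ValueError("predictions and references must be non-empty and aligned")
    hits = sum(p.strip() == r.strip() for p, r in zip(predictions, references))
    return hits / len(references)
```

Both tasks have a single deterministic answer per input, which is what makes agreement between sampled outputs easy to check on them.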

Implications and Future Directions

The paper underscores several significant implications:

- Efficiency Gains: System 2 distillation can substantially lower computational overhead, making high-performing LLMs more feasible for deployment in production environments.

- Performance and Robustness: The successful distillation of methods like S2A and BSM enhances the robustness of LLMs in handling biases and complex evaluation tasks while maintaining performance parity with their System 2 counterparts.

- Limits and Nuances: The difficulties encountered in distilling CoT reasoning underscore the need for further research into distinguishing which System 2 tasks are amenable to such distillation and developing tailored techniques for those that are not.

On the theoretical side, System 2 distillation parallels human cognition, in which deliberate practice eventually becomes intuitive response. Future theoretical and empirical work could broaden this research by investigating continual learning loops in which LLMs autonomously identify and distill successful reasoning patterns.

Conclusion

System 2 distillation is a self-supervised method for improving the efficiency and performance of LLMs by internalizing reasoning that would otherwise be generated explicitly. This makes deployment more practical and mirrors the way humans turn deliberate reasoning into automatic skill. While challenges remain, particularly for tasks requiring deeper reasoning such as those addressed by CoT, the initial results are promising and provide a foundation for future work.