Scaling Transformers to 1M Tokens and Beyond with Recurrent Memory Transformer

The paper "Scaling Transformer to 1M Tokens and Beyond with RMT" addresses a significant limitation in transformer models: the quadratic scaling of computational complexity with respect to input size. The authors propose the Recurrent Memory Transformer (RMT), which augments existing transformer models with a recurrent memory mechanism to extend their input context length while achieving linear computational scaling.

Problem Statement

The inherent inefficiency of the quadratic complexity in the self-attention mechanism of transformers poses a barrier in handling extremely long sequences. This issue restricts the ability of transformers to model tasks requiring extensive context, such as understanding whole documents, remembering long sequences of facts, or processing complex reasoning tasks.

Proposed Solution: Recurrent Memory Transformer (RMT)

The RMT introduces an innovative memory-augmented segment-level recurrent architecture that enables transformers to process input sequences beyond typical length limitations. The essence of RMT lies in integrating memory tokens into transformer models such as BERT and GPT-2, requiring no architectural modifications to the underlying model. These memory tokens facilitate storing segment-level information across long spans, employing recurrence to pass information between sequence segments.

Methodology and Results

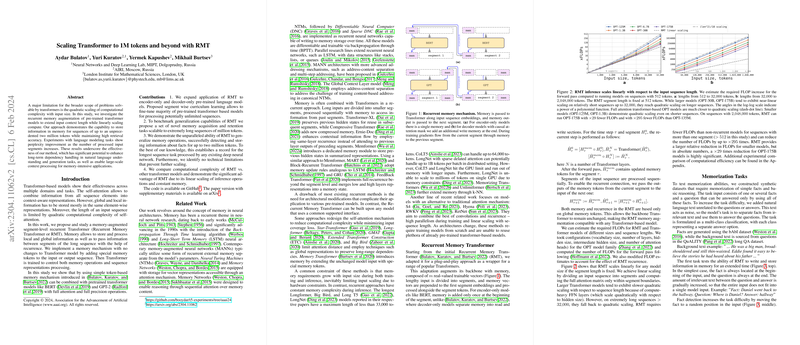

The paper demonstrates RMT's capabilities across several experimental setups, prominently showcasing its ability to handle sequences of up to two million tokens. This is achieved by dividing long inputs into manageable segments and using the memory mechanism to bridge sequence gaps that traditional models would fail to connect. Results from LLMing tasks reveal significant improvements in perplexity as more input segments are processed. This improvement illustrates RMT's effectiveness in capturing long-term dependencies in text, crucial for natural language understanding and generation tasks.

The paper further introduces a novel set of memory-intensive tasks to benchmark generalization capabilities, extending to sequences containing millions of tokens. RMT successfully memorized, detected, and inferred information from these sequences, establishing a notable capability for extremely long sequence tasks that outperforms existing deep neural network architectures.

Computational Efficiency

A comparative analysis revealed that RMT offers substantial computational advantages by providing linear scaling of inference operations and maintaining a constant memory footprint. This efficiency is critical when scaling models for practical applications due to hardware limitations, such as GPU memory constraints. The paper underscores the specific advantage of RMT in terms of FLOPs, demonstrating significant reductions even with large models like OPT-175B.

Future Directions and Implications

The development of the Recurrent Memory Transformer opens new avenues for increasing the input capacity of LLMs without prohibitive increases in computational cost. The implications are far-reaching, with potential applications in memory-intensive scenarios such as document summarization, question answering over extensive corpora, and interactive dialogues spanning extensive topics.

The theoretical implications suggest that by leveraging recurrent mechanisms, transformers can go beyond their traditional input size limitations, mitigating the need to train a new model from scratch every time a larger context is required. This advancement provides a blueprint for future models to extend their processing capabilities more flexibly and efficiently.

Conclusion

This paper positions the Recurrent Memory Transformer as a transformative approach to managing long sequences in pre-trained LLMs. By efficiently handling extensive contexts that exceed millions of tokens, RMT offers a practical, scalable solution to a problem that has long hindered the capacity of transformer-based architectures. The contribution not only enhances the scope and function of existing models but also provides an effective framework for future exploration in long-context sequence processing.