An Expert Evaluation of "video-SALMONN: Speech-Enhanced Audio-Visual LLMs"

The paper "video-SALMONN: Speech-Enhanced Audio-Visual LLMs" introduces video-SALMONN, an audio-visual large language model (av-LLM) that adds speech understanding to video analysis. Its main contribution is to handle a broad set of audio-visual elements together, with particular emphasis on speech, a capability that remains underexplored in current av-LLMs.

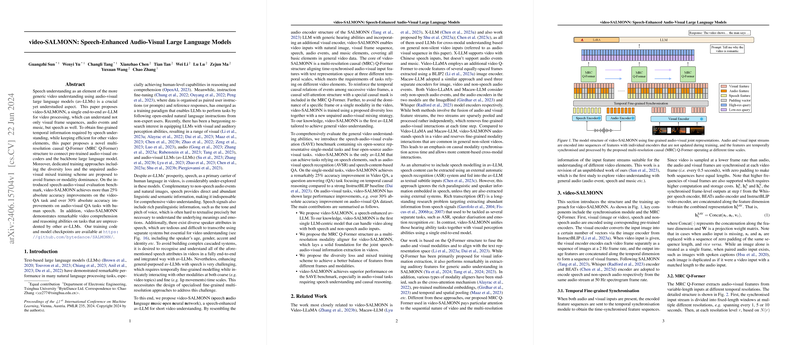

Model and Training Structure

At the core of video-SALMONN's design is a multi-resolution causal Q-Former (MRC Q-Former), which provides fine-grained temporal modeling. Audio and visual inputs are first encoded by specialized encoders and synchronized in time, and the resulting features are then aligned with the text representation space. The multi-resolution approach works at several temporal scales, since different video components such as speech, audio events, music, and accompanying visuals call for different time resolutions.
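To make the idea of several temporal scales concrete, the following is a minimal sketch of how a sequence of synchronized frames could be grouped into windows at different resolutions, plus the lower-triangular mask that "causal" usually implies. It is an illustration under stated assumptions, not the paper's implementation: the function names and the window sizes `[1, 5, 10]` are hypothetical, and the real model applies learned attention to feature vectors rather than grouping bare indices.

```python
from typing import Dict, List


def split_into_windows(num_frames: int, window_size: int) -> List[List[int]]:
    """Group frame indices 0..num_frames-1 into consecutive, non-overlapping windows."""
    if num_frames < 0 or window_size <= 0:
        raise ValueError("num_frames must be >= 0 and window_size must be > 0")
    return [
        list(range(start, min(start + window_size, num_frames)))
        for start in range(0, num_frames, window_size)
    ]


def multi_resolution_windows(
    num_frames: int, window_sizes: List[int]
) -> Dict[int, List[List[int]]]:
    """Return the window layout for every resolution level, keyed by window size."""
    return {k: split_into_windows(num_frames, k) for k in window_sizes}


def causal_mask(num_positions: int) -> List[List[bool]]:
    """mask[i][j] is True when position i may attend to position j (only j <= i)."""
    return [[j <= i for j in range(num_positions)] for i in range(num_positions)]


if __name__ == "__main__":
    # Hypothetical window sizes: small windows give fine temporal detail,
    # large windows summarize longer spans of the video.
    layout = multi_resolution_windows(num_frames=10, window_sizes=[1, 5, 10])
    for size, windows in layout.items():
        print(f"window size {size}: {len(windows)} windows")
    print(causal_mask(3))
```

Small windows keep short events such as individual words distinguishable, while large windows summarize slower content such as scene changes or background music. The causal mask restricts each position to earlier positions, which is one common way to preserve temporal order.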

The training strategy combines a diversity loss with an unpaired audio-visual mixing scheme to keep the model from being dominated by particular frames or by a single modality. The aim is a balanced use of speech and non-speech audio cues, so that the model's understanding of a video draws on its auditory content as well as its visuals.
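As a rough illustration of what a diversity term can look like, the sketch below penalizes pairwise cosine similarity among a set of output vectors, so that the penalty is high when they all encode the same thing. This is one common formulation and is assumed here for illustration. The paper's exact definition may differ, and the names `cosine_similarity` and `diversity_penalty` are hypothetical. The unpaired mixing scheme is not covered.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors; 0.0 if either has zero norm."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def diversity_penalty(queries: Sequence[Sequence[float]]) -> float:
    """Mean cosine similarity over all distinct pairs of vectors.

    Assumed formulation: minimizing this value pushes the vectors apart,
    so they are less likely to all describe the same frame.
    """
    n = len(queries)
    if n < 2:
        return 0.0
    total = 0.0
    for i in range(n):
        for j in range(i + 1, n):
            total += cosine_similarity(queries[i], queries[j])
    num_pairs = n * (n - 1) / 2
    return total / num_pairs


if __name__ == "__main__":
    redundant = [[1.0, 0.0], [1.0, 0.0]]  # identical vectors
    diverse = [[1.0, 0.0], [0.0, 1.0]]    # orthogonal vectors
    print(diversity_penalty(redundant), diversity_penalty(diverse))
```

In training, a term like this would be added to the main objective with a small weight. The weight is a tuning choice and is not specified here.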

Performance and Benchmarking

On the newly introduced Speech-Audio-Visual Evaluation (SAVE) benchmark, video-SALMONN outperforms existing baselines by a wide margin, with absolute accuracy gains of more than 25% on video question answering (Video QA) and more than 30% on audio-visual QA (AVQA) tasks involving human speech. The AVQA results point to a strong capability for speech-visual co-reasoning, an area where its predecessors fall short.

Related Work and Comparative Analysis

In contrast to related models such as Video-LLaMA and Macaw-LLM, which lack robust speech integration, video-SALMONN supports synchronized speech recognition and comprehension in video contexts. Where those models often treat the audio and visual streams as loosely coupled, video-SALMONN emphasizes fine-grained interaction between modalities over time, which is reflected in its improved causal reasoning over video.

Implications and Future Directions

Integrating speech into LLMs for video processing is an important step, since it enables deeper semantic and paralinguistic understanding. In practice, this could improve performance in applications where speech content plays a central role, such as multimedia presentations, interactive tutorials, and educational technologies.

Theoretically, video-SALMONN opens up new research directions in multimodal machine learning, particularly in tasks requiring coordinated processing of audio, visual, and textual data. Moreover, the model's architecture could be extended to incorporate more complex audio-visual interactions and potentially scale to more nuanced scenarios involving diverse languages and dialects.

Future work could build on video-SALMONN's architecture to move toward holistic multimedia understanding, with benefits for real-time applications such as augmented reality and virtual assistants, where speech comprehension has to be combined with visual context.

In summary, video-SALMONN marks a significant stride in the integration of speech into multimodal LLMs, presenting opportunities and inviting future exploration into comprehensive audio-visual language understanding.