Evaluation of LLMs in Bug Detection within Large Codebases: The BICS Benchmark

The research paper "Bug In The Code Stack: Can LLMs Find Bugs In Large Python Code Stacks?" investigates how well large language models (LLMs) detect syntax bugs within extensive Python codebases. It introduces the Bug In The Code Stack (BICS) benchmark, a new tool for assessing the debugging capability of LLMs in long-context programming scenarios. The work is timely given the increasing integration of LLMs into software development processes, where accurate bug identification is a critical need.

Methodological Advancements

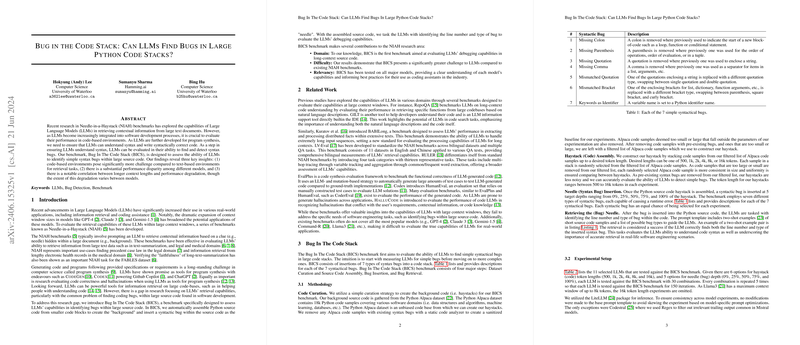

The core methodology of the paper revolves around the construction of Python code stacks, referred to as "haystacks," and the systematic insertion of a syntactic bug, termed the "needle." Code samples from the Python Alpaca dataset are curated and sanitized to ensure uniformity and the absence of pre-existing bugs. The benchmark evaluates LLMs on their accuracy in identifying seven distinct types of syntactic bugs at various depths within the code stack. The setup varies context length from 500 to 16k tokens and covers 11 prominent LLMs to provide a comprehensive evaluation framework.
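The haystack-and-needle construction can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the whitespace-based token estimate, and the choice of a missing-colon bug as the only needle type are all assumptions made for illustration.

```python
def approx_tokens(text: str) -> int:
    # Assumption: crude whitespace token count; a real setup would use a model tokenizer.
    return len(text.split())


def is_clean(source: str) -> bool:
    """Sanitization check: keep only samples that compile without a SyntaxError."""
    try:
        compile(source, "<sample>", "exec")
        return True
    except SyntaxError:
        return False


def build_haystack(snippets: list[str], token_budget: int) -> list[str]:
    """Collect clean snippets until the approximate token budget is reached."""
    chosen, used = [], 0
    for snippet in snippets:
        if not is_clean(snippet):
            continue
        cost = approx_tokens(snippet)
        if chosen and used + cost > token_budget:
            break
        chosen.append(snippet)
        used += cost
    return chosen


def insert_needle(snippets: list[str], depth: float) -> tuple[str, int]:
    """Inject one missing-colon bug near a relative depth in [0, 1].

    Returns the buggy source and the 1-based line number of the bug.
    """
    lines = "\n\n".join(snippets).split("\n")
    # Candidate lines are those ending in a colon (def, if, for, ...).
    candidates = [i for i, ln in enumerate(lines) if ln.rstrip().endswith(":")]
    if not candidates:
        raise ValueError("no line ending in ':' to corrupt")
    target = depth * (len(lines) - 1)
    idx = min(candidates, key=lambda i: abs(i - target))
    lines[idx] = lines[idx].rstrip()[:-1]  # drop the trailing colon
    return "\n".join(lines), idx + 1


# Hypothetical toy samples standing in for curated dataset entries.
SAMPLES = [
    "def add(a, b):\n    return a + b",
    "def sub(a, b):\n    return a - b",
    "def mul(a, b):\n    return a * b",
]
```

Calling `insert_needle(build_haystack(SAMPLES, 100), depth=0.5)` returns a stack whose middle function header has lost its colon, together with the line number a model would be expected to report. Sweeping `token_budget` and `depth` over a grid gives the kind of context-length and needle-depth variation the benchmark describes.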

Empirical Findings

The analysis reveals a significant performance disparity across LLMs, with GPT-4o and GPT-4-Turbo emerging as the leading models in accuracy and consistency. The gap is most visible in the robustness of the GPT-4 models to changes in context length and needle depth. The difficulty of the BICS benchmark is evident from a comparison with text-based benchmarks such as BABILong: models such as GPT-3.5-Turbo achieved markedly lower accuracy on BICS. This underscores the complexity of code-based benchmarks, particularly in detecting subtler syntactic errors.

The decline in performance as context length increases, together with the uneven retrieval accuracy across bug types, highlights the nuanced challenges of real-world coding scenarios. Notably, missing colon and missing parenthesis bugs were identified with higher accuracy, whereas missing quotation and mismatched bracket bugs posed greater challenges to the models.
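A breakdown like the one above can be produced by grouping per-trial outcomes by bug type or by context length. The sketch below assumes a hypothetical record format (`bug_type`, `context_tokens`, `correct`) and uses made-up toy records, not results from the paper.

```python
from collections import defaultdict


def accuracy_by(records: list[dict], key: str) -> dict:
    """Group trial records by `key` and return the fraction marked correct per group."""
    totals = defaultdict(lambda: [0, 0])  # group -> [num_correct, num_trials]
    for record in records:
        bucket = totals[record[key]]
        bucket[0] += int(record["correct"])
        bucket[1] += 1
    return {group: hits / n for group, (hits, n) in totals.items()}


# Toy records for illustration only; these are not measurements from the paper.
TRIALS = [
    {"bug_type": "missing_colon", "context_tokens": 500, "correct": True},
    {"bug_type": "missing_colon", "context_tokens": 16000, "correct": True},
    {"bug_type": "mismatched_bracket", "context_tokens": 500, "correct": True},
    {"bug_type": "mismatched_bracket", "context_tokens": 16000, "correct": False},
]

by_type = accuracy_by(TRIALS, "bug_type")
by_length = accuracy_by(TRIALS, "context_tokens")
```

The same helper works for any grouping field, so adding a `depth` field to each record would yield the per-depth view as well.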

Implications and Future Prospects

The implications of the BICS benchmark are both theoretical and practical. The research exposes the limitations of current LLMs in large-scale bug detection, suggesting areas for improvement in model development and optimization. From a practical perspective, the benchmark helps software developers select appropriate models for debugging tasks, thereby enhancing overall software robustness and reliability.

Looking ahead, the paper outlines several promising directions for extending the BICS benchmark. Future work could include runtime errors and support for additional programming languages such as C++ and Rust. This would address more advanced coding challenges related to memory management and ownership, further broadening the utility of LLMs across diverse programming ecosystems.

Conclusion

In summary, the paper makes a significant contribution to the evaluation of LLMs in debugging large Python codebases through the BICS benchmark. Its investigation of model capabilities against intricate syntactic bugs provides a foundation for subsequent advances in model design and application in software engineering. By presenting a detailed analysis of model performance and identifying key challenges, the paper paves the way for future improvements in AI-assisted software development.