DebugBench: Evaluating Debugging Capability of LLMs

The paper introduces DebugBench, a benchmark designed to evaluate the debugging capabilities of large language models (LLMs). While LLMs have advanced significantly in code generation, their debugging abilities remain comparatively unexplored. The paper addresses this gap with a rigorous framework for assessing LLMs on debugging tasks, covering a wide range of bug types across multiple programming languages.

Key Contributions

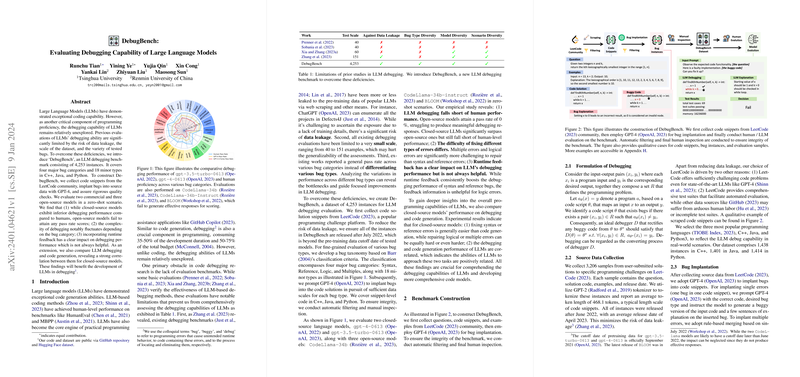

The authors present a benchmark of 4,253 instances spanning four major bug categories (Syntax, Reference, Logic, and Multiple) and 18 minor types in C++, Java, and Python. The benchmark addresses several limitations of previous studies: the risk of data leakage, limited dataset scale, and insufficient bug diversity.

Methodology

- Dataset Construction:

  - Code snippets were sourced from the LeetCode community, and GPT-4 was used to implant bugs into them.

  - Data leakage was reduced by using only instances released after the pre-training cutoff dates of the evaluated models.

- Quality Assurance:

  - Both automated filtering and manual inspection were used to ensure the quality and integrity of the benchmark.

  - Instances were inspected against criteria such as bug validity, absence of sensitive information, and scenario alignment.

- Model Evaluation:

  - Two commercial (closed-source) and three open-source models were evaluated on DebugBench.

  - The assessment was conducted in a zero-shot setting to establish baseline debugging abilities.
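
The leakage-reduction step above can be illustrated with a minimal sketch. It assumes each source problem carries a release date and that a single cutoff date is known for the model under test; the `Problem` record, the `filter_post_cutoff` helper, and all dates below are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Problem:
    """A source problem. Field names are illustrative, not the paper's schema."""
    slug: str
    released: date


def filter_post_cutoff(problems, cutoff):
    """Keep only problems released strictly after the assumed training cutoff."""
    return [p for p in problems if p.released > cutoff]


# Made-up example data: neither the slugs nor the dates come from DebugBench.
problems = [
    Problem("old-problem", date(2021, 3, 1)),
    Problem("new-problem", date(2023, 1, 15)),
]
assumed_cutoff = date(2022, 6, 30)  # assumption for illustration only

fresh = filter_post_cutoff(problems, assumed_cutoff)
print([p.slug for p in fresh])  # ['new-problem']
```

A strict comparison is used so that a problem released on the cutoff date itself is treated as potentially seen during pre-training; this is a conservative choice made for the sketch.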

Empirical Findings

- Performance Comparison:

  - Closed-source models debugged better than open-source models but still fell short of human-level proficiency.

  - None of the evaluated open-source models produced effective debugging responses, indicating a substantial gap in debugging capability.

- Impact of Bug Types:

  - Bug types varied in difficulty: syntax and reference bugs were easier to fix than logic bugs or multiple simultaneous bugs.

- Runtime Feedback:

  - Closed-source models benefited from runtime feedback on syntax and reference errors but still struggled with logic bugs, which suggests that current runtime diagnostics are of limited use for such bugs.

- Correlation with Code Generation:

  - Debugging and code generation performance were correlated for closed-source models, suggesting that improvements in one may benefit the other.
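
To make the per-category comparison concrete, the following sketch shows one way a pass rate could be computed for each bug category. It assumes a proposed fix counts as successful only if it passes every test case; the helpers `passes_all` and `pass_rate_by_category`, the toy task, and the sample fixes are all hypothetical and do not reproduce the paper's evaluation harness or results.

```python
from collections import defaultdict


def passes_all(fix, test_cases):
    """Return True only if `fix` gives the expected output on every test case."""
    for args, expected in test_cases:
        try:
            if fix(*args) != expected:
                return False
        except Exception:
            # A crash (for example from an undefined name) counts as a failure.
            return False
    return True


def pass_rate_by_category(results):
    """Aggregate (category, passed) pairs into a pass rate per category."""
    totals = defaultdict(int)
    passed = defaultdict(int)
    for category, ok in results:
        totals[category] += 1
        passed[category] += int(ok)
    return {c: passed[c] / totals[c] for c in totals}


# Toy task: return the largest element of a non-empty list.
test_cases = [(([3, 1, 2],), 3), (([-5, -2],), -2)]


def good_fix(xs):
    return max(xs)


def logic_bug(xs):
    best = 0  # wrong initial value: fails on all-negative input
    for x in xs:
        if x > best:
            best = x
    return best


def reference_bug(xs):
    return max(ys)  # undefined name, raises NameError when called


results = [
    ("logic", passes_all(good_fix, test_cases)),
    ("logic", passes_all(logic_bug, test_cases)),
    ("reference", passes_all(reference_bug, test_cases)),
]
rates = pass_rate_by_category(results)
print(rates)  # {'logic': 0.5, 'reference': 0.0}
```

The toy also hints at why logic bugs are harder to catch than reference bugs: `reference_bug` fails loudly with an exception, while `logic_bug` runs cleanly and is exposed only by a test case that happens to exercise the faulty path.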

Implications and Future Directions

This paper paves the way for future research into LLM-based automated debugging. The findings suggest a need for models with debugging-specific training, possibly through tailored datasets that capture real-world debugging scenarios. Extending debugging benchmarks to larger, real-world codebases could also provide more challenging and informative assessments.

In practical terms, the research points to integrating LLMs into development environments to assist with automated debugging, reducing time and labor costs. Future work could also explore interactive debugging, or give LLMs access to the tooling of integrated development environments (IDEs), to further strengthen their debugging capabilities.

Overall, DebugBench is a solid step toward understanding and improving the debugging proficiency of LLMs, with relevance to both academic research and practical AI-assisted software development.