Enhancing Retrieval-Augmented Generation with Long-context LLMs: The LongRAG Framework

The paper "LongRAG: Enhancing Retrieval-Augmented Generation with Long-context LLMs" by Ziyan Jiang, Xueguang Ma, and Wenhu Chen proposes a framework for improving Retrieval-Augmented Generation (RAG). Its central idea is to rebalance the workload between the retriever and the reader, using long-context LLMs so that the reader can take on more of the work.

Introduction

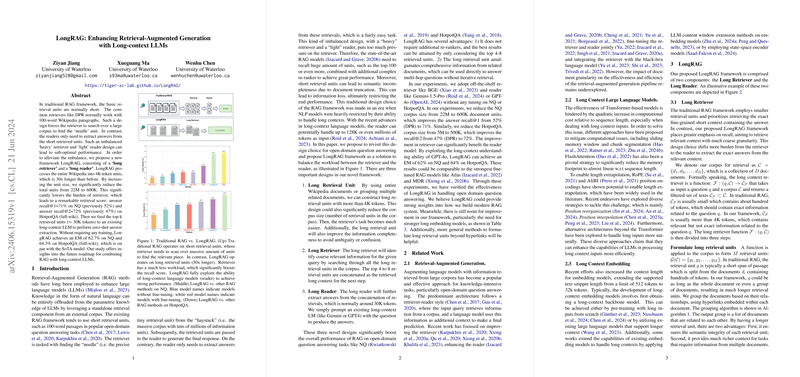

Traditional RAG architectures use short retrieval units, such as 100-word passages, so the retriever must search a very large corpus to find the one passage that contains the answer. This "heavy retriever, light reader" design often yields sub-optimal performance because most of the burden falls on the retriever. Long-context LLMs, which can process much larger inputs, offer an opportunity to revisit these design choices.

LongRAG Framework

The LongRAG framework comprises three primary components:

- Long Retrieval Unit: Instead of short passages, LongRAG uses entire Wikipedia documents, or groups of related documents, as retrieval units of roughly 4,000 tokens. This shrinks the corpus and keeps each unit semantically complete, avoiding the loss of context that comes from cutting documents into short chunks.

- Long Retriever: This component searches over the long retrieval units. Because each unit is large, it only needs to find coarse-grained relevant units rather than the exact passage. The top-k units are then concatenated into a single long context.

- Long Reader: An existing long-context LLM reads the concatenated units and extracts the answer, relying on its ability to process long inputs.
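The three components can be illustrated with a small sketch. It assumes documents are given as a `{title: text}` dictionary plus a separate `{title: [linked titles]}` map, approximates token counts by whitespace-separated words, and grows each unit breadth-first along hyperlinks until a 4,000-token budget is reached. The helper names (`group_documents`, `unit_text`, `build_reader_prompt`) and the prompt format are hypothetical; this is not the paper's exact grouping algorithm or reader prompt.

```python
from collections import deque


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: counts whitespace-separated words.
    return len(text.split())


def group_documents(docs, links, max_tokens=4000):
    """Greedily pack hyperlinked documents into long retrieval units.

    docs:  {title: text}
    links: {title: [titles it links to]}
    Every document ends up in exactly one group; a group grows breadth-first
    along hyperlinks, skipping any document that would exceed max_tokens.
    """
    assigned = set()
    groups = []
    for seed in docs:
        if seed in assigned:
            continue
        group, size = [], 0
        queue = deque([seed])
        while queue:
            title = queue.popleft()
            if title in assigned or title not in docs:
                continue
            n = count_tokens(docs[title])
            # The seed is always kept, even if it alone exceeds the budget.
            if group and size + n > max_tokens:
                continue
            group.append(title)
            assigned.add(title)
            size += n
            queue.extend(links.get(title, []))
        groups.append(group)
    return groups


def unit_text(group, docs):
    # One long retrieval unit = its documents concatenated with their titles.
    return "\n\n".join(f"Title: {t}\n{docs[t]}" for t in group)


def build_reader_prompt(question, ranked_units, k=4):
    # Concatenate the top-k long units into a single long context for the reader.
    context = "\n\n".join(ranked_units[:k])
    return f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
```

In use, one would call `group_documents` once over the corpus, turn each group into a unit with `unit_text`, rank the units with a retriever, and pass `build_reader_prompt(question, ranked_units)` to the long-context reader. Keeping the seed document even when it alone exceeds the budget means no document is dropped, at the cost of occasional oversized units.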

Experimental Results

Retrieval Performance

The LongRAG framework demonstrates substantial improvements in retrieval performance on two evaluation datasets: Natural Questions (NQ) and HotpotQA. Notable results include:

- For NQ, LongRAG reduces the corpus size from 22 million to 600,000 units, increasing the answer recall at top-1 from 52.24% to 71.69%.

- For HotpotQA, LongRAG enhances the top-2 answer recall from 47.75% to 72.49%, while reducing the corpus size from 5 million to 500,000 units.
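Answer recall at top-k measures how often at least one of the top-k retrieved units contains a correct answer. The sketch below assumes a unit counts as a hit when it contains a gold answer string under case-insensitive substring matching; the function name and matching rule are illustrative assumptions, not the paper's evaluation code.

```python
def answer_recall_at_k(retrieved, answers, k):
    """Fraction of questions with a gold answer inside one of the top-k units.

    retrieved: per question, a list of retrieved unit texts, best first.
    answers:   per question, a list of acceptable answer strings.
    A hit is a case-insensitive substring match within a single unit
    (a simplifying assumption; real evaluations usually normalise further).
    """
    hits = 0
    for units, golds in zip(retrieved, answers):
        top_k = [u.lower() for u in units[:k]]
        if any(g.lower() in u for u in top_k for g in golds):
            hits += 1
    return hits / len(retrieved)
```

For the same k, a unit of about 4,000 tokens covers far more text than a 100-word passage, so it is more likely to contain the answer. This is why recall at top-1 or top-2 can rise so sharply, with the trade-off that the reader must then handle a much longer context.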

The experiments also show that encoding long retrieval units directly with current long embedding models is less effective than approximating their relevance with general embedding models applied to shorter pieces of text. This points to the need for further research on robust long embedding models.
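One way to approximate a long unit's relevance with a general, short-input embedding model is to split the unit into chunks, score each chunk against the query, and keep the best chunk score. The sketch below assumes that max-over-chunks rule and replaces the embedding model with a toy bag-of-words vector; `embed`, `chunk`, `unit_score`, and `rank_units` are hypothetical helpers, and a real system would call a trained embedding model instead.

```python
import math
from collections import Counter


def embed(text):
    # Toy stand-in for a general embedding model: L2-normalised bag of words.
    counts = Counter(text.lower().split())
    norm = math.sqrt(sum(c * c for c in counts.values())) or 1.0
    return {w: c / norm for w, c in counts.items()}


def cosine(u, v):
    # Both vectors are unit-length, so their dot product is the cosine.
    return sum(weight * v.get(w, 0.0) for w, weight in u.items())


def chunk(text, size=100):
    # Split a long unit into consecutive chunks of at most `size` words.
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)] or [""]


def unit_score(query, unit, size=100):
    # Approximate the unit's relevance by its best-matching chunk.
    q = embed(query)
    return max(cosine(q, embed(c)) for c in chunk(unit, size))


def rank_units(query, units, k=2, size=100):
    # Return the top-k units by approximated relevance, best first.
    return sorted(units, key=lambda u: unit_score(query, u, size), reverse=True)[:k]
```

The example shows why a chunked approximation can beat encoding the whole unit at once: for the query "capital of france" and a unit made of 200 filler words followed by "paris is the capital of france", `unit_score` is about 0.71 with 100-word chunks, but under 0.01 when the entire unit is encoded as a single vector, because the filler dilutes the relevant sentence.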

QA Performance

LongRAG achieves competitive results in end-to-end question answering tasks without any training. Key findings include:

- On the NQ dataset, LongRAG achieves an exact match (EM) score of 62.7% using GPT-4o as the reader, comparable to the strongest fine-tuned RAG models like Atlas.

- On HotpotQA, LongRAG achieves a 64.3% EM score, demonstrating its efficacy in multi-hop question answering tasks.
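Exact match (EM) is usually computed after light string normalization. The sketch below uses the widely used SQuAD-style normalization (lowercasing, stripping punctuation and the articles "a", "an", "the", and collapsing whitespace); whether LongRAG's evaluation normalizes answers in exactly this way is an assumption here.

```python
import re
import string

_PUNCT = set(string.punctuation)


def normalize_answer(s: str) -> str:
    # SQuAD-style: lowercase, drop punctuation, drop articles, squeeze spaces.
    s = s.lower()
    s = "".join(ch for ch in s if ch not in _PUNCT)
    s = re.sub(r"\b(a|an|the)\b", " ", s)
    return " ".join(s.split())


def exact_match(prediction: str, gold_answers) -> float:
    # 1.0 if the prediction matches any acceptable answer after normalization.
    pred = normalize_answer(prediction)
    return float(any(pred == normalize_answer(g) for g in gold_answers))


def em_score(predictions, gold_lists) -> float:
    # Dataset-level EM as a percentage, the form used for scores like 62.7%.
    total = sum(exact_match(p, g) for p, g in zip(predictions, gold_lists))
    return 100.0 * total / len(predictions)
```

EM is strict: a correct but longer answer such as "Paris, France" scores 0 against the gold answer "Paris", so the reader's output format matters as much as its reasoning.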

Implications and Future Directions

The LongRAG framework has both practical and theoretical implications:

- Practical Implications: Shrinking the corpus (for example, from 22 million to 600,000 units on NQ) while also improving retrieval streamlines the RAG pipeline: there are far fewer units to index and search, which can make the system more efficient and potentially more scalable.

- Theoretical Implications: The approach highlights the importance of balancing the roles of the retriever and reader in RAG systems. It underscores the potential for long-context LLMs to handle more extensive and semantically complete contexts, paving the way for further innovations in information retrieval and generation tasks.

Future work may focus on improving long embedding models and on more general ways of forming long retrieval units beyond hyperlink-based grouping.

Conclusion

The LongRAG framework represents an advancement in the design of RAG systems, effectively leveraging the capabilities of long-context LLMs to enhance both retrieval and generation performance in open-domain question answering tasks. The proposed approach demonstrates that a balanced distribution of workload between the retriever and reader components can yield substantial improvements, providing valuable insights for the design of future RAG systems.