An Overview of Diffusion Gated Linear Attention Transformers (DiG)

The paper addresses visual content generation with diffusion models. It introduces Diffusion Gated Linear Attention Transformers (DiG), an architecture designed to overcome the scalability and efficiency limitations of conventional Diffusion Transformers (DiT).

Core Contributions

The principal objective of the research is to improve the scalability and computational efficiency of diffusion models by bringing the long-sequence modeling capabilities of Gated Linear Attention (GLA) Transformers into the diffusion framework. The resulting DiG model is positioned as a more efficient alternative to the standard DiT, with faster processing and lower resource consumption.

Key contributions of this work include:

- Introduction of DiG Model: DiG builds on GLA Transformers to address the quadratic cost, in the number of tokens, of the self-attention used by conventional Diffusion Transformers.

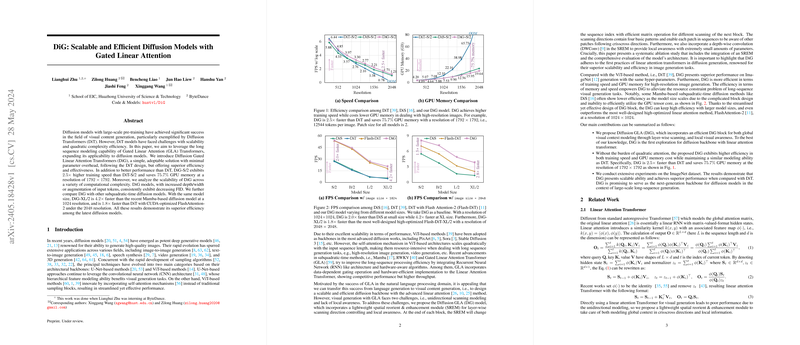

- Efficiency Gains: DiG-S/2 trains faster than DiT-S/2 and uses less GPU memory on high-resolution images.

- Scalability Analysis: The paper methodically analyzes the scalability of DiG across various computational complexities, demonstrating consistent performance improvements (decreasing FID) with increased model depth/width and input tokens.

- Comparative Efficiency: DiG-XL/2 is faster than the Mamba-based diffusion model at $1024$ resolution and faster than a DiT that uses CUDA-optimized FlashAttention-2 at $2048$ resolution.
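To make the resolution figures above concrete, the following sketch estimates how the token count and the attention cost grow with image size. It assumes the latent-diffusion setup commonly used with DiT (an autoencoder that downsamples each side by 8, with the "/2" suffix read as a patch size of 2). Those values, the hidden size of 1152, and the function names are illustrative assumptions, and the costs are rough single-head operation counts rather than measured FLOPs or timings.

```python
def num_tokens(resolution: int, vae_downsample: int = 8, patch_size: int = 2) -> int:
    """Tokens for a square image, assuming a VAE latent that is then patchified."""
    side = resolution // (vae_downsample * patch_size)
    return side * side


def softmax_attention_cost(n_tokens: int, dim: int) -> int:
    """Rough multiply-add count for QK^T plus the weighted sum over V: O(N^2 * d)."""
    return 2 * n_tokens * n_tokens * dim


def linear_attention_cost(n_tokens: int, dim: int) -> int:
    """Rough multiply-add count for a d x d state update and read-out per token: O(N * d^2)."""
    return 2 * n_tokens * dim * dim


if __name__ == "__main__":
    dim = 1152  # assumed hidden size, for illustration only
    for res in (256, 512, 1024, 2048):
        n = num_tokens(res)
        ratio = softmax_attention_cost(n, dim) / linear_attention_cost(n, dim)
        print(f"{res:>4}px: {n:>6} tokens, quadratic/linear cost ratio ~ {ratio:.2f}")
```

Under these assumptions, doubling the resolution quadruples the token count, which multiplies the quadratic term by 16 but the linear term by only 4. This is why the gap between the two families is expected to widen at higher resolutions.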

Methodological Advances

The methodology brings the linear complexity of GLA Transformers into the diffusion setting while leaving the overall design of DiT largely unchanged. The modification adds minimal parameter overhead yet improves both performance and computational efficiency. Because the architectural changes are small, DiG remains easy to adopt, particularly for applications that require high-resolution image synthesis.
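The linear-complexity claim can be illustrated with the recurrent form of gated linear attention. The sketch below is a minimal single-head NumPy version of the general GLA recurrence, not the paper's implementation: it omits anything specific to DiG, and the function names and tensor shapes are assumptions chosen for illustration. A fixed-size state matrix is decayed by a per-token gate and updated with one key-value outer product per token, so the work grows linearly with the number of tokens.

```python
import numpy as np


def gated_linear_attention(q, k, v, gate):
    """Recurrent form: S_t = diag(gate_t) @ S_{t-1} + outer(k_t, v_t); o_t = q_t @ S_t.

    q, k, gate: arrays of shape (N, d_k); v: array of shape (N, d_v).
    Gate entries are expected to lie in [0, 1].
    """
    n_tokens, d_k = q.shape
    d_v = v.shape[1]
    state = np.zeros((d_k, d_v))          # fixed-size memory, independent of N
    out = np.empty((n_tokens, d_v))
    for t in range(n_tokens):             # one pass over the sequence: O(N * d_k * d_v)
        state = gate[t][:, None] * state + np.outer(k[t], v[t])
        out[t] = q[t] @ state
    return out


def causal_quadratic_reference(q, k, v):
    """Ungated causal attention without softmax, computed the O(N^2) way."""
    scores = np.tril(q @ k.T)             # keep only pairs with s <= t
    return scores @ v


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d_k, d_v = 16, 4, 8
    q, k = rng.normal(size=(n, d_k)), rng.normal(size=(n, d_k))
    v = rng.normal(size=(n, d_v))
    ones = np.ones((n, d_k))
    # With all gates equal to 1, the recurrence reduces to plain causal linear attention.
    print(np.allclose(gated_linear_attention(q, k, v, ones),
                      causal_quadratic_reference(q, k, v)))
```

The Python loop is written for clarity only. Practical implementations typically process the sequence in chunks on the GPU, but the fixed-size state is the property that removes the quadratic dependence on sequence length.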

Experimental Validation

Extensive experimentation validates the performance claims of the proposed model. Key experimental results include:

- DiG-S/2 not only improved training speeds but also significantly reduced GPU memory consumption compared to baseline models.

- The scalability tests confirmed that increasing the model's depth or width, alongside augmenting input tokens, consistently yielded better performance metrics, specifically lower FID scores.

- Comparative tests positioned DiG as markedly more efficient than contemporary subquadratic-time diffusion models, solidifying its practical utility in high-resolution visual content generation tasks.
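Since the results above are reported as FID, a short sketch of the underlying Fréchet distance may help in reading them: lower values mean that the feature statistics of generated images are closer to those of real images. The code below is a NumPy-only illustration with hypothetical function names. In standard practice the features come from a pretrained Inception network, whereas here random arrays stand in for them, so the printed number has no relation to the paper's scores.

```python
import numpy as np


def frechet_distance(mu1, sigma1, mu2, sigma2):
    """||mu1 - mu2||^2 + Tr(sigma1 + sigma2 - 2 * (sigma1 @ sigma2)^(1/2))."""
    diff = mu1 - mu2
    # The trace of the matrix square root equals the sum of the square roots of the
    # eigenvalues of sigma1 @ sigma2, which are real and non-negative for PSD covariances.
    eigvals = np.linalg.eigvals(sigma1 @ sigma2)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()
    return float(diff @ diff + np.trace(sigma1) + np.trace(sigma2) - 2.0 * trace_sqrt)


def feature_statistics(features):
    """Mean and covariance of an (n_samples, n_features) array."""
    return features.mean(axis=0), np.cov(features, rowvar=False)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(2000, 8))                 # stand-in for real-image features
    generated = rng.normal(loc=0.1, size=(2000, 8))   # stand-in for generated-image features
    print(frechet_distance(*feature_statistics(real), *feature_statistics(generated)))
```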

Practical and Theoretical Implications

From a practical perspective, the development of DiG holds substantial implications for large-scale visual content generation. The reduced computational overhead makes it feasible to generate higher quality visuals without proportional resource scaling, potentially democratizing high-resolution visual content creation across diverse application domains.

Theoretically, the integration of GLA within diffusion models opens new avenues for exploring other low-complexity attention mechanisms within advanced machine learning frameworks. This direction fosters an ongoing exploration into combining different architectural efficiencies without compromising model effectiveness.

Future Directions

Looking ahead, further enhancements to DiG could involve:

- Incorporating additional optimization techniques specific to GLA mechanisms.

- Exploring hybrid architectures that combine the strengths of DiG with other emerging efficient transformer models.

- Investigating the application of DiG in broader domains beyond visual content generation, such as natural language processing or complex pattern recognition tasks.

This paper lays a foundation for the future development of efficient, scalable diffusion models, enhancing the computational feasibility and broadening the accessibility of high-quality visual content generation.