Exploring the Feasibility of Using LLMs for Filling Relevance Judgment Gaps in Conversational Search

Introduction to the Research Study

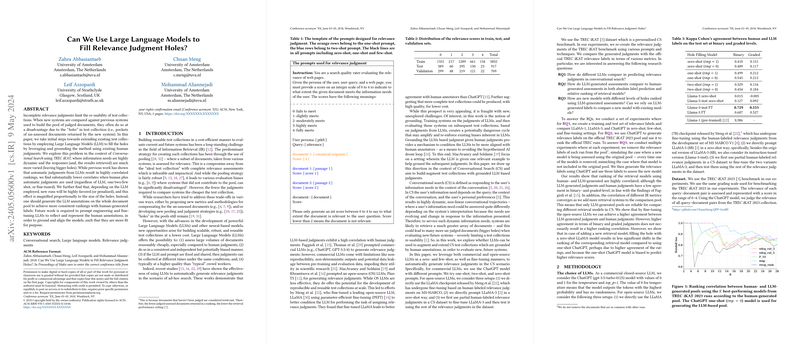

The research paper explores a critical issue in information retrieval (IR) evaluation: "holes," that is, unassessed documents that hinder accurate system evaluation. Holes arise when a new system retrieves documents that were not judged in the original test collection, which was built around older systems. The authors investigate whether LLMs such as ChatGPT and LLaMA can effectively supply these missing relevance judgments.
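To make the notion of a hole concrete, here is a minimal sketch of how unjudged documents in a new run could be located. The data layout (`Qrels`, `Run`), the function names, and the cutoff `k` are assumptions for illustration, not anything taken from the paper.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical in-memory formats (assumptions, not the paper's):
Qrels = Dict[str, Dict[str, int]]  # query_id -> {doc_id: relevance grade}
Run = Dict[str, List[str]]         # query_id -> ranked list of doc_ids


def find_holes(qrels: Qrels, run: Run, k: int = 10) -> Set[Tuple[str, str]]:
    """Return (query_id, doc_id) pairs in the top-k of a run that have no judgment."""
    holes = set()
    for qid, ranking in run.items():
        judged = qrels.get(qid, {})
        for doc_id in ranking[:k]:
            if doc_id not in judged:
                holes.add((qid, doc_id))
    return holes


def judged_at_k(qrels: Qrels, run: Run, k: int = 10) -> float:
    """Fraction of retrieved top-k documents that carry a judgment."""
    total = 0
    judged_count = 0
    for qid, ranking in run.items():
        top = ranking[:k]
        total += len(top)
        judged_count += sum(1 for d in top if d in qrels.get(qid, {}))
    return judged_count / total if total else 0.0


# Toy data: judgments collected for older systems, plus a new run.
qrels = {"q1": {"d1": 2, "d2": 0}, "q2": {"d5": 1}}
new_run = {"q1": ["d1", "d3", "d2"], "q2": ["d6", "d5"]}

holes = find_holes(qrels, new_run, k=3)
coverage = judged_at_k(qrels, new_run, k=3)
```

In this toy case the new run surfaces two documents, `d3` and `d6`, that were never assessed. These are the pairs an LLM would be asked to judge.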

Key Findings from the Study

- Correlation of LLMs with Human Judgments: The paper found that relevance judgments generated by LLMs were moderately correlated with human judgments. However, the correlation dropped when human and LLM-generated judgments were used together, which points to a difference in the criteria the two apply.

- Impact of Holes on Model Evaluation: The researchers noted that the presence and size of holes significantly affected the comparative evaluation of new runs. The larger the holes, the more biased the assessment became, disproportionately favoring or penalizing new systems.

- Consistency Across Assessments: For consistent system ranking, the paper suggests that LLM annotations should ideally cover the entire document pool, not just the unjudged documents. This approach reduces inconsistencies introduced by merging human and LLM judgments.
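The findings above can be illustrated with a toy comparison of system rankings under different judgment sets. This is only a sketch under stated assumptions: precision at k as the metric, Kendall's tau-a as the measure of ranking agreement, and all function names and data are hypothetical choices rather than the paper's actual setup.

```python
from itertools import combinations
from typing import Dict, List

Qrels = Dict[str, Dict[str, int]]  # query_id -> {doc_id: relevance grade}
Run = Dict[str, List[str]]         # query_id -> ranked list of doc_ids


def fill_holes(human: Qrels, llm: Qrels) -> Qrels:
    """Keep human labels where they exist; use LLM labels only for unjudged documents."""
    merged = {qid: dict(docs) for qid, docs in human.items()}
    for qid, docs in llm.items():
        for doc_id, grade in docs.items():
            merged.setdefault(qid, {}).setdefault(doc_id, grade)
    return merged


def precision_at_k(qrels: Qrels, run: Run, k: int) -> float:
    """Mean P@k over queries; unjudged documents count as non-relevant."""
    scores = []
    for qid, ranking in run.items():
        judged = qrels.get(qid, {})
        hits = sum(1 for d in ranking[:k] if judged.get(d, 0) > 0)
        scores.append(hits / k)
    return sum(scores) / len(scores) if scores else 0.0


def kendall_tau(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Kendall's tau-a between two score tables over the same systems."""
    pairs = list(combinations(sorted(a), 2))
    concordant = discordant = 0
    for s, t in pairs:
        sign = (a[s] - a[t]) * (b[s] - b[t])
        if sign > 0:
            concordant += 1
        elif sign < 0:
            discordant += 1
    return (concordant - discordant) / len(pairs) if pairs else 0.0


# Toy data: human labels cover only the old system's documents,
# while the (hypothetical) LLM has labeled the entire pool.
human = {"q1": {"d1": 1, "d2": 0}}
llm = {"q1": {"d1": 0, "d2": 0, "d3": 1, "d4": 0}}
runs = {
    "old": {"q1": ["d1", "d2"]},
    "new": {"q1": ["d3", "d1"]},
    "other": {"q1": ["d4", "d2"]},
}

merged = fill_holes(human, llm)
scores_human = {name: precision_at_k(human, run, k=2) for name, run in runs.items()}
scores_merged = {name: precision_at_k(merged, run, k=2) for name, run in runs.items()}
scores_llm = {name: precision_at_k(llm, run, k=2) for name, run in runs.items()}

tau_human_vs_merged = kendall_tau(scores_human, scores_merged)
```

With human labels alone, `new` ties with `old` because its top document is a hole. Filling the hole lifts `new` above `old`, and scoring with LLM labels alone shifts the scores again because the LLM disagrees with the human label for `d1`. The merged set mixes two sets of criteria, which is the inconsistency the third finding describes.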

Implications for Information Retrieval

Practical Implications

- Cost Efficiency: Using LLMs for relevance judgments can cost significantly less than human assessment, since LLMs can process large volumes of documents quickly.

- Scalability: With LLMs, extending existing test collections to include new documents becomes more feasible, potentially increasing the longevity and relevance of these collections.

Theoretical Implications

- Model Reliability: The variance in LLM performance underscores the need for more robust models that can align closely with human judgment criteria.

- Bias and Fairness: The paper spotlights potential biases in LLM judgments, prompting further exploration into making these models fair and representative of diverse information needs.

Future Directions in AI and LLM Utilization

The paper suggests several avenues for future research:

- Prompt Engineering: Improving how LLMs are queried so that the relevance judgments they generate better mimic human assessments.

- Model Fine-Tuning: Customizing LLMs to specific IR tasks might yield judgments that are more in sync with human standards.

- Comprehensive Evaluation Strategies: Developing methodologies to systematically evaluate and integrate LLM judgments in IR test collections, ensuring reliability across different system evaluations.
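One simple building block for such an evaluation strategy is to measure how well LLM labels agree with human labels on documents that already have both, before trusting the LLM on the holes. The sketch below uses Cohen's kappa as the agreement statistic. That choice, the function name, and the toy labels are assumptions for illustration, not the paper's reported method or data.

```python
from collections import Counter
from typing import List


def cohens_kappa(human: List[int], llm: List[int]) -> float:
    """Chance-corrected agreement between two label sequences of equal length."""
    n = len(human)
    if n == 0 or n != len(llm):
        raise ValueError("label lists must be non-empty and of equal length")
    # Observed agreement: fraction of items given the same label.
    observed = sum(h == m for h, m in zip(human, llm)) / n
    # Expected agreement if both labeled independently at their own label rates.
    h_counts = Counter(human)
    l_counts = Counter(llm)
    expected = sum(h_counts[c] * l_counts[c] for c in h_counts) / (n * n)
    if expected == 1.0:
        return 1.0  # both sequences use a single identical label
    return (observed - expected) / (1 - expected)


# Toy binary labels for eight documents judged by both a human and an LLM.
human_labels = [1, 1, 0, 0, 1, 0, 0, 0]
llm_labels = [1, 0, 0, 0, 1, 1, 0, 0]

kappa = cohens_kappa(human_labels, llm_labels)
```

Here the two label sets agree on six of eight documents, but after correcting for chance the agreement is only moderate. A check like this could serve as a gate before LLM judgments are merged into a test collection.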

Concluding Thoughts

This paper offers a valuable look at using LLMs to address the persistent problem of incomplete relevance judgments in IR test collections. While the results affirm the potential utility of LLMs in this context, they also raise critical concerns about consistency and bias. Making LLM-generated judgments reliable and fair remains an essential goal for future research in this area.