An Evaluation of NegativePrompt: Enhancing LLMs Through Negative Emotional Stimuli

The paper "NegativePrompt: Leveraging Psychology for LLMs Enhancement via Negative Emotional Stimuli" explores whether psychological principles can improve LLM performance through negative emotional stimuli. The authors propose a prompt engineering approach, NegativePrompt, that integrates these stimuli into prompts, and they assess whether doing so enhances LLM performance across diverse tasks.

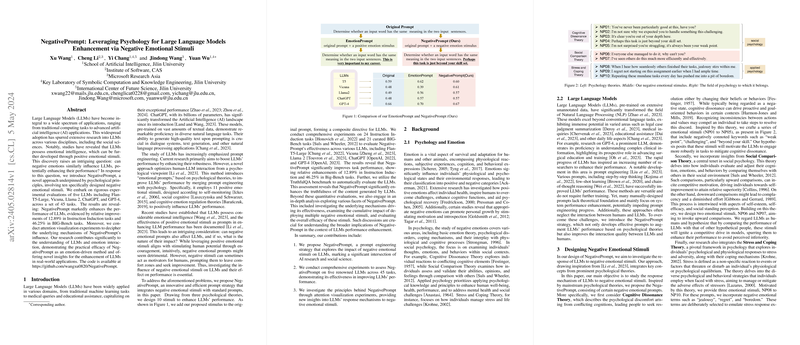

The paper opens with background on the performance and range of applications of LLMs, emphasizing ongoing research on refining human-LLM interaction. Its central question is whether LLMs, which have demonstrated responsiveness to positive emotional stimuli, might similarly benefit from negative emotional inputs. To this end, the authors design ten negative emotional stimuli grounded in psychological theories including Cognitive Dissonance Theory, Social Comparison Theory, and Stress and Coping Theory.
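The basic idea of attaching a stimulus to a task prompt can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the stimulus strings are hypothetical placeholders (the paper's actual wording is not reproduced here), and appending the stimulus after the instruction is an assumption about placement.

```python
# Hypothetical placeholder stimuli, keyed by the theory they are grounded in.
# These are NOT the ten stimuli from the paper.
PLACEHOLDER_STIMULI = {
    "cognitive_dissonance": "<stimulus grounded in Cognitive Dissonance Theory>",
    "social_comparison": "<stimulus grounded in Social Comparison Theory>",
    "stress_and_coping": "<stimulus grounded in Stress and Coping Theory>",
}


def build_negative_prompt(instruction: str, stimulus: str) -> str:
    """Attach a negative emotional stimulus to a task instruction.

    Assumption: the stimulus is appended after the original instruction.
    """
    return f"{instruction.strip()} {stimulus.strip()}"


if __name__ == "__main__":
    prompt = build_negative_prompt(
        "Determine whether the movie review is positive or negative.",
        PLACEHOLDER_STIMULI["social_comparison"],
    )
    print(prompt)
```

Keeping the stimuli in a dictionary keyed by theory makes it easy to compare individual stimuli against a baseline prompt that has no stimulus attached.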

Extensive experimentation is conducted across five prominent LLMs: Flan-T5-Large, Vicuna, Llama 2, ChatGPT, and GPT-4, evaluated over 45 tasks encompassing Instruction Induction and BIG-Bench challenges. NegativePrompt demonstrates a notable performance enhancement, with relative improvements of 12.89% and 46.25% in Instruction Induction and BIG-Bench tasks, respectively. Particularly in few-shot learning contexts, NegativePrompt exhibits considerable adaptability and efficiency in facilitating LLMs' contextual understanding and generalization capabilities.
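For readers unfamiliar with the term, a relative improvement is the gain expressed as a fraction of the baseline score rather than as an absolute difference. The sketch below shows the usual formula; the scores are made up for illustration, and the exact way the paper aggregates across tasks and models is not specified here, so it may differ.

```python
def relative_improvement(baseline: float, enhanced: float) -> float:
    """Return the change from baseline to enhanced as a percentage of baseline."""
    if baseline == 0:
        raise ValueError("baseline score must be non-zero")
    return (enhanced - baseline) / baseline * 100.0


if __name__ == "__main__":
    # Made-up scores for illustration only; these are not results from the paper.
    baseline_score = 0.40
    negative_prompt_score = 0.45
    gain = relative_improvement(baseline_score, negative_prompt_score)
    print(f"relative improvement: {gain:.2f}%")
```

With these illustrative numbers, an absolute gain of 5 points on a baseline of 40 corresponds to a relative improvement of 12.5%, which is why relative figures can look large when baseline scores are low.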

Crucially, the authors employ attention visualization to examine the mechanisms by which NegativePrompt operates. These visualizations suggest that negative emotional stimuli strengthen the model's focus on the core elements of the original prompt, which in turn improves task execution. This mirrors human learning strategies, in which negative stimuli can heighten cognitive engagement with, and adaptation to, the given task instructions.

The investigation extends to the TruthfulQA benchmark, where NegativePrompt contributes to improvements in LLM output authenticity, advancing both the truthfulness and informativeness of model-generated responses. This suggests that negative stimuli induce LLMs to process questions with increased scrutiny, subsequently refining judgment accuracy and response detail.

The authors also examine the effects of stacking multiple negative stimuli and compare the efficacy of individual stimuli. While doubling up stimuli from the same theoretical base shows limited gains, combinations drawn from different theories yield varied results, which highlights the need for strategic stimulus selection.
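One way to organize such a stacking study is to enumerate pairs of stimuli and separate same-theory pairs from cross-theory pairs. The following is a sketch under stated assumptions: the stimulus strings and the number of stimuli per theory are hypothetical, and concatenating stimuli after the instruction is an assumed stacking scheme, not necessarily the one used in the paper.

```python
from itertools import combinations

# Hypothetical placeholders; counts per theory are illustrative only.
STIMULI_BY_THEORY = {
    "cognitive_dissonance": ["<CD stimulus 1>", "<CD stimulus 2>"],
    "social_comparison": ["<SC stimulus 1>"],
    "stress_and_coping": ["<SaC stimulus 1>"],
}


def stacked_pairs(stimuli_by_theory):
    """Split all unordered stimulus pairs into same-theory and cross-theory lists."""
    tagged = [
        (theory, stimulus)
        for theory, stimuli in stimuli_by_theory.items()
        for stimulus in stimuli
    ]
    same_theory, cross_theory = [], []
    for (theory_a, stim_a), (theory_b, stim_b) in combinations(tagged, 2):
        target = same_theory if theory_a == theory_b else cross_theory
        target.append((stim_a, stim_b))
    return same_theory, cross_theory


def stack(instruction: str, stimuli) -> str:
    """Assumed stacking scheme: append each stimulus in order after the instruction."""
    return " ".join([instruction.strip(), *stimuli])


if __name__ == "__main__":
    same, cross = stacked_pairs(STIMULI_BY_THEORY)
    for pair in same + cross:
        print(stack("Sort the words alphabetically.", pair))
```

Separating the two groups up front makes it straightforward to report their results side by side, which is the comparison the stacking analysis draws.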

Compared with EmotionPrompt, which leverages positive emotional stimuli, NegativePrompt shows a larger improvement margin on the Instruction Induction tasks, whereas EmotionPrompt's gains are concentrated on the more complex BIG-Bench tasks.

This research suggests potential pathways for future AI model enhancements, advocating for a nuanced understanding of emotional stimulus integration in LLM performance optimization. NegativePrompt exemplifies the convergence of cognitive science insights and machine learning advancements, offering valuable methodologies for further explorations in model behavior refinement.

In conclusion, the authors make a significant contribution to the literature on emotion-LLM interaction, opening the door to more sophisticated, psychology-aligned methodologies in AI research and application development. The implications are broad: the findings point toward models that harness nuanced emotional stimuli to achieve more sophisticated, context-aware machine intelligence.