Hierarchical Speculative Decoding System for Efficient Long-Sequence Inference in LLMs

Introduction

LLMs excel in diverse applications but struggle with efficiency issues during long-sequence generation. A key challenge is the management of the Key-Value (KV) cache, which stores intermediary states to prevent redundant computations but escalates in size with sequence length, causing bottlenecks. Recent solutions involve speculative decoding, where lightweight models predict future tokens which are then validated by the target model. However, these solutions still suffer from various performance drawbacks when scaled to longer sequences.

Dual Bottleneck Observations and Core Insights

The paper identifies two primary memory bottlenecks: model weights and the KV cache. Key insights from the paper indicate:

- Attention Sparsity: A large portion of the KV cache is redundant, where only a small subset is actively used, suggesting the feasibility of using partial caches for prediction without significant performance loss.

- Contextual Locality: Adjacent tokens often require similar contextual information, suggesting potential efficiency in reusing cache segments across multiple tokens, thereby reducing computational overhead.

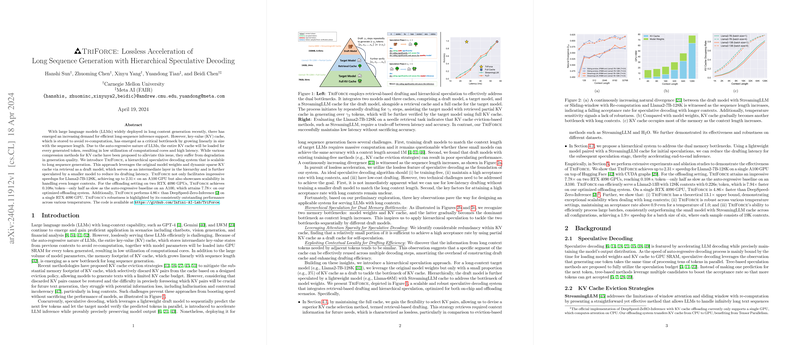

These insights lead to the design of TriForce, a hierarchical speculative decoding system that strategically uses partial caching and model weight reduction techniques to address these bottlenecks.

TriForce System Overview

TriForce integrates retrieval-based drafting with hierarchical speculative decoding to address KV cache and model weights bottlenecks effectively:

- Retrieval-Based Drafting: Instead of permanently discarding KV pairs like traditional eviction methods, TriForce uses a dynamic retrieval strategy that selectively retains the most crucial KV pairs, enhancing both the efficiency and quality of the inference process.

- Hierarchical Speculation: TriForce utilizes a smaller, lightweight model with a partial cache as an initial speculative layer, followed by verifying and refining predictions using the target model with a more complete but selectively reduced cache. This staged speculation reduces overall latency by addressing both identified bottlenecks sequentially.

Empirical Evaluation

TriForce was tested on NVIDIA A100 and RTX 4090 GPUs with models like Llama2-7B-128K:

- Speed Improvement: Achieved up to 2.31x speed-up on a single A100 GPU and up to 7.78x speed-up using dual RTX 4090 GPUs in offloading settings.

- Robustness and Scalability: Demonstrated high acceptance rates and consistent performance across various temperatures and settings. TriForce shows promising scalability, with theoretical projections suggesting further speed optimizations under extended context simulations.

Observations on KV Cache Handling

In-depth analysis of KV cache management shows:

- Optimal Cache Budget: TriForce achieves optimal performance with a 4K KV cache budget, effectively balancing the trade-off between drafting overhead and acceptance rate.

- Chunk Size Selection: Analysis reveals that smaller chunk sizes might overfit specific tokens, while excessively large sizes could dilute the significance of valuable tokens, indicating the importance of balanced chunk sizing in retrieval-based strategies.

Future Directions and Theoretical Implications

TriForce's architecture suggests significant potential for extending LLM applicability in real-world scenarios requiring long-context generation, such as document summarization and extended conversational agents. Additionally, the integration with tree-based speculative models could further enhance throughput and efficiency, showing a promising direction for future research in AI language processing optimizations.

Conclusion

This paper presents a compelling approach to solving the efficiency problems associated with LLMs in processing long sequences. By leveraging hierarchical speculative decoding and tactical KV cache management, TriForce not only improves inference speed but also maintains high generational quality, proving to be a substantial advancement in the practical deployment of large-scale LLMs.