Enhancing LLMs with Structured Memory Modules: Introducing MAuLLM

Introduction to MAuLLM

A recent publication introduces the Memory-Augmented Universal LLM (MAuLLM), an architecture designed to address limitations of current LLMs in memory utilization and knowledge management. MAuLLM adds a structured, explicit read-and-write memory module intended to improve both the performance and the interpretability of LLMs, especially on knowledge-intensive tasks.

Limitations of Existing Approaches

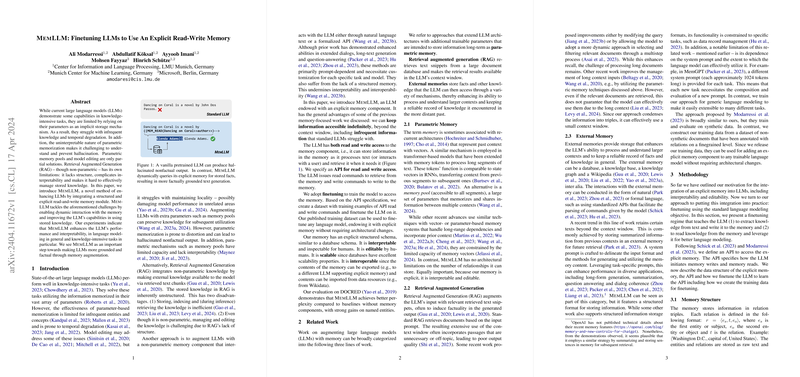

Current LLMs rely heavily on parametric memory, that is, knowledge stored in the model weights. This leads to temporal degradation, difficulty with infrequent knowledge, and a tendency to generate hallucinated content. Retrieval Augmented Generation (RAG) provides a non-parametric alternative, but it stores knowledge in unstructured form and retrieves it inefficiently during inference. Other methods that add non-parametric external memory face similar problems: the stored knowledge lacks structure, and interacting with it is inefficient.

MAuLLM Architecture and Capabilities

MAuLLM addresses these issues by integrating a structured and explicitly accessible memory module into the LLM framework, allowing the model to dynamically interact with stored knowledge. The memory component is designed like a database, maintaining a schema that is both interpretable and editable, thus providing a more organized and scalable knowledge storage solution.

- Read and Write Operations: MAuLLM can read from and write to the memory while it processes text or interacts with a user, so knowledge persists beyond the immediate context.

- Memory Structure: Information is stored in the memory in the form of relation triples, which enhances the model's ability to retrieve and utilize stored knowledge efficiently.

- API for Memory Interaction: A defined API lets MAuLLM issue memory operations systematically, so that memory reads and writes fit into the LLM's normal text-processing flow.
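To make the idea of a triple-based read-and-write memory concrete, here is a minimal sketch in Python. It is not the API defined in the publication: the class `TripleMemory`, its methods `write`, `read`, and `delete`, and the subject index are all hypothetical names and design choices introduced for illustration. The sketch only assumes what the text states, namely that facts are stored as relation triples and that the memory can be read, written, inspected, and edited.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Optional, Set


class Triple(NamedTuple):
    """One stored fact, e.g. ("Marie Curie", "born in", "Warsaw")."""
    subject: str
    relation: str
    obj: str


class TripleMemory:
    """Hypothetical triple store; not the API defined in the publication."""

    def __init__(self) -> None:
        self._triples: Set[Triple] = set()
        # Index by subject so subject-bound reads skip a full scan.
        self._by_subject: Dict[str, Set[Triple]] = defaultdict(set)

    def write(self, subject: str, relation: str, obj: str) -> None:
        """Store a triple; re-writing an existing triple changes nothing."""
        triple = Triple(subject, relation, obj)
        self._triples.add(triple)
        self._by_subject[subject].add(triple)

    def read(self, subject: Optional[str] = None,
             relation: Optional[str] = None,
             obj: Optional[str] = None) -> List[Triple]:
        """Return matching triples in sorted order; None is a wildcard."""
        if subject is not None:
            candidates = self._by_subject.get(subject, set())
        else:
            candidates = self._triples
        return sorted(
            t for t in candidates
            if (relation is None or t.relation == relation)
            and (obj is None or t.obj == obj)
        )

    def delete(self, subject: str, relation: str, obj: str) -> bool:
        """Remove a triple; return True if it was present."""
        triple = Triple(subject, relation, obj)
        if triple not in self._triples:
            return False
        self._triples.remove(triple)
        self._by_subject[subject].discard(triple)
        return True


# Illustrative usage with made-up session data.
memory = TripleMemory()
memory.write("Marie Curie", "born in", "Warsaw")
memory.write("Marie Curie", "field of work", "physics")
born = memory.read(subject="Marie Curie", relation="born in")
print(born)
```

Because every entry is an explicit triple, the whole memory can be listed with `memory.read()` and corrected with `delete` followed by `write`, which is the kind of inspection and editing the section describes. How the model itself decides when to issue such calls during generation is outside the scope of this sketch.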

Experimental Setup and Evaluation

MAuLLM was evaluated on the DocRED dataset, which consists of documents annotated with relational data. The model is fine-tuned on examples that teach the LLM to interact with the memory module. The primary metric was perplexity, reported in three variants: overall perplexity, target perplexity (computed on target entities), and entity perplexity (computed on all entities).
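The three perplexity variants can be illustrated with a short Python sketch. The exact computation used in the publication is not given here, so this assumes the standard definition of perplexity (the exponential of the mean negative log-likelihood per token) restricted to a subset of tokens. The function name `perplexity`, the boolean masks, and the toy probabilities are all hypothetical.

```python
import math
from typing import Sequence


def perplexity(log_probs: Sequence[float], mask: Sequence[bool]) -> float:
    """Perplexity over the tokens selected by mask.

    Computed as exp of the mean negative log-likelihood of those tokens.
    """
    selected = [lp for lp, keep in zip(log_probs, mask) if keep]
    if not selected:
        raise ValueError("mask selects no tokens")
    return math.exp(-sum(selected) / len(selected))


# Toy per-token probabilities assigned by a model (made-up values).
probs = [0.5, 0.25, 0.5, 0.125]
log_probs = [math.log(p) for p in probs]

all_tokens = [True, True, True, True]
entity_tokens = [False, True, False, True]   # assumed: tokens inside any entity
target_tokens = [False, False, False, True]  # assumed: tokens of target entities

overall = perplexity(log_probs, all_tokens)
entity = perplexity(log_probs, entity_tokens)
target = perplexity(log_probs, target_tokens)
print(overall, entity, target)
```

Under this reading, target and entity perplexity isolate how well the model predicts exactly the tokens that stored facts should help with, while overall perplexity checks that general language modeling quality is not harmed.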

- Perplexity Results: MAuLLM demonstrated significantly improved performance across all perplexity metrics compared to baselines. The model showed particular strength in handling target entities, which directly relates to its enhanced memory interaction capabilities.

- Memory Interaction Analysis: The analysis showed how explicit memory interaction through read and write operations contributes to the model's performance, particularly in reducing hallucinated content and improving factuality.

- Scalability and Efficiency: The memory system's structure allows it to scale effectively with minimal impact on performance, even as the size of the stored knowledge increases.

Implications and Future Work

The introduction of MAuLLM represents a significant step toward enhancing the factual grounding and interpretability of LLMs. The architecture promises improvements in handling complex, knowledge-intensive tasks by effectively leveraging structured, long-term memory.

- Practical Implications: The ability to inspect and edit the memory schema allows stored knowledge to be managed and used more reliably, which is crucial for applications that require high accuracy, such as automated content generation and complex data interaction tasks.

- Theoretical Implications: This approach pushes forward the understanding of memory utilization in neural models, suggesting that structured and explicit memory can significantly enhance model capabilities without compromising performance.

- Future Developments: Further research could explore more sophisticated memory structures and the integration of MAuLLM with other modalities of data, potentially leading to even more robust models capable of cross-domain knowledge utilization.

In summary, MAuLLM’s introduction of a structured and explicitly manageable memory module within an LLM framework offers a promising avenue for advancing the capabilities of generative models, particularly in terms of their factual accuracy and operational interpretability.