LLMs and Collaborative Filtering: Advancements in Recommender Systems

The paper "LLMs meet Collaborative Filtering: An Efficient All-round LLM-based Recommender System" by Sein Kim et al. explores how large language models (LLMs) can be combined with collaborative filtering to build a recommender system named A-LLMRec. The system targets a known weakness of traditional collaborative filtering recommender systems (CF-RecSys), namely their poor performance in cold-start scenarios. It proposes an architecture intended to perform well in both cold and warm scenarios by leveraging collaborative knowledge together with textual information.

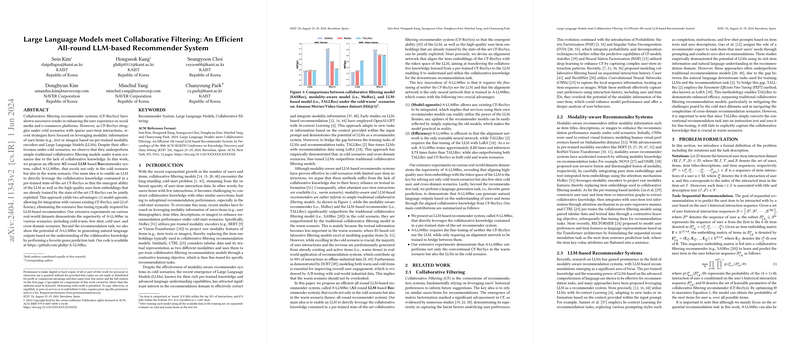

Collaborative filtering recommender systems have been instrumental in improving user experiences across many platforms, yet they struggle when user-item interactions are sparse. To address this, particularly in cold-start scenarios, previous work has incorporated modality information such as text or images through pre-trained modality encoders like BERT and Vision Transformer (ViT). However, these methods often underperform traditional collaborative filtering models in warm scenarios because they do not fully exploit the collaborative knowledge contained in abundant user-item interactions.
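To make the cold-start problem concrete, here is a minimal sketch of plain matrix factorization trained with SGD on observed interactions. It is a generic CF baseline chosen for illustration, not one of the models used in the paper; the function name `train_mf` and the toy data are assumptions. Because an item's embedding is only updated when that item appears in a training interaction, an item with no interactions keeps its random initialization, so any score computed for it is essentially noise.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def train_mf(
    interactions: List[Tuple[int, int, float]],
    n_users: int,
    n_items: int,
    dim: int = 4,
    epochs: int = 200,
    lr: float = 0.05,
    reg: float = 0.01,
    seed: int = 0,
) -> Tuple[Matrix, Matrix, Matrix]:
    """Matrix factorization fitted with SGD on observed (user, item, rating) triples.

    Returns user factors P, item factors Q, and a copy of Q at initialization.
    """
    rng = random.Random(seed)
    P = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_items)]
    init_Q = [row[:] for row in Q]
    for _ in range(epochs):
        for u, i, r in interactions:
            err = r - sum(p * q for p, q in zip(P[u], Q[i]))
            for k in range(dim):
                pu, qi = P[u][k], Q[i][k]
                # Only the rows of the user and item in this interaction are touched.
                P[u][k] += lr * (err * qi - reg * pu)
                Q[i][k] += lr * (err * pu - reg * qi)
    return P, Q, init_Q


# Items 0-2 have interactions; item 3 is a brand-new (cold-start) item.
interactions = [(0, 0, 1.0), (0, 1, 1.0), (1, 1, 1.0), (1, 2, 1.0), (2, 0, 1.0), (2, 2, 1.0)]
P, Q, init_Q = train_mf(interactions, n_users=3, n_items=4)

cold_item = 3
print("cold item embedding unchanged:", Q[cold_item] == init_Q[cold_item])  # True
```

This is why modality encoders help under cold start: a text embedding of an item's title or description exists even when its interaction history does not.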

To remedy this, the authors introduce A-LLMRec, which combines a state-of-the-art CF-RecSys with an LLM so that the LLM's inherent abilities can be used alongside the high-quality user and item embeddings produced by the CF-RecSys. A distinguishing feature of A-LLMRec is that it is model-agnostic: it can be integrated with any existing CF-RecSys, which broadens its practical applicability. The system is also designed for efficiency, since it avoids the extensive fine-tuning that LLM-based recommenders typically require.
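The integration described above can be pictured with a minimal sketch under stated assumptions; it is not the paper's implementation. It assumes that the pre-trained CF-RecSys is frozen and exposes item embeddings through a small interface (which is what makes the approach backbone-agnostic), that a small trainable projection maps those embeddings into the LLM's token-embedding space by aligning them with text embeddings of the same items, and that the projected vectors are appended to the prompt as soft tokens while the LLM itself stays frozen. `CFBackbone`, `LookupCF`, `Projector`, `align`, and `build_soft_prompt` are hypothetical names, the dimensions and text embeddings are toy values, and the LLM forward pass is omitted.

```python
import random
from typing import Dict, List, Protocol, Sequence

Vector = List[float]


class CFBackbone(Protocol):
    """Hypothetical interface: any pre-trained CF model that can return an item embedding."""

    def item_embedding(self, item_id: int) -> Vector: ...


class LookupCF:
    """Toy stand-in for a frozen CF-RecSys: a fixed table of item embeddings."""

    def __init__(self, table: Dict[int, Vector]) -> None:
        self.table = table

    def item_embedding(self, item_id: int) -> Vector:
        return self.table[item_id]


class Projector:
    """Trainable linear map from the CF embedding space (d_cf) to an LLM token space (d_llm)."""

    def __init__(self, d_cf: int, d_llm: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(d_cf)] for _ in range(d_llm)]

    def __call__(self, x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) for row in self.W]

    def step(self, x: Vector, target: Vector, lr: float) -> float:
        """One SGD step on 0.5 * ||W x - target||^2; returns the loss before the update."""
        diff = [yi - ti for yi, ti in zip(self(x), target)]
        for r, d in enumerate(diff):
            for c, xc in enumerate(x):
                self.W[r][c] -= lr * d * xc
        return 0.5 * sum(d * d for d in diff)


def align(backbone: CFBackbone, projector: Projector,
          text_emb: Dict[int, Vector], epochs: int = 200, lr: float = 0.1) -> float:
    """Fit only the projector; the CF backbone is never modified. Returns the last epoch's loss."""
    loss = 0.0
    for _ in range(epochs):
        loss = sum(projector.step(backbone.item_embedding(i), t, lr) for i, t in text_emb.items())
    return loss


def build_soft_prompt(prompt_tokens: List[Vector], item_ids: Sequence[int],
                      backbone: CFBackbone, projector: Projector) -> List[Vector]:
    """Append one projected CF 'token' per item to an already-embedded text prompt."""
    return prompt_tokens + [projector(backbone.item_embedding(i)) for i in item_ids]


cf = LookupCF({0: [1.0, 0.0], 1: [0.0, 1.0]})
text_emb = {0: [0.5, 0.5, 0.0], 1: [0.0, 0.5, 0.5]}  # hypothetical text embeddings
proj = Projector(d_cf=2, d_llm=3)

first_loss = align(cf, proj, text_emb, epochs=1)
final_loss = align(cf, proj, text_emb, epochs=200)
prompt = build_soft_prompt([[0.0, 0.0, 0.0]], [0, 1], cf, proj)
print(f"loss {first_loss:.4f} -> {final_loss:.2e}; prompt length {len(prompt)}")
```

In this sketch only the `Projector` weights are ever updated, so swapping in a different CF model just means supplying another class with an `item_embedding` method. Keeping the backbone and the (omitted) LLM frozen is what keeps training cheap relative to fine-tuning the whole LLM.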

Experiments on several real-world datasets show that A-LLMRec outperforms both traditional and contemporary baselines across multiple settings, including cold and warm scenarios, few-shot training, cold users, and cross-domain recommendation. In particular, its strong performance with few interactions and in cross-domain recommendation underscores its versatility and robustness.

The implications of this research are both practical and theoretical. Practically, A-LLMRec offers a scalable way to handle a range of recommendation scenarios, which can improve user experience on platforms that rely on recommender systems. Theoretically, the work shows the value of combining the latent collaborative knowledge captured by CF models with the contextual understanding of LLMs, and contributes a concrete way of enhancing traditional recommenders with modern deep learning architectures.

Future work could pursue deeper integration of personalized natural-language interaction with recommendation, for example by leveraging multi-modal LLMs, or could further tune the balance between collaborative knowledge and modality information. In addition, the system's model-agnostic design could encourage wider adoption and experimentation on recommendation tasks beyond those considered in the paper. Overall, A-LLMRec represents a significant step toward recommender systems that blend collaborative knowledge with the language understanding of LLMs.