Enhancing Recommender Systems with LLM Collaborative Filtering

Introduction to LLM-CF Framework

The recommender systems (RSs) domain has seen significant advancements with the integration of LLMs, particularly in generating knowledge-rich texts and embeddings to improve recommendation quality. However, a gap exists in fully leveraging LLMs for collaborative filtering (CF) due to their limited capacity to process extensive user and item data in a single prompt. Addressing this challenge, the proposed LLMs enhanced Collaborative Filtering (LLM-CF) framework introduces a novel approach that distils LLMs' world knowledge and reasoning capabilities directly into CF. LLM-CF operates by fine-tuning an LLM with a concise instruction-tuning method that balances the model's recommendation-specific and general functionalities. Through comprehensive experiments on three real-world datasets, LLM-CF is demonstrated to significantly enhance various backbone recommendation models and consistently outperform competitive baselines.

Key Contributions and Framework Overview

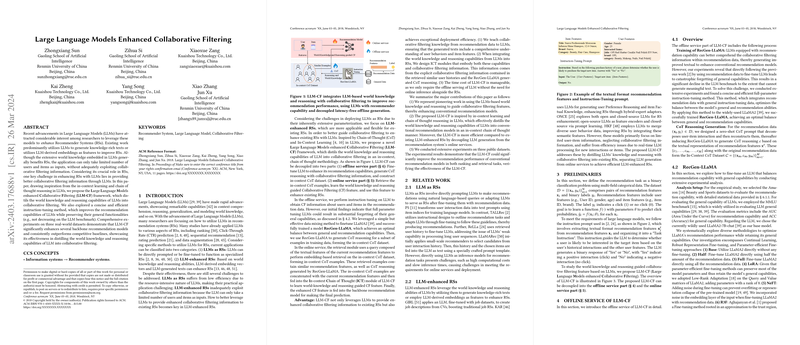

The LLM-CF framework comprises two main parts: an offline service for fine-tuning and generating CoT (Chain of Thought) reasoning and an online service that incorporates the distilled LLM knowledge into RSs. The framework's development was motivated by the limitations of existing LLM applications in RSs, which either underutilize the collaborative filtering information or suffer from deployment inefficiencies due to LLMs' extensive parameters.

- Distillation of World Knowledge: LLM-CF fine-tunes an LLM on a mix of recommendation and general instruction-tuning data to obtain a model, RecGen-LLaMA, which maintains an optimal balance between general functionalities and recommendation capabilities. This model is then utilized to generate CoT reasoning for a subset of training examples, creating an in-context CoT dataset.

- Learning Collaborative Filtering Features: The in-context CoT examples are retrieved based on similarity with current recommendation features, and the In-context Chain of Thought (ICT) module processes these examples to extract world-knowledge and reasoning guided collaborative filtering feature. This process leverages transformer decoder layers for the in-context learning of CoT examples, encapsulating the enhanced CF feature into existing RSs efficiently.

Experimental Validation and Implications

The framework underwent extensive testing on three public datasets, demonstrating its ability to significantly improve several conventional recommendation models across ranking and retrieval tasks. The results underscore LLM-CF's efficacy in leveraging LLMs for distilling world knowledge and reasoning into RSs, reflecting on several crucial insights:

- Enhancement of Collaborative Filtering: The integration of CoT reasoning and world knowledge from LLMs directly into collaborative filtering processes leads to a substantial improvement in recommendation accuracy.

- Deployment Efficiency: By decoupling LLM generation from the RS's online services through an efficient, two-part framework, LLM-CF addresses a significant challenge in deploying LLM-enhanced RSs, offering a scalable solution.

- Model Versatility: The application of LLM-CF across various backbone models and tasks (both ranking and retrieval) highlights its versatility and potential to generalize across different recommendation scenarios.

Future Directions

The demonstrated success of the LLM-CF framework opens several avenues for further research:

- Exploration of LLM Capacities: Future studies could explore the integration of other LLM capabilities, such as sentiment analysis or question-answering, into the recommendation process.

- Scalability and Efficiency: Investigating methods to further optimize the efficiency of LLM-CF, particularly in processing larger datasets or real-time recommendation scenarios, remains a critical area.

- Cross-Domain Applications: Extending the framework's application to other domains beyond traditional RSs, such as content curation or personalized search, could further validate its utility and impact.

In summary, the LLM-CF framework represents a significant step forward in utilizing LLMs to enhance RSs, particularly through the effective distillation of collaborative filtering information. Its implications for both practical deployments and theoretical advancements in AI and RSs highlight the potential of merging LLMs' cognitive capabilities with domain-specific applications.