Enhancing LLM Inference Efficiency with Prepacking: An Approach to Optimizing Prefilling

Introduction to Prepacking

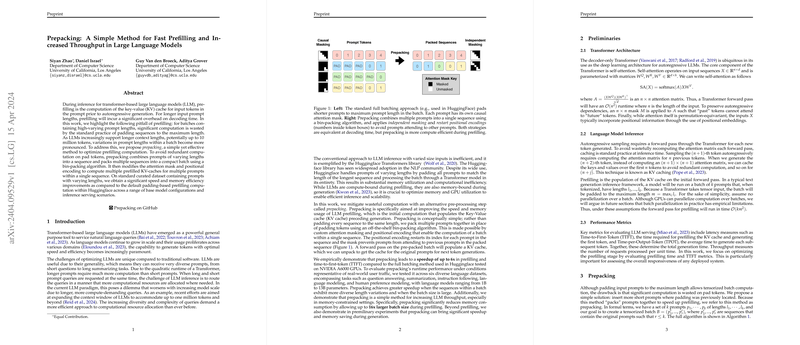

In transformer-based LLMs, prefilling (the forward pass over all prompt tokens that populates the key-value cache and yields the first output token) accounts for a significant portion of inference compute. This paper introduces "prepacking," a method for removing the computational waste that arises when a batch contains prompts of different lengths. The conventional practice of padding every prompt in a batch to the length of the longest one spends computation and memory on padding tokens. Prepacking instead packs prompts of varying lengths into compact sequences and adjusts the attention masks and positional encodings so that each prompt is still processed independently. This substantially improves the speed and memory efficiency of the prefilling stage.

The Problem with Conventional Prefilling

The standard approach to handling prompts of different lengths is to pad shorter sequences to the length of the longest prompt in the batch. This enables batched processing, but every padding token still consumes computation and memory. The waste grows as models support longer context lengths and as the gap between the shortest and longest prompts in a batch widens.
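To make the waste concrete, the hypothetical helper below counts the token slots a padded batch occupies versus the tokens that carry real prompt content. It is a minimal sketch that counts tokens only. It ignores that attention cost grows quadratically with sequence length, which makes padding even more expensive in practice.

```python
from typing import List, Tuple


def padding_overhead(prompt_lengths: List[int]) -> Tuple[int, int, float]:
    """Hypothetical helper: (real tokens, padded slots, fraction of slots wasted).

    Padding every prompt to the longest one makes the batch occupy
    len(prompt_lengths) * max(prompt_lengths) token slots.
    """
    real = sum(prompt_lengths)
    padded = len(prompt_lengths) * max(prompt_lengths)
    return real, padded, 1 - real / padded


print(padding_overhead([100, 110, 120, 128]))  # real=458, slots=512, about 10.5% padding
print(padding_overhead([8, 16, 32, 1024]))     # real=1080, slots=4096, about 74% padding
```

With similar prompt lengths roughly a tenth of the slots are padding. A single long outlier pushes that to about three quarters.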

Prepacking: An Overview

Prepacking addresses the shortcomings of traditional prefilling by:

- Dynamically combining multiple prompts into a single sequence within a batch, thereby replacing padding tokens with actual prompt content.

- Employing a bin-packing algorithm to decide which prompts share a sequence, minimizing the number of packed sequences and hence the padding that remains.

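- Implementing custom attention masking and positional encoding adjustments so that prompts remain independent within a packed sequence: each token attends only to earlier tokens of its own prompt, and positions restart at zero for each prompt.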
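The packing step can be sketched with first-fit decreasing, a standard bin-packing heuristic. This is an illustrative assumption, not necessarily the routine the paper uses. `pack_prompts` is a hypothetical helper, and the bin capacity is assumed to be the length of the longest prompt in the batch, so packing never makes a row longer than padding would.

```python
from typing import List


def pack_prompts(prompt_lengths: List[int], capacity: int) -> List[List[int]]:
    """Hypothetical helper: group prompt indices into bins of total length <= capacity.

    First-fit decreasing: visit prompts longest-first and place each one in the
    first bin with enough free space, opening a new bin if none fits.
    """
    if any(n > capacity for n in prompt_lengths):
        raise ValueError("every prompt must fit within the bin capacity")
    order = sorted(range(len(prompt_lengths)), key=lambda i: prompt_lengths[i], reverse=True)
    bins: List[List[int]] = []  # prompt indices assigned to each bin
    free: List[int] = []        # remaining capacity of each bin
    for i in order:
        n = prompt_lengths[i]
        for b in range(len(bins)):
            if free[b] >= n:
                bins[b].append(i)
                free[b] -= n
                break
        else:  # no existing bin had room
            bins.append([i])
            free.append(capacity - n)
    return bins


lengths = [8, 16, 32, 1024, 500, 300]
packed = pack_prompts(lengths, capacity=max(lengths))
print(packed)  # [[3], [4, 5, 2, 1, 0]]
```

Here six prompts that would need six padded rows of 1,024 tokens fit into two rows: the 1,024-token prompt alone, and the other five (856 tokens in total) sharing the second.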

Through these mechanisms, prepacking significantly reduces the computational load associated with processing padded tokens, thus enhancing both the speed and memory efficiency of LLM inference.
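The masking and position adjustments from the third mechanism above can be illustrated for a single packed row. In this sketch (the function name and the use of plain Python lists are assumptions for illustration), a token may attend to a key only if both belong to the same prompt and the key does not come later in the row. This yields a block-diagonal causal mask, and position IDs restart at zero for each prompt. How these tensors are passed to a particular model's forward pass is not shown.

```python
from typing import List, Tuple


def packed_mask_and_positions(
    segment_lengths: List[int], row_length: int
) -> Tuple[List[List[bool]], List[int]]:
    """Hypothetical helper: attention mask and position IDs for one packed row.

    mask[q][k] is True when query token q may attend to key token k.
    """
    segment_ids: List[int] = []  # which prompt each slot belongs to
    positions: List[int] = []    # position ID of each slot
    for seg, n in enumerate(segment_lengths):
        segment_ids.extend([seg] * n)
        positions.extend(range(n))  # restart positions at 0 for every prompt

    pad = row_length - len(segment_ids)
    if pad < 0:
        raise ValueError("packed prompts exceed the row length")
    # Trailing padding gets segment -1: it only sees other padding,
    # and no real token ever attends to it.
    segment_ids.extend([-1] * pad)
    positions.extend([0] * pad)

    # Block-diagonal causal mask: same segment and key not after query.
    mask = [
        [segment_ids[q] == segment_ids[k] and k <= q for k in range(row_length)]
        for q in range(row_length)
    ]
    return mask, positions


# Two prompts (3 and 2 tokens) packed into a 6-slot row with one pad slot.
mask, pos = packed_mask_and_positions([3, 2], row_length=6)
print(pos)  # [0, 1, 2, 0, 1, 0]
for row in mask:
    print("".join("1" if allowed else "." for allowed in row))
```

Because the mask blocks attention across prompt boundaries and positions restart, each prompt sees exactly the context and positions it would have in its own unpadded sequence.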

Empirical Validation

The paper evaluates prepacking across several datasets and models. Key findings include:

- Speedup and Efficiency: Compared to conventional padding methods, prepacking achieves up to a 6x speedup in prefilling and time-to-first-token (TTFT) metrics, alongside substantial memory usage reductions, enabling up to 16x larger batch sizes for the prefilling phase.

- Scalability and Generalization: The benefits of prepacking hold across different models and datasets. They are largest for batches with wide variation in prompt length and for large batch sizes.

- Future Applications: Preliminary results suggest that the principles of prepacking could also augment the efficiency of the generation phase, potentially opening new avenues for further optimizations in LLM serving.

Analytical Insights and Limitations

The paper’s analysis shows that prepacking’s advantage depends on the distribution of prompt lengths within a batch: the more padding a batch would otherwise need, the more computation prepacking saves on the GPU. However, the paper acknowledges practical limitations, including the computational complexity of bin packing (finding an optimal packing is NP-hard, so heuristics are used in practice) and potential trade-offs in real-time LLM serving.

Conclusion and Future Directions

By introducing prepacking, the paper offers a compelling solution to a prevalent inefficiency in LLM inference, backed by strong empirical evidence. As LLMs continue to grow in scale and capability, optimizing procedures such as prefilling remains critical for their practical deployment. Looking ahead, the ideas underlying prepacking could inspire further innovations in LLM serving strategies, potentially extending its advantages beyond prefilling to entire inference pipelines.