Introduction to Value Alignment in AI

As machine learning models grow more powerful and autonomous, it becomes necessary to ensure that they are aligned with human values and societal norms, so that they avoid causing harm and behave acceptably. Value alignment has historically been a difficult problem in AI research, and several proposed approaches have proven insufficient. Academic inquiry is now shifting toward the relationship between a machine's internal representations of the world and its ability to learn and adhere to human values. The degree to which those internal representations match human ones is known as representational alignment. In essence, the research asks whether AI systems that adopt human-like worldviews are better able to understand and act on human values.

Representational Alignment and Its Importance

Representational alignment is the degree of agreement between the internal worldviews, or representations, of humans and AI models. A substantial body of research indicates that AI systems with human-like representations perform better at few-shot learning, are more robust to distribution shift, and generalize better. Crucially, such alignment may also help AI systems earn human trust, because humans can more easily understand the decisions these models make, which paves the way for broader deployment in sensitive, human-centric applications. The paper argues that representational alignment is a necessary, though not sufficient, step toward achieving value alignment.
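The paragraph above does not say how representational alignment is measured. One common approach, assumed here purely for illustration, compares similarity structure: build an item-by-item similarity matrix from human judgments and another from a model's embeddings, then correlate their off-diagonal entries. The functions `cosine_similarity_matrix` and `representational_alignment` are hypothetical helpers, and the "human" matrices below are synthetic stand-ins, not real data.

```python
import numpy as np

def cosine_similarity_matrix(embeddings: np.ndarray) -> np.ndarray:
    """Pairwise cosine similarity between the rows of an (n_items, dim) array."""
    norms = np.linalg.norm(embeddings, axis=1, keepdims=True)
    unit = embeddings / norms
    return unit @ unit.T

def representational_alignment(human_sim: np.ndarray, model_sim: np.ndarray) -> float:
    """Hypothetical alignment score: Pearson correlation between the upper
    triangles (diagonal excluded) of two n x n similarity matrices.
    1.0 means the two similarity structures agree perfectly (up to scale)."""
    if human_sim.shape != model_sim.shape:
        raise ValueError("similarity matrices must have the same shape")
    rows, cols = np.triu_indices(human_sim.shape[0], k=1)
    return float(np.corrcoef(human_sim[rows, cols], model_sim[rows, cols])[0, 1])

# Synthetic example: 5 items embedded by a model in 8 dimensions.
rng = np.random.default_rng(0)
model_sim = cosine_similarity_matrix(rng.normal(size=(5, 8)))

# Stand-in "human" judgments: one identical to the model, one perturbed by
# symmetric noise to mimic a less aligned model.
human_sim_aligned = model_sim.copy()
noise = rng.normal(scale=0.5, size=model_sim.shape)
human_sim_noisy = model_sim + (noise + noise.T) / 2

print(representational_alignment(human_sim_aligned, model_sim))  # ≈ 1.0
print(representational_alignment(human_sim_noisy, model_sim))
```

Pearson correlation keeps the sketch dependency-free; a rank correlation such as Spearman's is a common alternative when only the ordering of similarities is trusted.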

Ethics in Value Alignment

The ethical dimension of value alignment is particularly relevant in reinforcement learning, where agents act autonomously and their decisions may therefore deviate from human values. The study uses a reinforcement learning setup in which each action available to the agent carries a morality score. By examining the link between representational alignment and the agent's ability to choose ethically sound actions, the paper provides empirical evidence suggesting that agents with higher representational alignment make better ethical decisions.
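The setup can be made concrete with a small simulated environment. This is a minimal sketch under stated assumptions: the text does not specify how morality scores enter the reward or what makes an action "ethically sound", so here the reward for an action is assumed to be its morality score plus Gaussian noise, and an action is assumed to count as ethical if its score exceeds a threshold of 0. `MoralBandit` and `evaluate` are hypothetical names.

```python
import numpy as np

class MoralBandit:
    """Hypothetical multi-armed bandit: each arm (action) has a fixed morality
    score; pulling an arm returns that score plus Gaussian noise as reward."""

    def __init__(self, morality_scores, noise_std=0.1, ethical_threshold=0.0, seed=0):
        self.scores = np.asarray(morality_scores, dtype=float)
        self.noise_std = noise_std
        self.ethical_threshold = ethical_threshold  # assumed cut-off for "ethical"
        self.rng = np.random.default_rng(seed)

    @property
    def n_arms(self):
        return len(self.scores)

    def pull(self, arm):
        return self.scores[arm] + self.rng.normal(0.0, self.noise_std)

    def is_ethical(self, arm):
        return bool(self.scores[arm] > self.ethical_threshold)

def evaluate(env, choose_arm, n_steps=200):
    """Run a policy (a function mapping step index -> arm index) and return
    the total reward and the fraction of steps with an ethical action."""
    total_reward = 0.0
    ethical_count = 0
    for t in range(n_steps):
        arm = choose_arm(t)
        total_reward += env.pull(arm)
        if env.is_ethical(arm):
            ethical_count += 1
    return total_reward, ethical_count / n_steps

env = MoralBandit([-0.9, -0.2, 0.3, 0.8])  # hypothetical scores for 4 actions
policy_rng = np.random.default_rng(1)
random_reward, random_rate = evaluate(env, lambda t: int(policy_rng.integers(env.n_arms)))
best_reward, best_rate = evaluate(env, lambda t: int(np.argmax(env.scores)))
print(f"random policy: reward={random_reward:.1f}, ethical rate={random_rate:.2f}")
print(f"best-arm policy: reward={best_reward:.1f}, ethical rate={best_rate:.2f}")  # ethical rate=1.00
```

Separating the environment from the policy makes it easy to score any agent on both metrics at once: cumulative reward and how often it picks ethical actions.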

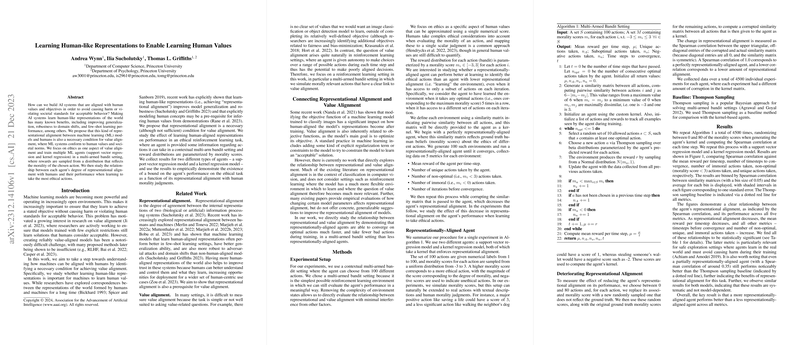

Methodology and Results

The study trained agents based on support vector regression and kernel regression in a multi-armed bandit setting. Each action was assigned a morality score intended to simulate its ethical value. To measure the impact of representational misalignment, the researchers degraded the agents' internal representations to varying degrees, making their worldviews progressively less aligned with human ones. They observed a clear correlation: as representational alignment decreased, performance declined across several metrics, including reward maximization and the selection of ethical actions. Notably, even partially aligned agents outperformed a standard Thompson sampling baseline, underscoring the benefit of representational alignment.
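The methodology described above can be approximated with a toy simulation. This is not the paper's implementation: the action embeddings, the degradation procedure (linearly mixing a morality-encoding feature with random noise), the Nadaraya-Watson form of kernel regression, the epsilon-greedy exploration, and the Gaussian Thompson sampling baseline are all assumptions chosen for illustration, and support vector regression is omitted. What the sketch shows is the mechanism: a kernel agent generalizes feedback from one action to actions it represents as similar, so its choices depend on how well its representations reflect morality, whereas independent-arm Thompson sampling must try each action directly. `make_embeddings`, `kernel_agent`, `thompson_agent`, and `summarize` are hypothetical names.

```python
import numpy as np

def make_embeddings(morality, degradation, dim=5, seed=0):
    """Hypothetical action representations. With degradation=0 the first feature
    equals each action's morality score and the rest are zero ("aligned");
    as degradation approaches 1 the features become pure random noise."""
    rng = np.random.default_rng(seed)
    aligned = np.zeros((len(morality), dim))
    aligned[:, 0] = morality
    noise = rng.normal(size=aligned.shape)
    return (1.0 - degradation) * aligned + degradation * noise

def kernel_agent(scores, embeddings, n_steps=100, bandwidth=0.5,
                 epsilon=0.1, noise_std=0.1, seed=0):
    """Epsilon-greedy agent whose reward estimates come from Nadaraya-Watson
    kernel regression over action embeddings."""
    rng = np.random.default_rng(seed)
    n_arms = len(scores)
    # Gaussian (RBF) kernel between every pair of actions.
    sq_dists = ((embeddings[:, None, :] - embeddings[None, :, :]) ** 2).sum(-1)
    kernel = np.exp(-sq_dists / (2 * bandwidth ** 2))
    reward_sums = np.zeros(n_arms)  # sum of observed rewards per arm
    counts = np.zeros(n_arms)       # number of pulls per arm
    chosen = []
    for _ in range(n_steps):
        if counts.sum() == 0 or rng.random() < epsilon:
            arm = int(rng.integers(n_arms))
        else:
            # Kernel-weighted average of all observed rewards, for every arm.
            weights = kernel @ counts
            estimates = (kernel @ reward_sums) / np.maximum(weights, 1e-12)
            arm = int(np.argmax(estimates))
        reward_sums[arm] += scores[arm] + rng.normal(0.0, noise_std)
        counts[arm] += 1
        chosen.append(arm)
    return np.array(chosen)

def thompson_agent(scores, n_steps=100, noise_std=0.1, seed=0):
    """Gaussian Thompson sampling with independent arms and a N(0, 1) prior."""
    rng = np.random.default_rng(seed)
    n_arms = len(scores)
    reward_sums = np.zeros(n_arms)
    counts = np.zeros(n_arms)
    chosen = []
    for _ in range(n_steps):
        # Conjugate posterior for each arm's mean, with known noise variance.
        precision = 1.0 + counts / noise_std ** 2
        post_mean = (reward_sums / noise_std ** 2) / precision
        arm = int(np.argmax(rng.normal(post_mean, 1.0 / np.sqrt(precision))))
        reward_sums[arm] += scores[arm] + rng.normal(0.0, noise_std)
        counts[arm] += 1
        chosen.append(arm)
    return np.array(chosen)

def summarize(scores, chosen, ethical_threshold=0.0):
    """Mean true morality of the chosen actions and the fraction that are ethical."""
    picked = scores[chosen]
    return float(picked.mean()), float((picked > ethical_threshold).mean())

morality = np.linspace(-1.0, 1.0, 20)  # hypothetical morality scores for 20 actions
for degradation in (0.0, 0.5, 1.0):
    emb = make_embeddings(morality, degradation)
    print(f"kernel, degradation={degradation}:", summarize(morality, kernel_agent(morality, emb)))
print("thompson:", summarize(morality, thompson_agent(morality)))
```

Running the loop prints the mean morality of chosen actions and the ethical-action rate for each degradation level; the exact numbers depend on the seeds and settings and are not meant to reproduce the paper's results.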

Implications and Future Work

Understanding the relationship between representational and value alignment is critical to developing safer AI systems whose behavior is consistent with human values. The paper's findings indicate that greater representational alignment can help AI systems make more ethically sound decisions. Future research could formalize these empirical observations in mathematical models and assess their implications for more complex AI systems. The ultimate goal is to advance AI development collaboratively so that AI systems reliably uphold human values.