Introducing OSWorld: A Real Computer Environment for Multimodal Agent Training and Evaluation

Overview of OSWorld

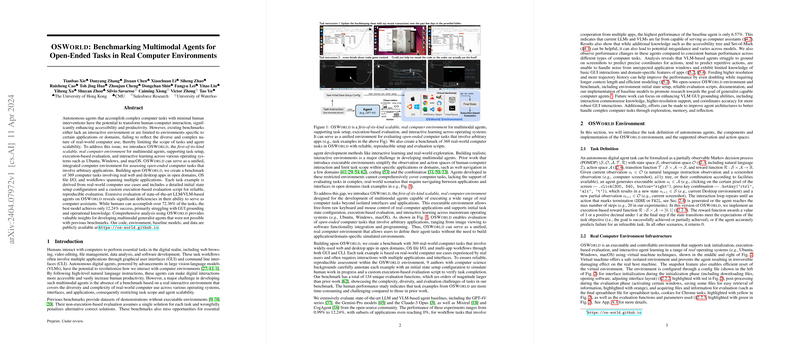

OSWorld is introduced as the first environment of its kind: a fully interactive, scalable, real computer environment for developing and assessing autonomous digital agents that can handle diverse computer tasks. Unlike prior benchmarks, which either focus on specific applications or lack an interactive environment, OSWorld supports a broad range of open-ended tasks across different operating systems (OS), including Ubuntu, Windows, and macOS. It is designed to evaluate how well multimodal agents execute real-world computer tasks involving web and desktop applications, file operations, and workflows that span multiple applications, addressing the limitations of existing benchmarks.

Technical Contributions and Environment Capabilities

OSWorld's architecture supports task setup, execution-based evaluation, and interactive learning in a realistic computer-interaction setting. It runs on virtual machines, which lets tasks scale across operating systems and applications, and agents act through raw keyboard and mouse controls. A notable contribution is a benchmark of 369 tasks drawn from real-world computer usage and spanning a wide range of applications and domains. Each task is carefully designed with a starting-state configuration and an execution-based evaluation script, providing a robust framework for reproducible and reliable assessment of agent performance.
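To make the task structure described above more concrete, here is a minimal Python sketch, assuming a task can be modeled as an instruction, a list of setup steps that produce the starting state, and an evaluator that inspects the final state and returns a score. Everything here is hypothetical and illustrative: the `Task` class, `run_episode`, and the dictionary standing in for a virtual machine's file system are not OSWorld's actual API, and the toy agents mutate state directly instead of issuing raw keyboard and mouse actions.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical toy stand-in for a VM snapshot: absolute path -> file contents.
FileSystem = dict[str, str]


@dataclass
class Task:
    """Hypothetical task record pairing an instruction with setup and a checker."""
    task_id: str
    instruction: str
    # Execution-based evaluator: inspects the final machine state, returns a score.
    evaluate: Callable[[FileSystem], float]
    # Setup steps replayed on a fresh machine to reach the starting state.
    setup: list[Callable[[FileSystem], None]] = field(default_factory=list)

    def initial_state(self) -> FileSystem:
        fs: FileSystem = {}
        for step in self.setup:
            step(fs)
        return fs


def run_episode(task: Task, agent: Callable[[str, FileSystem], None]) -> float:
    """Rebuild the starting state, let the agent act, then score the outcome."""
    state = task.initial_state()
    agent(task.instruction, state)  # the agent changes the machine state in place
    return task.evaluate(state)


# Illustrative task (hypothetical paths): rename a draft without altering it.
DRAFT = "/home/user/Documents/draft.txt"
FINAL = "/home/user/Documents/report.txt"


def seed_draft(fs: FileSystem) -> None:
    fs[DRAFT] = "quarterly numbers"


def check_rename(fs: FileSystem) -> float:
    # Pass only if the new file has the original contents and the old one is gone.
    return 1.0 if fs.get(FINAL) == "quarterly numbers" and DRAFT not in fs else 0.0


rename_task = Task(
    task_id="demo-rename",
    instruction="Rename draft.txt in Documents to report.txt.",
    evaluate=check_rename,
    setup=[seed_draft],
)


def good_agent(instruction: str, fs: FileSystem) -> None:
    # Stands in for the GUI or terminal actions that would perform the rename.
    fs[FINAL] = fs.pop(DRAFT)


def idle_agent(instruction: str, fs: FileSystem) -> None:
    pass  # takes no action, so the checker should fail


print(run_episode(rename_task, good_agent))  # 1.0
print(run_episode(rename_task, idle_agent))  # 0.0
```

Because every episode rebuilds its starting state from the setup steps, repeated runs are scored against the same initial conditions, which is what keeps execution-based evaluation reproducible in this sketch.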

Evaluation of Modern LLM and VLM Agents

The paper reports an extensive evaluation of contemporary large language models (LLMs) and vision-language models (VLMs) in the OSWorld environment. Despite significant advances in multimodal agent development, the evaluation reveals a considerable performance gap between human users and the best-performing models: humans successfully complete over 72% of the tasks, while the best model achieves only a 12.24% success rate. This gap underscores how far current models remain from mastering GUI grounding and operational knowledge, and from executing long-horizon tasks that involve complex workflows across multiple applications.
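The success-rate figures above are aggregates over per-task outcomes. The sketch below assumes each execution-based checker returns a score in [0, 1] and that a success rate is the mean score expressed as a percentage; the function names, domain labels, and outcomes are hypothetical and made up for illustration, not results from the paper.

```python
from collections import defaultdict


def success_rate(scores: list[float]) -> float:
    """Mean per-task score as a percentage (assumes scores lie in [0, 1])."""
    if not scores:
        raise ValueError("no tasks evaluated")
    return 100.0 * sum(scores) / len(scores)


def success_by_domain(results: list[tuple[str, float]]) -> dict[str, float]:
    """Group (domain, score) pairs and report a success rate per domain."""
    grouped: dict[str, list[float]] = defaultdict(list)
    for domain, score in results:
        grouped[domain].append(score)
    return {domain: success_rate(s) for domain, s in grouped.items()}


# Toy outcomes for illustration only; these are not results from the paper.
toy_results = [
    ("office", 1.0), ("office", 0.0), ("office", 0.0),
    ("os", 1.0), ("os", 1.0),
    ("workflow", 0.0), ("workflow", 0.0), ("workflow", 0.0),
]

overall = success_rate([score for _, score in toy_results])
print(f"overall: {overall:.2f}%")  # overall: 37.50%
print(success_by_domain(toy_results))
```

Breaking the rate down by domain is one simple way to see where an agent struggles, for example on workflows that span several applications.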

Insights and Implications for Future Research

The comprehensive analysis conducted with OSWorld yields several insights into the development of multimodal generalist agents. Models need a much stronger understanding of GUI elements and of how those elements function across diverse software. The findings suggest that refining agent architectures could improve exploration and decision-making, and they emphasize the importance of interactive learning and adaptation in real-world environments. The paper also speculates on improving VLMs' high-resolution image processing and precise action prediction, both of which are crucial for more robust and effective GUI interaction.

Conclusions and Future Directions

The introduction of OSWorld marks a significant step toward more capable and generalizable digital agents for autonomous computer interaction. By providing a rich, diverse, and realistic benchmarking environment, OSWorld sets the stage for future breakthroughs in agent capabilities. The research underscores the need for continued progress in multimodal agent technology, particularly in GUI understanding, long-horizon planning, and operational knowledge across applications and domains. As the field progresses, OSWorld will serve as a valuable resource for assessing and guiding the development of the next generation of digital agents.