Probing LLMs for Food-Related Cultural Knowledge

The paper, "Does Mapo Tofu Contain Coffee? Probing LLMs for Food-related Cultural Knowledge," examines the cultural knowledge encoded in large language models (LLMs), using food as a culturally significant domain. The authors introduce FmLAMA, a dataset for probing how well LLMs retrieve food-related cultural knowledge across different languages and cultural traditions.

The paper's primary finding is that current LLMs, although trained on diverse data, show a marked bias toward food knowledge associated with the United States. The authors also show that adding the relevant cultural context to probing prompts substantially improves the models' ability to retrieve accurate cultural knowledge. This ability is highly sensitive to the interplay between the probing language, the model architecture, and the cultural context.
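To make the effect of cultural context concrete, here is a minimal sketch of a cloze-style probe built with and without a context prefix. The templates, the `build_probe` helper, and the use of the origin country as the context are illustrative assumptions, not the paper's exact prompts.

```python
from typing import Optional

# Hypothetical cloze templates; FmLAMA's actual prompt wording may differ.
BASE_TEMPLATE = "{dish} is made with [MASK]."
CONTEXT_TEMPLATE = "{dish} is a dish from {country}. {dish} is made with [MASK]."


def build_probe(dish: str, country: Optional[str] = None) -> str:
    """Return a cloze-style probe, optionally prefixed with cultural context."""
    if country is None:
        return BASE_TEMPLATE.format(dish=dish)
    return CONTEXT_TEMPLATE.format(dish=dish, country=country)


print(build_probe("Mapo tofu"))
print(build_probe("Mapo tofu", country="China"))
```

Comparing a model's predictions for the two variants of the same query is one way to measure how much the added context helps.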

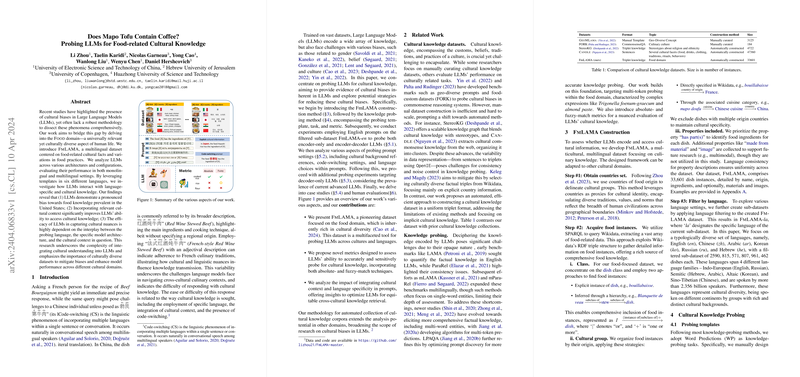

The methodology centers on the automated construction of FmLAMA, a dataset that captures food-related cultural knowledge. The authors draw extensive food-related data from Wikidata and use language-specific attributes to sort it into culturally related subsets. Probing models with this dataset exposes current LLMs' difficulty with culturally implicit knowledge and underscores the importance of diverse data for accurate knowledge retrieval.
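The grouping step can be sketched as follows, assuming each Wikidata-derived record carries a dish name, an origin country, and an ingredient list. The records below are toy examples, not FmLAMA entries, and `group_by_origin` is a hypothetical helper. A real pipeline would presumably fetch records from Wikidata (for instance through the country of origin property, P495) rather than hard-code them, and the paper's actual categorization rules may differ.

```python
from collections import defaultdict
from typing import Dict, List

# Toy records shaped like Wikidata-derived facts; illustrative only,
# not entries taken from FmLAMA.
records = [
    {"dish": "Mapo tofu", "origin": "China", "ingredients": ["tofu", "chili"]},
    {"dish": "Kung Pao chicken", "origin": "China", "ingredients": ["chicken", "peanut"]},
    {"dish": "Buffalo wings", "origin": "United States", "ingredients": ["chicken", "hot sauce"]},
]


def group_by_origin(rows: List[dict]) -> Dict[str, List[dict]]:
    """Bucket dish records by origin country, yielding one subset per culture."""
    buckets: Dict[str, List[dict]] = defaultdict(list)
    for row in rows:
        buckets[row["origin"]].append(row)
    return dict(buckets)


subsets = group_by_origin(records)
for country, rows in sorted(subsets.items()):
    print(country, [r["dish"] for r in rows])
```

Per-culture subsets like these make it possible to compare a model's accuracy on, say, Chinese versus American dishes, which is where a bias toward U.S. food knowledge would show up.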

Probing encoder-only, encoder-decoder, and decoder-only models reveals differences in how well each architecture accesses cultural knowledge. Monolingual English models performed better in English-speaking contexts, whereas multilingual models showed no clear advantage in non-English contexts, pointing to a possible imbalance in how languages and cultures are represented in the pretraining data.
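Each architecture family is queried in a slightly different form. The sketch below shows one plausible way to adapt a single probe to each family: BERT's `[MASK]` token for encoder-only models, T5's `<extra_id_0>` sentinel for encoder-decoder models, and a plain prefix for decoder-only models to continue. The `format_probe` helper and the template wording are assumptions for illustration.

```python
# Probe formats per architecture family. BERT-style encoders predict a
# "[MASK]" token, T5-style encoder-decoders fill the "<extra_id_0>" sentinel,
# and decoder-only models continue a prefix. The wording is hypothetical.
def format_probe(dish: str, architecture: str) -> str:
    """Adapt one cloze-style probe to the given architecture family."""
    if architecture == "encoder-only":
        return f"{dish} is made with [MASK]."
    if architecture == "encoder-decoder":
        return f"{dish} is made with <extra_id_0>."
    if architecture == "decoder-only":
        return f"{dish} is made with"
    raise ValueError(f"unknown architecture: {architecture!r}")


for arch in ("encoder-only", "encoder-decoder", "decoder-only"):
    print(arch, "->", format_probe("Mapo tofu", arch))
```

For encoder-only models the mask token depends on the tokenizer (RoBERTa, for example, uses `<mask>`), so a real probe would take it from the model's tokenizer rather than hard-code it.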

A further contribution is the evaluation setup, which pairs the standard ranking metric Mean Average Precision (mAP) with Mean Word Similarity (mWS). Because mWS scores predictions by their similarity to the gold answers rather than requiring exact matches, the two metrics together give a more nuanced picture of performance, crediting near-miss predictions that strict string matching would count as wrong. This flexibility matters when capturing cultural nuances, where several wordings of an answer may be acceptable.
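A minimal sketch of both metrics follows. The `average_precision` routine uses the standard ranked-retrieval definition: precision@k is summed at each rank that hits a new gold answer and divided by the number of gold answers. The `word_similarity` function is an assumed reading of mWS in which each prediction is scored by its best cosine similarity to any gold answer; it uses a toy embedding table in place of real word vectors, so the paper's exact formula may differ.

```python
import math
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]


def average_precision(ranked: Sequence[str], gold: Sequence[str]) -> float:
    """Standard AP: precision@k summed at each rank that hits a new gold
    answer, divided by the number of gold answers."""
    gold_set = set(gold)
    if not gold_set:
        return 0.0
    hits, total, seen = 0, 0.0, set()
    for k, pred in enumerate(ranked, start=1):
        if pred in gold_set and pred not in seen:
            seen.add(pred)
            hits += 1
            total += hits / k
    return total / len(gold_set)


def cosine(u: Vector, v: Vector) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def word_similarity(ranked: Sequence[str], gold: Sequence[str],
                    embed: Dict[str, Vector]) -> float:
    """Assumed per-query mWS: average best-match cosine similarity of predictions."""
    if not ranked or not gold:
        return 0.0
    scores = [max(cosine(embed[p], embed[g]) for g in gold) for p in ranked]
    return sum(scores) / len(scores)


def mean_over_queries(metric, queries, *extra) -> float:
    """Average a per-query metric over (ranked, gold) pairs, giving mAP or mWS."""
    if not queries:
        return 0.0
    return sum(metric(r, g, *extra) for r, g in queries) / len(queries)


# Toy 2-D "embeddings"; real word vectors would replace these.
EMBED: Dict[str, Vector] = {
    "tofu": (1.0, 0.0),
    "bean curd": (0.9, 0.1),
    "chili": (0.6, 0.8),
    "coffee": (0.0, 1.0),
}

queries: List[Tuple[List[str], List[str]]] = [
    (["coffee", "tofu"], ["tofu", "chili"]),
    (["bean curd", "chili"], ["tofu", "chili"]),
]
print("mAP:", mean_over_queries(average_precision, queries))
print("mWS:", mean_over_queries(word_similarity, queries, EMBED))
```

In the second toy query, "bean curd" counts as a miss under AP but earns nearly full credit under the similarity score because its vector is close to that of "tofu", which is the kind of near-miss a similarity-based metric is meant to reward.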

Moreover, the paper's case study on ingredient prediction offers a practical illustration of LLMs making repetitive, context-independent predictions. This points to a limitation of current LLMs: they fall back on generalized assumptions rather than context-aware cultural understanding, particularly when dealing with diverse culinary practices.
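One simple way to quantify the repetitive, context-independent behavior described in the case study is to check how often a single ingredient dominates a model's top-1 predictions across dishes. The `top1_repetition_rate` helper and the predictions below are hypothetical and are not outputs reported in the paper.

```python
from collections import Counter
from typing import Dict, List, Tuple


def top1_repetition_rate(predictions: Dict[str, List[str]]) -> Tuple[str, float]:
    """Return the most frequent top-1 prediction and the share of dishes it covers."""
    top1 = [ranked[0] for ranked in predictions.values() if ranked]
    if not top1:
        return "", 0.0
    word, count = Counter(top1).most_common(1)[0]
    return word, count / len(top1)


# Hypothetical ranked ingredient predictions for four dishes.
predictions = {
    "Mapo tofu": ["salt", "tofu"],
    "Sushi": ["salt", "rice"],
    "Tacos": ["salt", "corn"],
    "Pho": ["rice noodles", "beef"],
}
print(top1_repetition_rate(predictions))
```

A rate close to 1.0 would mean the model predicts the same ingredient regardless of the dish, which is the pattern the case study describes.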

The implications of this research are twofold. Practically, it stresses the need for training data that better reflects cultural diversity in order to improve model performance in real-world applications. Theoretically, it sheds light on how LLMs encode cultural knowledge, which can guide future pretraining and fine-tuning strategies aimed at reducing systematic biases.

Looking ahead, progress in natural language processing may depend on integrating more culturally diverse data sources. This could help make LLMs not merely repositories of information but systems whose answers reflect culture-specific knowledge, fostering better cross-cultural understanding and communication.

This paper is a valuable addition to the literature on cultural probing of language models, providing insights and a benchmark that can inform future work on addressing cultural biases and on improving how well LLMs capture global diversity in knowledge representation.