Improving the Understanding of Error Detection in LLMs through ReaLMistake Benchmark

Introduction

Recent advances in NLP have led to the widespread use of LLMs across applications ranging from chatbots to content generation. As reliance on these models grows, evaluating their output, and in particular detecting errors in their responses, has become necessary. Despite its importance, little research has focused specifically on error detection. Existing benchmarks often fail to capture the diversity and complexity of the errors LLMs make, which leaves a gap both in our understanding of the problem and in the development of more effective error detection strategies.

ReaLMistake: A New Benchmark for Error Detection

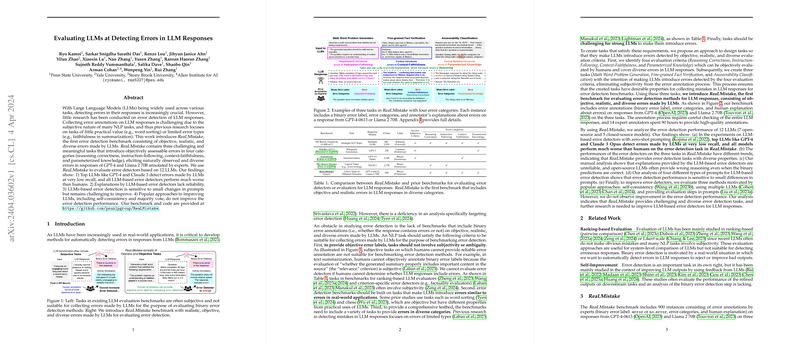

To address this gap, the paper introduces "ReaLMistake," a benchmark designed to evaluate error detection in responses generated by LLMs. ReaLMistake is distinctive in several respects:

- It consists of objective, realistic, and diverse errors made by LLMs, so evaluation on it reflects practical scenarios.

- The benchmark comprises three tasks, each designed to elicit a broad spectrum of errors across four categories: reasoning correctness, instruction-following, context-faithfulness, and parameterized knowledge. The tasks are constructed so that errors occur naturally and can be assessed objectively.

- The benchmark is backed by a large volume of expert annotations, which supports the quality of the dataset.

Insights from Evaluating LLMs with ReaLMistake

The authors used ReaLMistake to evaluate error detectors built on a range of LLMs, including state-of-the-art models such as GPT-4 and Llama 2 70B. The main findings are:

- Even top-performing LLMs detect errors with very low recall, and every LLM-based detector performs worse than human evaluators. Current LLMs therefore cannot yet be relied on to identify errors in LLM outputs.

- An analysis of explanation reliability shows that the quality of explanations given by LLM-based detectors varies substantially, particularly among open-source models.

- Conventional approaches to improving detectors, including self-consistency and combining multiple LLMs, did not notably improve detection performance.

- LLM-based error detection is sensitive to minor changes in prompt design. This leaves some room for prompt optimization, but significant improvements remain hard to achieve through simple modifications.
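
To make the terms in these findings concrete, here is a minimal sketch of how precision and recall could be computed for a binary error detector, and how self-consistency reduces to a majority vote over several sampled verdicts. The label convention (`True` means "the response contains an error"), the function names, and the toy data are assumptions for illustration; they are not taken from the paper or its code.

```python
from collections import Counter

def precision_recall_f1(gold, pred):
    """Score binary error detection; True means 'response contains an error'."""
    tp = sum(g and p for g, p in zip(gold, pred))          # errors correctly flagged
    fp = sum((not g) and p for g, p in zip(gold, pred))    # correct responses flagged
    fn = sum(g and (not p) for g, p in zip(gold, pred))    # errors that were missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def majority_vote(verdicts):
    """Self-consistency: aggregate sampled verdicts; ties count as 'no error'."""
    counts = Counter(verdicts)
    return counts[True] > counts[False]

# Hypothetical toy data: four erroneous responses, two correct ones.
gold = [True, True, True, True, False, False]
# A conservative detector that flags only one of the four errors.
pred = [True, False, False, False, False, False]
precision, recall, f1 = precision_recall_f1(gold, pred)

# Three sampled verdicts for one erroneous response; two of three miss the error.
voted = majority_vote([True, False, False])
```

In this toy case the detector never raises a false alarm (precision 1.0) but catches only one error in four (recall 0.25). The vote also comes out as "no error": when most samples miss the same mistake, aggregating them cannot recover it, which is one plausible reading of why voting-style methods gave little benefit.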

Implications and Future Directions

The ReaLMistake evaluation exposes current limitations of LLMs in error detection, with implications for both the theoretical understanding of LLM performance and the practical use of LLMs in real-world settings.

- The observed sensitivity to prompt design underscores the importance of careful prompt engineering for detection performance.

- The lack of improvement from conventional enhancement strategies suggests that new approaches to error detection are needed.

- The overall performance trends highlighted by the benchmark, including the notable gap between human and LLM detectors, present clear targets for future research in LLM error detection.
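
One simple way to quantify the prompt sensitivity mentioned above is to score the same detector under several prompt wordings and report the spread. The sketch below assumes a per-prompt score (for example F1) is already available; the function name, prompt labels, and scores are hypothetical and do not come from the paper.

```python
from statistics import mean, pstdev

def prompt_sensitivity(scores_by_prompt):
    """Summarize how much a detector's score moves across prompt variants."""
    values = list(scores_by_prompt.values())
    return {
        "mean": mean(values),
        "std": pstdev(values),                 # population standard deviation
        "range": max(values) - min(values),    # gap between best and worst prompt
        "best_prompt": max(scores_by_prompt, key=scores_by_prompt.get),
    }

# Hypothetical scores for one detector under three prompt wordings.
toy_scores = {"prompt_a": 0.50, "prompt_b": 0.75, "prompt_c": 0.25}
summary = prompt_sensitivity(toy_scores)
```

Reporting the range alongside the mean keeps a single lucky prompt from being mistaken for a real improvement in the detector.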

Conclusion

ReaLMistake fills a gap in the evaluation of LLMs by offering a benchmark for assessing error detection capabilities. The results clarify where current LLMs fall short in this area and suggest directions for future research and development. As the use of LLMs continues to grow, effective error detection will only become more important, which makes this work timely.