Evaluating Grammatical Error Correction Using LLMs

Introduction

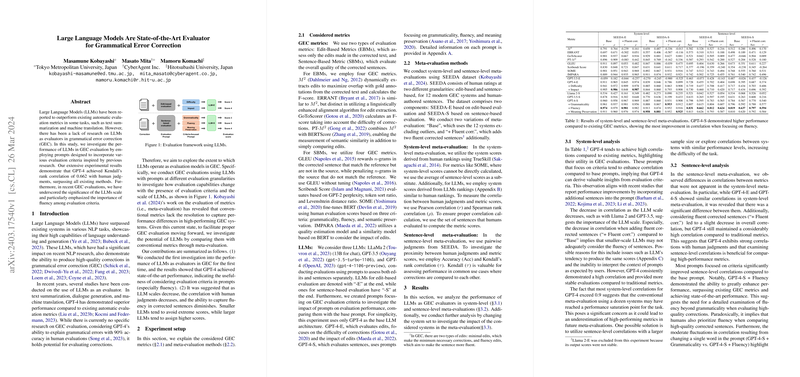

Using large language models (LLMs) to evaluate grammatical error correction (GEC) is an emerging area of interest in NLP. LLMs such as GPT-4 have performed strongly on tasks including text summarization and machine translation, but their use in GEC evaluation has remained relatively unexplored. This paper investigates how well LLMs evaluate GEC output, using prompts designed to capture a range of evaluation criteria. GPT-4 performs best, achieving a Kendall's rank correlation of 0.662 with human judgments and outperforming existing metrics. The paper also highlights the role of fluency among the evaluation criteria and the effect of LLM scale on evaluation performance.

Experiment Setup

Considered Metrics

Metrics for GEC evaluation can be categorized into Edit-Based Metrics (EBMs) and Sentence-Based Metrics (SBMs). EBMs score the individual edits a system makes to correct a sentence, while SBMs assess the overall quality of the corrected sentence. This paper considers several metrics in each category, such as ERRANT and GoToScorer for EBMs, and GLEU and IMPARA for SBMs.
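To make the edit-based view concrete, the following is a minimal sketch of how a set of system edits can be scored against reference edits with an F0.5 score, the precision-weighted measure that edit-based metrics such as ERRANT conventionally report. The `Edit` tuple layout, the `f_beta` helper, and the toy edits are illustrative assumptions, not the implementation of any particular metric.

```python
from typing import Set, Tuple

# Hypothetical edit representation: (start token index, end token index, replacement).
Edit = Tuple[int, int, str]


def f_beta(hyp_edits: Set[Edit], ref_edits: Set[Edit], beta: float = 0.5) -> float:
    """F-beta over exact-match edits; beta < 1 weights precision above recall."""
    tp = len(hyp_edits & ref_edits)   # edits the system and the reference share
    fp = len(hyp_edits - ref_edits)   # system edits absent from the reference
    fn = len(ref_edits - hyp_edits)   # reference edits the system missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    b2 = beta * beta
    return (1 + b2) * precision * recall / (b2 * precision + recall)


# Toy example for the source sentence "He go to school yesterday ."
ref = {(1, 2, "went")}                       # reference: "go" -> "went"
hyp = {(1, 2, "went"), (3, 4, "schools")}    # system adds one unnecessary edit
score = f_beta(hyp, ref)
print(round(score, 4))
```

A sentence-based metric, by contrast, would take only the corrected sentence (and possibly the source or a reference) and return a single quality score without enumerating edits.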

LLMs and Prompts

Three LLMs were evaluated: Llama 2, GPT-3.5, and GPT-4. Each was tested with different prompts, some targeting overall sentence quality and others targeting specific edits. The prompts emphasized different evaluation criteria, and the paper measured how each emphasis affected evaluation performance.
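As an illustration of a criterion-focused, sentence-level prompt, the sketch below builds a prompt for a set of candidate corrections and parses numeric scores from a model reply. The prompt wording, the 1 to 5 scale, the criterion descriptions, and the helper names `build_prompt` and `parse_scores` are all hypothetical; they are not the prompts used in the paper, and no LLM API is called here.

```python
import re
from typing import List, Optional

# Hypothetical criterion descriptions; only "fluency" is named in the text above.
CRITERIA = {
    "overall": "the overall quality of the correction",
    "fluency": "how natural and fluent the corrected sentence reads",
}


def build_prompt(source: str, corrections: List[str], criterion: str = "fluency") -> str:
    """Assemble a scoring prompt for one source sentence and its candidate corrections."""
    lines = [
        "You are evaluating grammatical error correction outputs.",
        f"Rate each correction from 1 (worst) to 5 (best), focusing on {CRITERIA[criterion]}.",
        f"Source: {source}",
    ]
    for i, text in enumerate(corrections, start=1):
        lines.append(f"Correction {i}: {text}")
    lines.append("Answer with one line per correction, in the form 'Correction i: score'.")
    return "\n".join(lines)


def parse_scores(reply: str, n: int) -> List[Optional[int]]:
    """Extract one integer score per correction; None where the reply omits it."""
    scores: List[Optional[int]] = [None] * n
    for match in re.finditer(r"Correction\s+(\d+)\s*:\s*([1-5])\b", reply):
        idx = int(match.group(1)) - 1
        if 0 <= idx < n:
            scores[idx] = int(match.group(2))
    return scores


prompt = build_prompt(
    "He go to school yesterday .",
    ["He went to school yesterday .", "He goes to school yesterday ."],
)
# A made-up reply standing in for real model output.
scores = parse_scores("Correction 1: 5\nCorrection 2: 2", n=2)
print(scores)
```

An edit-level variant would list the individual edits in the prompt instead of whole corrected sentences; the parsing step would stay the same.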

Results

System-Level Analysis

At the system level, GPT-4 consistently demonstrated superior performance, showing high correlations with human judgments. Prompts tailored to specific evaluation criteria generally enhanced performance, suggesting that GPT-4 can extract meaningful insights from these criteria. The reduction in performance with smaller LLMs reinforced the importance of LLM scale.

Sentence-Level Analysis

The sentence-level analysis revealed differences among metrics that were not evident at the system level. GPT-4, especially with fluency-focused prompts, achieved state-of-the-art performance, which suggests that fluency should be prioritized when evaluating high-quality corrections.
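Sentence-level agreement with human judgments is commonly summarized with Kendall's rank correlation, the statistic quoted in the introduction. The sketch below computes it from pairwise comparisons as (concordant - discordant) / (concordant + discordant), skipping pairs that are tied in either ranking. The tie handling and the toy scores are assumptions for illustration; the paper's exact protocol may differ.

```python
from itertools import combinations
from typing import Sequence


def kendall_tau(metric: Sequence[float], human: Sequence[float]) -> float:
    """Kendall's tau over all pairs that are untied in both score lists."""
    concordant = discordant = 0
    for i, j in combinations(range(len(metric)), 2):
        h = human[i] - human[j]
        m = metric[i] - metric[j]
        if h == 0 or m == 0:
            continue  # assumption: ignore pairs tied in either ranking
        if (h > 0) == (m > 0):
            concordant += 1   # metric and humans prefer the same correction
        else:
            discordant += 1   # metric and humans disagree on this pair
    total = concordant + discordant
    return (concordant - discordant) / total if total else 0.0


# Toy scores for four corrections of one source sentence (made-up values).
human_scores = [4, 3, 2, 1]
metric_scores = [0.9, 0.7, 0.8, 0.1]
tau = kendall_tau(metric_scores, human_scores)
print(round(tau, 3))
```

In this toy case five of the six pairs are ordered the same way by the metric and the human scores, so tau is (5 - 1) / 6.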

Further Analysis

Window analysis, which compares sets of systems with similar performance, showed that GPT-4 remains a robust evaluator and again pointed to the role of fluency. Conventional metrics, by contrast, frequently showed no correlation or a negative correlation within these sets, exposing their limitations in GEC evaluation.
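One way to picture a window analysis: rank the systems by human score, slide a fixed-size window over that ranking, and compute the metric-to-human correlation inside each window. The sketch below does this with Pearson correlation. The window size of 4, the choice of Pearson, and the toy scores are assumptions for illustration rather than the paper's settings.

```python
from math import sqrt
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns NaN if either input has zero variance."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    if vx == 0 or vy == 0:
        return float("nan")
    return cov / sqrt(vx * vy)


def window_correlations(human: Sequence[float], metric: Sequence[float],
                        window: int = 4) -> List[float]:
    """Correlation within each run of `window` systems adjacent in the human ranking."""
    order = sorted(range(len(human)), key=lambda i: human[i], reverse=True)
    results = []
    for start in range(len(order) - window + 1):
        idx = order[start:start + window]
        results.append(pearson([human[i] for i in idx], [metric[i] for i in idx]))
    return results


# Toy system-level scores for six systems (made-up values).
human = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
metric = [0.5, 0.6, 0.7, 0.3, 0.2, 0.1]
correlations = window_correlations(human, metric, window=4)
print([round(c, 3) for c in correlations])
```

Because each window contains only systems that humans rate similarly, a metric that separates strong from weak systems overall can still show weak or negative correlation within a window.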

Implications and Future Work

This paper shows that LLMs, especially GPT-4, are effective evaluators for grammatical error correction and correlate better with human judgments than traditional metrics do. The findings support giving fluency more weight among the evaluation criteria. They also suggest that system-level meta-evaluation is approaching saturation, and that sentence-level correlations, or comparisons restricted to systems with similar performance, may be needed to distinguish strong metrics.

Future research could examine the effect of few-shot prompting and refine prompt design to improve evaluation performance. Extending evaluation to the document level may also yield further insights, given the growing context windows of LLMs.

Conclusion

LLMs, particularly GPT-4, show promise as evaluators for grammatical error correction and capture distinctions that traditional metrics miss. This investigation into LLM-based GEC evaluation highlights the importance of fluency and of LLM scale, and it lays groundwork for further research on evaluation methods in NLP.