Mapping the Increasing Use of LLMs in Scientific Papers: An Analytical Overview

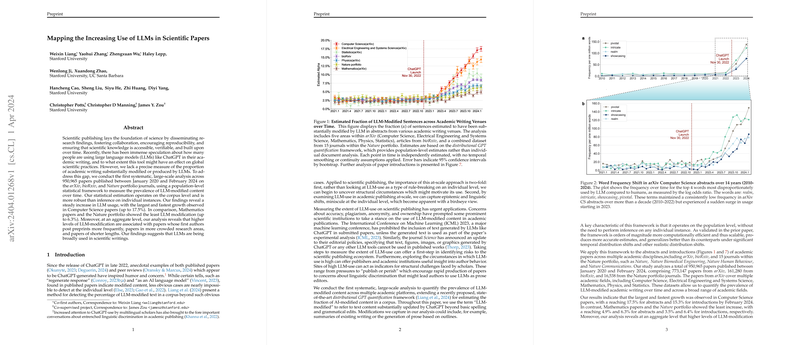

The paper "Mapping the Increasing Use of LLMs in Scientific Papers" by Weixin Liang et al. presents a systematic, large-scale analysis that quantifies the prevalence of LLM-modified content across academic disciplines. The study examines 950,965 papers published between January 2020 and February 2024 on arXiv and bioRxiv and in a portfolio of Nature journals. It uses a statistical framework that estimates the share of LLM-modified text across an entire corpus rather than classifying individual papers. This makes it well suited to tracking population-level shifts in academic writing attributable to LLM use.

Methodology

The authors adapt the distributional GPT quantification framework introduced by Liang et al. (2024), which proceeds in four steps:

- Problem Formulation: The goal is to estimate the fraction $\alpha$ of LLM-modified content in a corpus modeled as a mixture of human-written and LLM-modified text, $(1-\alpha)P + \alpha Q$.

- Parameterization: The framework models token distributions through each token's occurrence probability in human-written text ($P$) and in LLM-modified text ($Q$).

- Estimation: $P$ and $Q$ are estimated from two reference collections, one of known human-written text and one of known LLM-modified text.

- Inference: The fraction $\alpha$ is inferred by maximum likelihood estimation (MLE), choosing the value that maximizes the log-likelihood of the target corpus, $\sum_i \log\big[(1-\alpha)P(x_i) + \alpha Q(x_i)\big]$.
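The four steps above can be sketched in a few dozen lines of Python. This is a minimal, illustrative sketch under stated assumptions, not the authors' implementation: it assumes lowercase whitespace tokenization, represents each sentence as the set of tokens it contains, treats each vocabulary token's occurrence as an independent Bernoulli event, applies Laplace smoothing, and finds the MLE of the mixing fraction α by a simple grid search over [0, 1]. All function names (`occurrence_probs`, `estimate_alpha`, and so on) and the toy corpora are hypothetical.

```python
import math
from typing import Dict, List, Set

Sentence = Set[str]  # a sentence represented as the set of distinct tokens it contains


def tokenize(text: str) -> Sentence:
    # Assumption: lowercase whitespace tokenization; the paper's tokenizer may differ.
    return set(text.lower().split())


def occurrence_probs(sentences: List[Sentence], vocab: List[str], smoothing: float = 1.0) -> Dict[str, float]:
    # Estimation step: probability that each vocabulary token occurs in a sentence,
    # Laplace-smoothed so that no probability is exactly 0 or 1.
    n = len(sentences)
    return {
        tok: (sum(tok in s for s in sentences) + smoothing) / (n + 2 * smoothing)
        for tok in vocab
    }


def sentence_log_likelihood(sentence: Sentence, probs: Dict[str, float]) -> float:
    # Parameterization step: every vocabulary token either occurs or does not occur,
    # and these events are assumed independent.
    return sum(
        math.log(p) if tok in sentence else math.log(1.0 - p)
        for tok, p in probs.items()
    )


def log_mixture(alpha: float, log_p: float, log_q: float) -> float:
    # Numerically stable log((1 - alpha) * P(x) + alpha * Q(x)); handles alpha = 0 and 1.
    terms = []
    if alpha < 1.0:
        terms.append(math.log(1.0 - alpha) + log_p)
    if alpha > 0.0:
        terms.append(math.log(alpha) + log_q)
    m = max(terms)
    return m + math.log(sum(math.exp(t - m) for t in terms))


def estimate_alpha(corpus: List[Sentence], p_human: Dict[str, float],
                   q_llm: Dict[str, float], grid_size: int = 1001) -> float:
    # Inference step: maximize the corpus log-likelihood over a grid of alpha values
    # (grid search is an assumption; any bounded 1-D optimizer would also work).
    log_p = [sentence_log_likelihood(s, p_human) for s in corpus]
    log_q = [sentence_log_likelihood(s, q_llm) for s in corpus]
    best_alpha, best_ll = 0.0, -math.inf
    for k in range(grid_size):
        alpha = k / (grid_size - 1)
        ll = sum(log_mixture(alpha, lp, lq) for lp, lq in zip(log_p, log_q))
        if ll > best_ll:
            best_alpha, best_ll = alpha, ll
    return best_alpha


# Toy usage with synthetic reference collections (not data from the paper).
vocab = ["we", "show", "delve", "into", "results"]
human_ref = [tokenize("we show results")] * 20
llm_ref = [tokenize("we delve into results")] * 20
p_hat = occurrence_probs(human_ref, vocab)
q_hat = occurrence_probs(llm_ref, vocab)

# Target corpus: 7 human-style and 3 LLM-style sentences, so the true fraction is 0.3.
target = [tokenize("we show results")] * 7 + [tokenize("we delve into results")] * 3
alpha_hat = estimate_alpha(target, p_hat, q_hat)
print(f"estimated alpha = {alpha_hat:.3f}")
```

On this toy corpus the estimate should land close to the true mixing fraction of 0.3. Note that no sentence is ever labeled individually: the estimator only asks which α makes the whole corpus most probable, which is what makes the method a corpus-level rather than a document-level tool.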

A noteworthy feature is the two-stage approach to generating realistic LLM-produced training data. Prompting an LLM to write scientific text from scratch risks fabricated or hallucinated content, so the authors instead have an LLM first summarize original human-written text and then expand that summary back into full prose. Because the expanded text is anchored to real content, it yields plausible AI-generated scientific writing.
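A minimal sketch of the summarize-then-expand idea follows, assuming a generic `llm` function that maps a prompt string to a reply string. The prompt wording, the `two_stage_generate` name, and the stub model are hypothetical illustrations, not the authors' prompts or code.

```python
from typing import Callable, List

# Hypothetical interface: any function that sends a prompt to an LLM and returns its reply.
LLM = Callable[[str], str]


def two_stage_generate(original_paragraph: str, llm: LLM) -> str:
    """Produce an LLM-written counterpart of a human-written paragraph in two stages."""
    # Stage 1: condense the real paragraph, so the content the model works from
    # comes from an actual paper rather than being invented.
    summary = llm(
        "Summarize the following paragraph into a concise outline of its key points:\n\n"
        + original_paragraph
    )
    # Stage 2: expand the summary back into full prose; the result is LLM-written
    # text that stays grounded in the original content.
    return llm(
        "Expand the following outline into a full paragraph in academic style:\n\n"
        + summary
    )


# Usage with a stub model that records prompts (stands in for a real LLM API call).
prompts: List[str] = []


def stub_llm(prompt: str) -> str:
    prompts.append(prompt)
    return f"<reply {len(prompts)}>"


generated = two_stage_generate("We measure X on dataset Y.", stub_llm)
print(generated)
```

The stub makes the data flow visible: the second prompt is built from the first reply rather than from the original paragraph, so the final text is entirely model-written while its content still traces back to a real paper. Replacing `stub_llm` with a real API client would turn the sketch into a working generator.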

Main Findings

Temporal Trends in LLM Usage

The analysis reveals a clear upward trend in LLM-modified content beginning roughly five months after the release of ChatGPT. The largest increase was in Computer Science, where the estimated fraction of LLM-modified content reached 17.5% in abstracts and 15.3% in introductions by February 2024. Electrical Engineering and Systems Science also showed substantial growth, while Mathematics and the Nature portfolio journals showed smaller increases.

Attributes Associated with Increased LLM Usage

- First-Author Preprint Posting Frequency: Papers whose first authors posted more preprints on arXiv showed higher levels of LLM-modified content. By February 2024, the estimated fraction among papers by more prolific preprint posters was 19.3% for abstracts and 16.9% for introductions, compared with 15.6% and 13.7%, respectively, for papers by less prolific authors. The pattern holds across Computer Science subcategories and may reflect publication pressure encouraging researchers to adopt LLM tools.

- Paper Similarity: The extent of LLM modification is closely associated with how similar a paper is to its closest peer. Papers more similar to their nearest peer (below the median distance in the embedding space) had a higher estimated fraction of LLM-modified content, reaching 22.2% in abstracts by February 2024. This may indicate that LLM use contributes to more homogeneous writing styles, or that LLMs are used more heavily in densely populated research fields.

- Paper Length: Shorter papers consistently showed a higher fraction of LLM-modified content than longer ones. By February 2024, an estimated 17.7% of abstract sentences in shorter papers were LLM-modified, versus 13.6% in longer papers. This suggests that authors of shorter papers, possibly working under tighter time constraints, rely more heavily on LLM assistance.
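The similarity comparison can be made concrete with a short sketch: compute each paper's distance to its nearest peer in embedding space, split the papers at the median distance, and then estimate the LLM-modified fraction separately for each group. The sketch assumes cosine distance, brute-force nearest-neighbor search, and toy 2-D vectors; the function names are hypothetical, and the paper's embedding model and distance metric may differ.

```python
import math
from statistics import median
from typing import List, Sequence, Tuple


def cosine_distance(u: Sequence[float], v: Sequence[float]) -> float:
    # 1 - cosine similarity; an assumed metric, the paper's choice may differ.
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("zero-length embedding")
    return 1.0 - dot / (norm_u * norm_v)


def nearest_peer_distances(embeddings: List[Sequence[float]]) -> List[float]:
    # For each paper, the distance to its closest *other* paper (brute force, O(n^2)).
    return [
        min(cosine_distance(e, other) for j, other in enumerate(embeddings) if j != i)
        for i, e in enumerate(embeddings)
    ]


def split_by_median(values: List[float]) -> Tuple[List[int], List[int]]:
    # Indices strictly below the median ("more similar") vs. the rest ("less similar").
    m = median(values)
    below = [i for i, v in enumerate(values) if v < m]
    rest = [i for i, v in enumerate(values) if v >= m]
    return below, rest


# Toy 2-D "embeddings" (real ones would come from a text-embedding model).
embeddings = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
distances = nearest_peer_distances(embeddings)
more_similar, less_similar = split_by_median(distances)
print(distances, more_similar, less_similar)
```

Each index group would then be passed on its own to the corpus-level estimator, and the same group-then-estimate pattern underlies the preprint-frequency and paper-length comparisons, with their own grouping rules in place of the median distance. For a corpus of hundreds of thousands of papers, the quadratic brute-force search would be replaced by a nearest-neighbor index.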

Implications and Future Outlook

The paper provides granular insights into how and where LLMs are being integrated into scientific workflows. These findings have multiple implications:

- Research Integrity: The growing prevalence of LLM-modified content raises questions about authenticity and originality, and highlights potential risks such as the homogenization of scientific writing styles and dependence on proprietary LLM tools.

- Policy Formulation: The evidence supports the need for clear guidelines and policies regarding the ethical use of LLMs in academic writing, as exemplified by the stances taken by ICML and the journal Science.

- Future Research Directions: Future investigations could extend this work to other LLMs and explore the causal relationship between LLM usage and associated factors such as research productivity, competitive pressures, and quality of scholarly output.

In summary, this paper provides a comprehensive quantitative foundation for understanding LLM usage trends in academia, highlighting how adoption varies across scientific fields. Its findings offer a basis for formulating policies and ethical guidelines that can support the responsible and equitable integration of LLMs into the scholarly ecosystem.