Estimating the Prevalence of LLM-Assisted Writing in Scholarly Literature

Andrew Gray's paper examines how LLMs such as ChatGPT are being used in scholarly communication and academic publishing. Using keywords that appear disproportionately often in LLM-generated text, it estimates the prevalence of LLM-assisted writing in scholarly literature published in 2023.

In late 2022, the public release of ChatGPT (built on GPT-3.5) democratized access to high-quality text generation and prompted widespread discussion of its implications for academic writing. Gray's research builds on early observations and surveys indicating increasing use of such tools by researchers, alongside the establishment of usage guidelines by major publishers. For instance, Wiley permits the use of LLMs for content development provided that authors retain full responsibility and are transparent about that use. However, evidence of the true extent and nature of LLM use in scholarly publications has so far been largely anecdotal.

Methodology

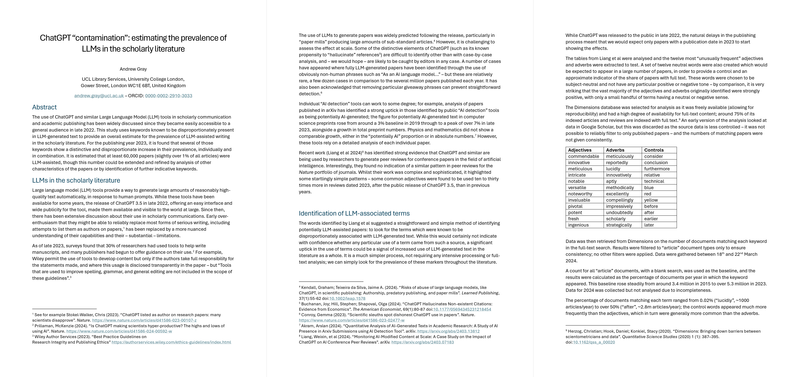

Gray identifies LLM-influenced text by looking for specific keywords known to appear frequently in LLM-generated content. The method builds on the analysis presented by Liang et al., who identified certain adjectives and adverbs as markers of LLM usage. Gray extends this by selecting both these identified terms and a set of neutral control words. Using the Dimensions database, a comprehensive repository with a significant proportion of full-text articles, the paper quantifies the occurrence of these terms in scientific publications over recent years.

The key adjectives examined include "commendable," "innovative," "intricate," "notable," "versatile," "noteworthy," "invaluable," "pivotal," "potent," "fresh," and "ingenious." Similarly, adverbs like "meticulously," "reportedly," "lucidly," "innovatively," "aptly," "methodically," and "excellently" were considered. The analysis extends to combinations of these terms to detect potential LLM-assisted text with greater confidence.
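
The counting step described above can be sketched in a few lines of Python. This is a minimal illustration, not Gray's actual procedure: it assumes the texts are available locally as (year, text) pairs rather than queried through Dimensions, the function names and the `min_hits` parameter are hypothetical, and the toy corpus is invented purely to make the example runnable.

```python
import re
from collections import Counter

# Strong indicator terms quoted in the summary above.
STRONG_TERMS = {"intricate", "meticulously"}


def distinct_words(text):
    """Return the set of lowercase alphabetic words in a text."""
    return set(re.findall(r"[a-z]+", text.lower()))


def count_matching_documents(docs, terms, min_hits=1):
    """Count documents per year containing at least `min_hits` distinct terms.

    `docs` is an iterable of (year, text) pairs. `min_hits` is a hypothetical
    knob: 1 mimics a single-word search, 2 or more mimics a combined search.
    """
    counts = Counter()
    for year, text in docs:
        if len(distinct_words(text) & terms) >= min_hits:
            counts[year] += 1
    return counts


# Hypothetical toy corpus, invented for illustration only.
TOY_DOCS = [
    (2022, "We describe a simple method and its results."),
    (2023, "This intricate framework was meticulously evaluated."),
    (2023, "After the experiment, results were recorded."),
    (2023, "An intricate analysis of the results."),
]

single = count_matching_documents(TOY_DOCS, STRONG_TERMS)
combined = count_matching_documents(TOY_DOCS, STRONG_TERMS, min_hits=2)
print(single[2023], combined[2023])
```

Requiring several distinct terms in the same document trades recall for confidence: fewer documents match, but a match is less likely to be a coincidence of ordinary human word choice.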

Findings

Gray's analysis produced three main findings:

- Single Word Analysis: The frequency of control words remained relatively stable year-over-year, whereas the indicator adjectives and adverbs spiked in 2023. For instance, "intricate" and "commendable" showed increases of 117% and 83%, respectively.

- Combined Terms Analysis: Combining terms revealed even more substantial increases. Articles with at least one strong indicator term (like "intricate" or "meticulously") appeared 87.4% more frequently in 2023 than in prior years. Similar patterns were observed across various groupings of strong and medium indicators, pointing towards an escalating prevalence of LLM usage.

- Subject Area Variations: There was variation in the rate of LLM-related term usage across different fields. Engineering and biomedical sciences exhibited higher occurrences of these terms compared to other domains.
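
The percentage increases reported above are year-over-year relative changes, and the control words serve as a baseline for what "no change" looks like. The following sketch shows that arithmetic under stated assumptions: the function names are hypothetical, the counts are invented placeholders rather than figures from the paper, and subtracting the control change is one simple way to express the comparison, not necessarily the one Gray used.

```python
def percent_change(previous, current):
    """Relative change from `previous` to `current`, in percent."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) * 100.0 / previous


def change_beyond_control(term_prev, term_curr, control_prev, control_curr):
    """Change in an indicator term minus the change in a control word.

    A value near zero suggests the term merely tracked overall growth;
    a large positive value suggests a shift specific to that term.
    """
    return percent_change(term_prev, term_curr) - percent_change(
        control_prev, control_curr
    )


# Hypothetical document counts for two consecutive years (not from the paper).
term_growth = percent_change(1000, 1500)
control_growth = percent_change(20000, 20400)
beyond_control = change_beyond_control(1000, 1500, 20000, 20400)
print(term_growth, control_growth, beyond_control)
```

The inputs can be raw document counts or shares of all documents published in a year; using shares removes the effect of overall growth in publication volume before the comparison is made.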

Implications

The findings underscore the growing integration of LLM tools in academic writing. The estimate that 60,000 to 85,000 papers published in 2023 reflect LLM assistance raises several scholarly and ethical considerations:

- Scholarly Integrity: The lack of explicit disclosure regarding LLM usage poses significant questions about research integrity. The tools might go beyond stylistic polishing, influencing the substantive content of papers without proper authorial oversight.

- Future LLM Training: Gray raises a crucial point about the potential recursive impact on future LLMs. If LLM-generated text becomes a predominant part of training datasets, it could lead to model collapse, in which models trained on artificially generated text produce output of progressively lower quality, adversely affecting future generations of LLMs.

Future Directions

Gray's paper highlights the necessity for ongoing monitoring and refined methodologies to better understand LLM impacts. Future research could explore other markers, the prevalence of LLM-generated text in various publication types, and its correlation with different collaborative structures. Understanding these dynamics could inform more robust guidelines for LLM usage in academic contexts.

In conclusion, Andrew Gray's paper provides a foundational estimate of LLM prevalence in scholarly literature. This work calls for increased transparency and ethical considerations in the use of LLMs, aligning with broader efforts to maintain integrity in scientific communication. Potential future investigations could significantly enhance our understanding of LLMs' role and influence, guiding their ethical and effective integration into academic workflows.