Exploring Stable Code: A New Benchmark in Code Language Modeling

Introduction to Stable Code

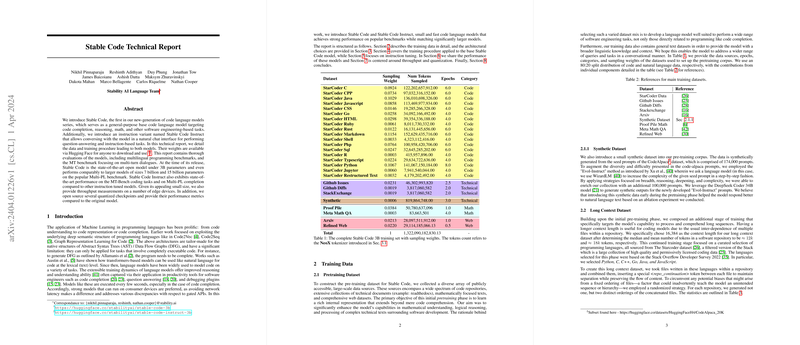

Stable Code is a new code language model (LM) aimed at code completion, reasoning, mathematical problem-solving, and broader software engineering tasks. Alongside it, the report introduces Stable Code Instruct, a variant with a natural language interface for question answering and instruction following. The technical report describes the models' training regime, datasets, and evaluations, and both models are released to the research community through Hugging Face. Stable Code stands out for its strong results on multilingual programming tasks, where it performs on par with much larger models.

Training Data and Architecture

The report describes how the training data was sourced and prepared. The corpus blends code repositories, technical documents, mathematical texts, and web data, chosen to give the model a broad understanding of material relevant to software development. This mix improves the model's comprehension and also gives it conversational ability, which makes it useful across a wide range of software engineering queries.

The model's architecture builds on the Stable LM 3B framework, with adjustments that include Rotary Position Embeddings, LayerNorm modifications, and refined bias configurations. These choices emphasize efficiency and performance, draw on backend optimizations, and reflect recent advances in LLM design.
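To make the rotary idea concrete, here is a minimal sketch of Rotary Position Embeddings in plain Python. It is illustrative only and is not taken from the Stable Code implementation: the function names, the interleaved pairing of dimensions, and the base of 10000 are assumptions, and real implementations operate on batched tensors and may pair dimensions differently or rotate only part of each head.

```python
import math


def rotary_embed(vec: list[float], position: int, base: float = 10000.0) -> list[float]:
    """Rotate consecutive pairs of `vec` by angles that grow with `position`.

    Assumed convention: dimensions (0, 1), (2, 3), ... form the rotated pairs.
    """
    dim = len(vec)
    if dim % 2:
        raise ValueError("dimension must be even")
    out = []
    for i in range(0, dim, 2):
        # Each pair gets its own frequency; later pairs rotate more slowly.
        theta = position * base ** (-i / dim)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a: list[float], b: list[float]) -> float:
    """Plain dot product, standing in for an attention score."""
    return sum(x * y for x, y in zip(a, b))
```

Because each pair is rotated by an angle proportional to its position, the dot product between a rotated query and a rotated key depends only on the distance between their positions, not on the absolute positions themselves.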

Training Methodology and Model Initialization

The report outlines a multi-stage training approach that includes a Fill in the Middle (FIM) objective. Traditional causal language modeling conditions only on the text to the left of the token being predicted, whereas code is often edited in the middle of a file, where the context on both sides matters. FIM addresses this limitation by exposing the model to more varied structural patterns, which improves its comprehension and prediction of code.
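As an illustration of how a FIM training example can be built, the sketch below rearranges a document into prefix, suffix, middle order so that an ordinary left-to-right model learns to fill a gap. This is a generic sketch, not the report's data pipeline: the sentinel strings and function names are hypothetical, and the character-level random split is an assumption made for simplicity.

```python
import random

# Hypothetical sentinel strings; a real tokenizer defines its own special tokens.
FIM_PREFIX = "<fim_prefix>"
FIM_SUFFIX = "<fim_suffix>"
FIM_MIDDLE = "<fim_middle>"


def to_fim_example(document: str, rng: random.Random) -> str:
    """Rearrange a document into prefix-suffix-middle order."""
    # Two random cut points split the text into prefix, middle, and suffix.
    a, b = sorted(rng.randint(0, len(document)) for _ in range(2))
    prefix, middle, suffix = document[:a], document[a:b], document[b:]
    # Training stays left to right, but the middle is now predicted
    # after both the prefix and the suffix have been seen.
    return f"{FIM_PREFIX}{prefix}{FIM_SUFFIX}{suffix}{FIM_MIDDLE}{middle}"


def from_fim_example(example: str) -> str:
    """Invert to_fim_example, assuming the text contains no sentinel strings."""
    body = example[len(FIM_PREFIX):]
    prefix, rest = body.split(FIM_SUFFIX, 1)
    suffix, middle = rest.split(FIM_MIDDLE, 1)
    return prefix + middle + suffix
```

At inference time the same layout lets an editor supply the code before and after the cursor and ask the model to generate only the missing span.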

The training section also compares models trained from scratch with models initialized from pre-trained LMs. The findings favor pre-trained initialization and point to a beneficial transfer between natural language ability and code comprehension.

Fine-Tuning and Alignment

After base model training, Stable Code Instruct is fine-tuned on a curated blend of datasets chosen to improve conversational interaction and response quality. The fine-tuning phase follows established practice: supervised fine-tuning, followed by Direct Preference Optimization (DPO).
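For readers unfamiliar with Direct Preference Optimization, the following sketch computes the standard DPO loss for a single preference pair from summed log-probabilities. It is a generic illustration and not the report's training code: the function name, argument names, and the default `beta` of 0.1 are assumptions, and a real setup would compute these values over batches with an autograd framework.

```python
import math


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift
) -> float:
    """Return -log(sigmoid(beta * margin)) for one preference pair."""
    # How much more the policy favors each response than the frozen reference does.
    chosen_gain = policy_chosen_logp - ref_chosen_logp
    rejected_gain = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_gain - rejected_gain)
    # Numerically stable form of log(1 + exp(-z)).
    return max(-z, 0.0) + math.log1p(math.exp(-abs(z)))
```

When the policy matches the reference model the loss equals log 2, and it falls as the policy raises the likelihood of the preferred response relative to the rejected one.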

Performance Evaluations

The benchmark results support the models' capabilities. On code completion tasks, Stable Code performs on par with much larger models across a range of programming languages. On more specialized tasks, such as Fill in the Middle (FIM) and SQL query generation, the models also perform strongly, which indicates a solid grasp of code context and database structure.

On instruction-based tasks, Stable Code Instruct also performs well, which suggests that fine-tuning successfully added conversational ability. Taken together, these evaluations position the models as competitive, and in some cases superior, alternatives among code LMs.

Throughput and Quantization Considerations

The report also covers throughput measurements and quantization strategies, which show that the model is practical in real-world settings, especially on edge devices. It reports substantial throughput gains from reduced precision, an important consideration for developers who deploy these models across varied computing environments.
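To show what a precision adjustment involves at its simplest, the sketch below applies symmetric 8-bit quantization to a list of weights. It is a toy example under stated assumptions and not the scheme used in the report: the function names are hypothetical, a single scale is shared by all weights, and production quantizers typically work per channel or per block on tensors.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero input, where any scale would do.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the integer codes."""
    return [v * scale for v in quantized]
```

Each weight then occupies one byte instead of two or four, which cuts memory traffic, and the rounding error per weight is at most half of the scale.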

Conclusions and Implications

The Stable Code series is a notable advance for code LMs, combining the general strengths of language models with the specific demands of software engineering tasks. The detailed account of data sourcing, training methodology, and fine-tuning reflects a thorough effort to build models that are both technically strong and versatile in application. The performance results reinforce the models' competitiveness and make them valuable to researchers and practitioners alike.

Looking forward, the implications of Stable Code and Stable Code Instruct extend beyond code completion. They point toward changes in how developers interact with tools and think about building software, and toward models that are better attuned to developers' varied needs. As the field progresses, further refinements and applications are likely to build on this work.